Navigating Activity Cliffs: Challenges and AI Solutions for Predictive Materials and Drug Discovery


Abstract

This article provides a comprehensive examination of the activity cliff phenomenon, where minute structural changes in molecules cause significant property shifts, posing a major challenge for AI in materials and drug discovery. We explore the foundational concepts of activity cliffs and their impact on predictive modeling, review cutting-edge AI methodologies like contrastive reinforcement learning and target-aware models designed to address these discontinuities, analyze benchmarking results and common failure modes of existing models, and discuss rigorous validation frameworks. Aimed at researchers and drug development professionals, this synthesis offers a roadmap for developing more robust, cliff-aware generative AI models to accelerate reliable materials innovation and therapeutic development.

Defining the Activity Cliff Phenomenon: Why Small Changes Create Big Problems in AI

What is an Activity Cliff? A Formal Definition and Key Examples

In the fields of medicinal chemistry and chemoinformatics, an activity cliff (AC) refers to a pair or group of structurally similar compounds that exhibit a large difference in potency against the same biological target. This phenomenon represents a critical discontinuity in structure-activity relationships (SAR), presenting both challenges and opportunities for drug discovery. Activity cliffs defy the traditional similarity principle in chemistry, which states that structurally similar molecules should have similar biological effects. For researchers in materials generative AI, understanding activity cliffs is paramount, as these SAR discontinuities significantly impact the performance of machine learning models in molecular property prediction and de novo molecular design. This guide provides a formal definition of activity cliffs, quantitative methods for their identification, and key examples, with a specific focus on implications for AI-driven research.

Formal Definition and Core Concepts

The Formal Definition

An activity cliff is formally defined as a pair of structurally similar or analogous compounds that are active against the same biological target but display a large difference in potency [1]. This definition rests upon two fundamental criteria that must be satisfied simultaneously:

- Structural Similarity: The two compounds must meet a specified criterion of molecular similarity.

- Potency Difference: The difference in their biological activity must exceed a defined threshold [2] [1].

This phenomenon is often described as the embodiment of SAR discontinuity, where minor structural modifications lead to significant, often abrupt, shifts in biological activity [3].

The Underlying Principle and Its Exception

The concept of an activity cliff directly challenges the molecular similarity principle, a foundational concept in chemistry and drug discovery. This principle posits that chemically similar compounds should exhibit similar biological activities [4]. Activity cliffs are the notable exception to this rule, demonstrating that small chemical changes can sometimes lead to dramatic differences in potency [4] [1]. Understanding these exceptions is crucial for SAR studies and AI-based molecular design, as they reveal critical chemical transformations with substantial biological impact.

Quantitative Identification of Activity Cliffs

The Activity Cliff Index (ACI)

To quantitatively identify activity cliffs, researchers employ a metric known as the Activity Cliff Index (ACI). This index mathematically captures the "smoothness" of the SAR landscape around a compound. The ACI for two compounds, x and y, is defined using the following formula [3]:

$$ ACI(x,y;f):=\frac{|f(x)-f(y)|}{d_T(x,y)}, \quad x,y \in S $$

In this formula:

- $f(x)$ and $f(y)$ represent the biological activity (e.g., binding affinity) of compounds x and y.

- $d_T(x,y)$ denotes the Tanimoto distance, a measure of structural dissimilarity based on molecular fingerprints [3].

A high ACI value indicates a steep activity cliff: a small structural change (low Tanimoto distance) results in a large activity difference (high absolute activity difference).
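As a minimal sketch of the formula above, the ACI can be computed directly from two activity values and a precomputed Tanimoto distance. The function name, the example pKi values, and the handling of a zero distance (where the ratio is undefined) are assumptions made for illustration, not part of the cited definition.

```python
def activity_cliff_index(f_x: float, f_y: float, d_t: float) -> float:
    """ACI(x, y; f) = |f(x) - f(y)| / d_T(x, y)."""
    if not 0.0 <= d_t <= 1.0:
        raise ValueError("Tanimoto distance must lie in [0, 1]")
    if d_t == 0.0:
        # Assumption of this sketch: identical fingerprints give an
        # infinite index if the activities differ, and 0 otherwise.
        return float("inf") if f_x != f_y else 0.0
    return abs(f_x - f_y) / d_t


# Hypothetical pKi values and Tanimoto distances.
steep = activity_cliff_index(9.0, 6.5, 0.1)   # large activity gap, tiny distance
smooth = activity_cliff_index(9.0, 8.8, 0.5)  # small activity gap, larger distance
print(f"steep pair ACI:  {steep:.1f}")
print(f"smooth pair ACI: {smooth:.1f}")
```

The steep pair scores far higher than the smooth pair, which is the behavior the index is meant to capture.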

Criteria for Activity Cliff Assessment

Systematic identification of activity cliffs requires precise criteria for molecular similarity and potency differences. The table below summarizes the primary criteria used in the field.

Table 1: Key Criteria for Defining and Identifying Activity Cliffs

| Criterion | Description | Common Measures & Thresholds |
|---|---|---|
| Structural Similarity | Assesses the degree of molecular structural resemblance. | Fingerprint-based: Tanimoto similarity (Tc) computed on descriptors such as ECFP [1]; thresholds are representation-dependent [1]. Substructure-based: matched molecular pairs (MMPs), in which two compounds differ only at a single site [3] [5] [1]; no threshold is needed [1]. |
| Potency Difference | Quantifies the difference in biological activity. | Constant threshold: a difference of at least 100-fold (e.g., ΔpKi ≥ 2.0) is frequently applied [5] [1]. Class-dependent threshold: statistically derived per activity class (e.g., mean + 2 standard deviations of the pairwise potency difference distribution) [1]. |
| Potency Measurement | The experimental data used for activity comparison. | Equilibrium constants (Ki or KD) are generally preferred for their accuracy [1]. pKi (= -log10 Ki) is often used for analysis [3] [5]. |
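The two potency-difference criteria in the table can be contrasted with a short sketch. The list of pairwise ΔpKi values is invented for illustration, and the use of the sample standard deviation is an assumption; the cited work may compute the class-dependent threshold differently.

```python
from statistics import mean, stdev

CONSTANT_THRESHOLD = 2.0  # ΔpKi >= 2.0, i.e. at least a 100-fold difference


def class_dependent_threshold(pairwise_diffs):
    """Mean + 2 standard deviations of the pairwise potency differences."""
    # Assumption: sample standard deviation over all pairs of one activity class.
    return mean(pairwise_diffs) + 2 * stdev(pairwise_diffs)


# Hypothetical ΔpKi values for similar compound pairs of one activity class.
diffs = [0.1, 0.3, 0.2, 0.5, 0.4, 2.6, 0.2, 0.3]
threshold = class_dependent_threshold(diffs)

for d in (2.1, 2.6):
    print(
        f"ΔpKi={d}: constant={d >= CONSTANT_THRESHOLD}, "
        f"class-dependent={d >= threshold}"
    )
```

In this toy distribution a ΔpKi of 2.1 passes the constant threshold but not the class-dependent one, which shows how the two criteria can disagree.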

Generations of Activity Cliffs

The methodology for defining activity cliffs has evolved, leading to the recognition of different "generations" that reflect increasing chemical interpretability and relevance.

Table 2: Evolution of the Activity Cliff Concept through Different Generations

| Generation | Similarity Criterion | Potency Difference Criterion | Key Characteristics |
|---|---|---|---|
| First | Numerical (e.g., fingerprint-based Tc) or substructure-based. | Constant threshold across all activity classes. | Provides a broad, systematic identification method [1]. |
| Second | (R)MMP-cliff formalism (single substitution site). | Variable, activity class-dependent threshold. | Focuses on structural analogs, improving chemical interpretability [1]. |
| Third | Analog series (single or multiple substitution sites). | Variable, activity class-dependent threshold. | Highest SAR information content, directly relevant to lead optimization [1]. |

Experimental Protocols for Activity Cliff Analysis

Workflow for Systematic Identification

The following diagram illustrates a standard computational workflow for the systematic identification and analysis of activity cliffs in compound datasets.

Figure: Activity Cliff Identification Workflow

Step-by-Step Protocol:

1. Data Curation & Standardization: Extract compound structures (e.g., SMILES strings) and associated potency data (preferably Ki or KD values) from reliable databases such as ChEMBL [5] [4]. Standardize structures using a tool like the ChEMBL structure pipeline to remove salts and solvents and to standardize the representation [4].

2. Molecular Representation:

   - For fingerprint-based similarity, generate extended-connectivity fingerprints (ECFP4 or ECFP6) or other molecular fingerprints [6] [4].

   - For substructure-based similarity, systematically fragment compounds to generate Matched Molecular Pairs (MMPs), defined as pairs of compounds that differ only at a single site [5]. Apply size restrictions to ensure meaningful analog relationships (e.g., the core must be at least twice the size of the substituent, and the substituent is restricted to a maximum of 13 heavy atoms) [5].

3. Apply Similarity Criterion:

   - Using fingerprints, calculate the Tanimoto coefficient for compound pairs. A pair is considered structurally similar if its Tc exceeds a chosen threshold.

   - Using MMPs, similarity is inherently defined by the shared core structure.

4. Apply Potency Difference Criterion: For the similar pairs identified, calculate the difference in potency. A common threshold is a 100-fold difference (ΔpKi ≥ 2.0) [5]. Alternatively, calculate an activity class-dependent threshold based on the distribution of potency differences in the dataset [1].

5. Activity Cliff Identification: Compound pairs that satisfy both the similarity and potency difference criteria are classified as activity cliffs.

6. Network and SAR Analysis: Construct an activity cliff network where nodes represent compounds and edges represent pairwise cliff relationships. These networks often reveal clusters of coordinated cliffs, which contain rich SAR information [5] [1]. Simplified network representations can transform complex clusters into easily interpretable formats based on Matching Molecular Series (MMS) [5].
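The fingerprint-based branch of this protocol (similarity criterion, potency criterion, cliff classification) can be sketched in a few lines. To stay dependency-free, fingerprints are represented here as plain sets of "on" bit indices rather than real ECFPs; the compound identifiers, bit sets, pKi values, and the similarity threshold of 0.6 are all hypothetical.

```python
from itertools import combinations


def tanimoto(a: set, b: set) -> float:
    """Tanimoto coefficient of two fingerprints given as sets of on-bits."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def find_cliffs(compounds, sim_threshold=0.6, dpki_threshold=2.0):
    """Return pairs that are both structurally similar and far apart in potency."""
    cliffs = []
    for (id_a, (fp_a, pki_a)), (id_b, (fp_b, pki_b)) in combinations(
        compounds.items(), 2
    ):
        tc = tanimoto(fp_a, fp_b)
        dpki = abs(pki_a - pki_b)
        if tc >= sim_threshold and dpki >= dpki_threshold:
            cliffs.append((id_a, id_b, round(tc, 2), round(dpki, 2)))
    return cliffs


# Hypothetical toy data: id -> (fingerprint on-bits, pKi).
compounds = {
    "cpd1": ({1, 2, 3, 4, 5, 6, 7, 8}, 9.1),
    "cpd2": ({1, 2, 3, 4, 5, 6, 7, 9}, 6.3),
    "cpd3": ({1, 2, 3, 4, 5, 6, 7, 8, 10}, 8.9),
    "cpd4": ({20, 21, 22, 23}, 5.0),
}

cliffs = find_cliffs(compounds)
for id_a, id_b, tc, dpki in cliffs:
    print(f"{id_a} / {id_b}: Tc={tc}, ΔpKi={dpki}")
```

Note that cpd1 and cpd3 are highly similar but close in potency, and cpd4 is potent-distant but structurally unrelated, so neither case is reported: both criteria must hold at once.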

The Scientist's Toolkit: Essential Research Reagents

The table below lists key computational tools and data resources essential for experimental activity cliff research.

Table 3: Key Research Resources for Activity Cliff Studies

| Item / Resource | Function / Description | Relevance to Activity Cliff Research |
|---|---|---|
| ChEMBL Database | A large-scale, open-source database of bioactive molecules with drug-like properties. | Primary public source for extracting curated compound structures and associated bioactivity data (e.g., Ki, IC50) for various protein targets [3] [5] [4]. |
| RDKit | Open-source cheminformatics software. | Used for standardizing structures, computing 2D/3D molecular descriptors, generating fingerprints (e.g., ECFP), and fragmenting molecules for MMP analysis [6] [4]. |
| Cytoscape | An open-source platform for complex network analysis and visualization. | Used to construct, visualize, and analyze activity cliff networks, helping to decipher coordinated cliff formations and SAR patterns [5]. |
| Matched Molecular Pair (MMP) | A pair of compounds that are only distinguished by a structural modification at a single site. | A core, chemically intuitive concept for defining the structural similarity criterion in advanced activity cliff definitions (MMP-cliffs) [5] [1]. |
| Docking Software | Software (e.g., AutoDock Vina, Glide) for predicting protein-ligand binding modes and affinities. | Used for structure-based analysis of activity cliffs and as a target-specific scoring function for de novo molecular design, capable of reflecting activity cliffs [3] [2]. |

Key Examples and Impact on AI Research

A Classic Example: Factor Xa Inhibitors

A canonical example of an activity cliff involves inhibitors of blood coagulation factor Xa. As shown in a representative case, the addition of a single hydroxyl group (-OH) to a parent compound can lead to an increase in inhibition potency of almost three orders of magnitude [4]. This small chemical modification drastically improves binding affinity, creating a steep activity cliff that is critical for SAR understanding.

Coordinated Activity Cliffs and Network Representations

Activity cliffs are rarely isolated pairs. More than 90% of activity cliffs are formed in a coordinated manner by groups of structurally similar compounds with significant potency variations [5] [1]. In network representations, these give rise to complex clusters. For example, the activity cliff network for melanocortin receptor 4 ligands consists of 426 cliffs organized in 17 clusters, while the network for coagulation factor Xa ligands contains 915 cliffs with several densely connected clusters [5]. Analyzing these clusters provides higher SAR information content than studying individual cliffs.
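A cliff network of this kind can be explored with a simple connected-components pass: compounds are nodes, cliffs are edges, and each component is one cluster. The sketch below is illustrative only; the edge list is hypothetical, and counting a cliff as "coordinated" when its cluster contains more than two compounds is an assumption of this example.

```python
from collections import defaultdict


def cliff_clusters(cliff_pairs):
    """Group compounds into clusters (connected components) of the cliff network."""
    adjacency = defaultdict(set)
    for a, b in cliff_pairs:
        adjacency[a].add(b)
        adjacency[b].add(a)

    seen, clusters = set(), []
    for start in adjacency:
        if start in seen:
            continue
        stack, component = [start], set()
        while stack:  # depth-first traversal of one component
            node = stack.pop()
            if node in component:
                continue
            component.add(node)
            stack.extend(adjacency[node] - component)
        seen |= component
        clusters.append(component)
    return clusters


def coordinated_fraction(cliff_pairs):
    """Fraction of cliffs that sit in a cluster of more than two compounds."""
    size_of = {}
    for cluster in cliff_clusters(cliff_pairs):
        for node in cluster:
            size_of[node] = len(cluster)
    coordinated = sum(1 for a, _ in cliff_pairs if size_of[a] > 2)
    return coordinated / len(cliff_pairs)


# Hypothetical cliff edges: A-B and B-C share compound B; D-E is isolated.
pairs = [("A", "B"), ("B", "C"), ("D", "E")]
clusters = cliff_clusters(pairs)
fraction = coordinated_fraction(pairs)
print(f"{len(clusters)} clusters, {fraction:.0%} of cliffs coordinated")
```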

Critical Implications for Generative AI and Molecular Property Prediction

The presence of activity cliffs has profound implications for AI in drug discovery and materials science.

A Major Challenge for QSAR Models: Activity cliffs are a well-documented source of prediction error for quantitative structure-activity relationship (QSAR) models [4]. Both classical and modern deep learning models experience a significant drop in predictive accuracy when applied to "cliffy" compounds [6] [4]. This is because ML models tend to generate analogous predictions for structurally similar molecules, a principle that fails at activity cliffs [3].

Informing Generative Molecular Design: The limitations of standard benchmarks have spurred the development of AI frameworks that explicitly account for activity cliffs. The Activity Cliff-Aware Reinforcement Learning (ACARL) framework is a prime example. ACARL leverages a novel Activity Cliff Index to identify these critical points and incorporates them into the reinforcement learning process through a tailored contrastive loss function. This guides the generative model to focus on high-impact SAR regions, leading to superior performance in generating high-affinity molecules compared to state-of-the-art algorithms [3]. The integration of domain knowledge about SAR discontinuities is thus key to advancing reliable AI for molecular design.

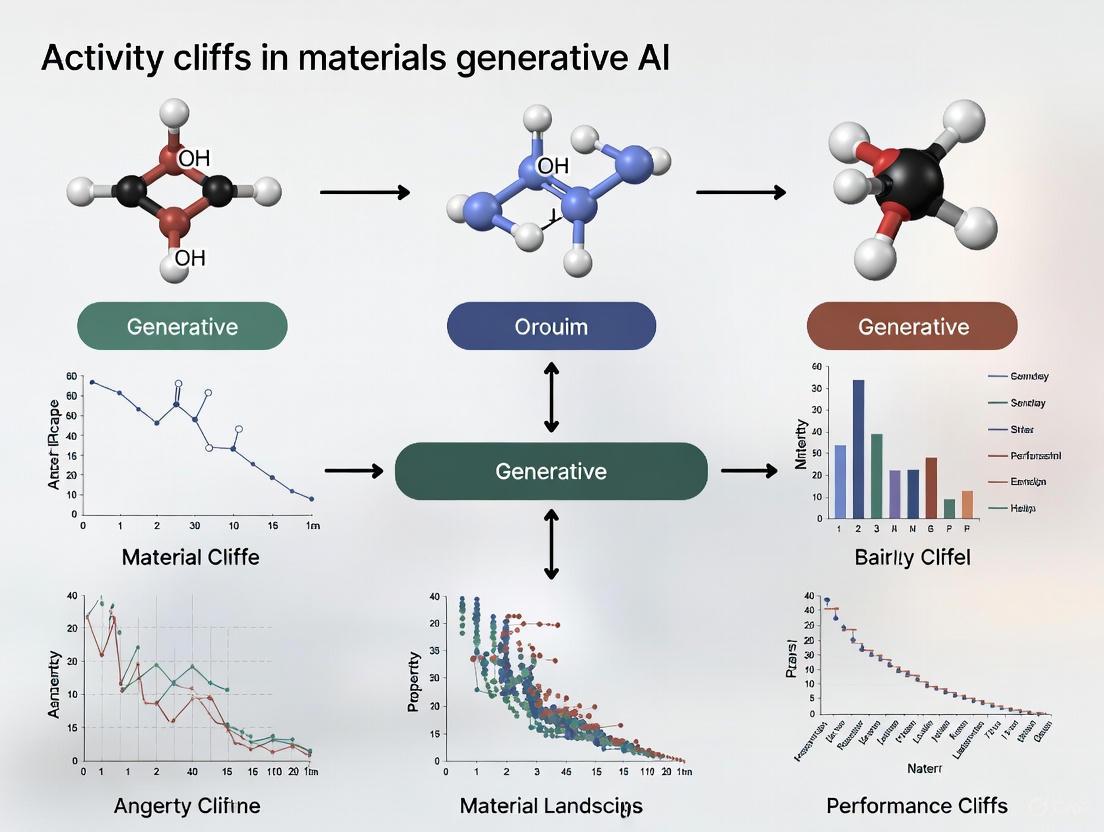

Visualizing the AI Framework for Activity Cliffs

The diagram below illustrates the core architecture of an AI system, like ACARL, designed to address activity cliffs in de novo molecular design.

Figure: AI Framework for Activity Cliff Awareness

The principle of molecular similarity is a foundational axiom in quantitative structure-activity relationship (QSAR) modeling, positing that structurally similar molecules are likely to exhibit similar biological activities [4] [2]. This principle provides the theoretical basis for predicting biological activity based on chemical structure and enables the extrapolation of activity from known compounds to unknown analogs. Activity cliffs (ACs) represent a critical exception to this rule, defined as pairs of structurally similar compounds that nevertheless exhibit large differences in their binding affinity for a given target [4] [7]. The existence of ACs directly challenges the core assumption of QSAR, creating significant discontinuities in the structure-activity relationship (SAR) landscape that complicate both prediction and optimization efforts in drug discovery [4] [8].

The quantitative definition of an activity cliff typically depends on two criteria: a similarity criterion (often based on Tanimoto similarity or matched molecular pairs) and a potency difference criterion (usually requiring a difference of at least two orders of magnitude in activity) [2]. For instance, in a frequently cited example, the addition of a single hydroxyl group to a factor Xa inhibitor results in an increase in inhibition of almost three orders of magnitude [4]. Such dramatic shifts in potency from minimal structural modifications defy the gradual changes expected under the similarity principle and reveal the complex, non-linear nature of molecular recognition in biological systems.

The Mechanistic Basis of Activity Cliff Formation

Structural and Energetic Underpinnings

The formation of activity cliffs can be rationalized through several structural and energetic mechanisms that operate at the molecular level. Small structural modifications may compromise critical interactions with the receptor, alter binding modes, or hamper the adoption of energetically favorable conformations [2]. At the structural level, activity cliffs can be analyzed through differences in hydrogen bond formation, ionic interactions, lipophilic contacts, aromatic stacking, the presence of explicit water molecules, and stereochemical considerations [2].

The 3D interpretation of activity cliffs suggests that local differences in an overall similar pattern of contacts with the target can explain the significant potency differences between cliff-forming partners [2]. This perspective expands the traditional ligand-centric view of 2D activity cliffs by incorporating structural information about the target protein and its specific interactions with ligands. For example, a minor modification might block a key interaction without significantly altering the overall binding mode, yet result in a dramatic loss of activity due to the disproportionate energetic contribution of that specific interaction.

Quantifying Activity Cliffs

Several quantitative approaches have been developed to identify and characterize activity cliffs in molecular datasets:

Structure-Activity Landscape Index: SALI quantifies the roughness of the activity landscape and is calculated as SALIᵢⱼ = |Pᵢ - Pⱼ| / (1 - simᵢⱼ), where P represents potency and sim represents similarity [9]. High SALI values indicate the presence of activity cliffs.

Extended SALI (eSALI): This approach uses extended similarity (eSIM) frameworks to quantify activity landscape roughness with O(N) scaling, making it computationally efficient for large datasets [9]. The formula is eSALIᵢ = [1/(N(1-se))] × Σ|Pᵢ - P̄|, where se is the extended similarity of the set.

Activity Cliff Index (ACI): ACARL introduces a quantitative ACI to detect SAR discontinuities systematically [8]. Separately, learning-based approaches such as ACtriplet combine a triplet loss borrowed from face recognition with pre-training strategies to build specialized prediction models [7].

Table 1: Key Metrics for Quantifying Activity Cliffs

| Metric | Formula | Application | Advantages |
|---|---|---|---|
| SALI | SALIᵢⱼ = \|Pᵢ - Pⱼ\| / (1 - simᵢⱼ) | Pairwise cliff identification | Intuitive interpretation of cliff steepness |
| eSALI | eSALIᵢ = [1/(N(1-se))] × Σ\|Pᵢ - P̄\| | Dataset-level landscape roughness | Linear scaling with dataset size |
| ACI | Combines structural similarity with activity differences | Systematic cliff detection in generative AI | Enables integration with machine learning |
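The SALI and eSALI formulas above translate almost directly into code. In this sketch the extended similarity `se` is taken as a given input rather than computed, the potency values are hypothetical, and the treatment of identical structures (similarity of 1, where SALI is undefined) is an assumption of the example.

```python
def sali(p_i: float, p_j: float, sim_ij: float) -> float:
    """SALI_ij = |P_i - P_j| / (1 - sim_ij)."""
    if sim_ij >= 1.0:
        # Assumption: identical structures give an infinite index when
        # their potencies differ, and 0 otherwise.
        return float("inf") if p_i != p_j else 0.0
    return abs(p_i - p_j) / (1.0 - sim_ij)


def esali(potencies, extended_similarity: float) -> float:
    """eSALI = [1 / (N (1 - se))] * sum(|P_i - mean(P)|)."""
    n = len(potencies)
    p_mean = sum(potencies) / n
    total_deviation = sum(abs(p - p_mean) for p in potencies)
    return total_deviation / (n * (1.0 - extended_similarity))


# Hypothetical values: a similar pair three log units apart in potency.
pair_score = sali(9.0, 6.0, 0.9)
# Hypothetical set of four potencies with an extended similarity of 0.5.
set_score = esali([5.0, 6.0, 7.0, 8.0], 0.5)
print(f"SALI:  {pair_score:.1f}")
print(f"eSALI: {set_score:.1f}")
```

The eSALI function makes a single pass over the potencies, which reflects the linear scaling with dataset size noted in the table, provided the extended similarity itself is available.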

Experimental Evidence: QSAR Model Performance on Activity Cliffs

Systematic Evaluation of QSAR Models

Recent studies have systematically evaluated the performance of various QSAR models in predicting activity cliffs. A comprehensive 2023 study constructed nine distinct QSAR models by combining three molecular representation methods—extended-connectivity fingerprints (ECFPs), physicochemical-descriptor vectors (PDVs), and graph isomorphism networks (GINs)—with three regression techniques: random forests (RFs), k-nearest neighbors (kNNs), and multilayer perceptrons (MLPs) [4]. These models were evaluated on three case studies: dopamine receptor D2, factor Xa, and SARS-CoV-2 main protease.

The results strongly support the hypothesis that QSAR models frequently fail to predict activity cliffs. The study observed low AC-sensitivity across evaluated models when the activities of both compounds were unknown. However, a substantial increase in AC-sensitivity occurred when the actual activity of one compound in the pair was provided [4]. This finding has significant implications for practical drug discovery, suggesting that knowledge of even one compound's activity in a pair can dramatically improve cliff prediction.
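To make the two evaluation settings concrete, the sketch below computes AC-sensitivity (the fraction of true cliffs that are recovered) from the outputs of any regression model. The activity values, the pair list, and the threshold of 2.0 log units are hypothetical and are not taken from the cited study.

```python
def ac_sensitivity(pairs, true_act, pred_act, threshold=2.0, one_known=False):
    """Fraction of true cliffs among `pairs` that are predicted as cliffs.

    With one_known=True, the measured activity of the first compound in each
    pair replaces its prediction.
    """
    true_positives = false_negatives = 0
    for a, b in pairs:
        if abs(true_act[a] - true_act[b]) < threshold:
            continue  # not a true cliff, irrelevant for sensitivity
        act_a = true_act[a] if one_known else pred_act[a]
        if abs(act_a - pred_act[b]) >= threshold:
            true_positives += 1
        else:
            false_negatives += 1
    total = true_positives + false_negatives
    return true_positives / total if total else float("nan")


# Hypothetical measured and predicted pKi values for two similar pairs.
true_act = {"a": 9.0, "b": 6.5, "c": 8.0, "d": 5.5}
pred_act = {"a": 8.0, "b": 7.0, "c": 7.6, "d": 6.2}
pairs = [("a", "b"), ("c", "d")]

both_unknown = ac_sensitivity(pairs, true_act, pred_act)
one_known = ac_sensitivity(pairs, true_act, pred_act, one_known=True)
print(f"both unknown: {both_unknown:.2f}, one known: {one_known:.2f}")
```

The toy predictions are pulled toward each other, as a smooth model would tend to do for similar molecules, so no cliff is recovered until one measured activity is supplied.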

Performance Across Model Architectures

The comparative performance of different QSAR modeling approaches reveals important patterns:

Graph isomorphism features were found to be competitive with or superior to classical molecular representations for AC-classification, suggesting their potential as baseline AC-prediction models or simple compound-optimization tools [4].

For general QSAR prediction, however, extended-connectivity fingerprints consistently delivered the best performance among the tested input representations [4].

Notably, descriptor-based QSAR methods were reported to even outperform more complex deep learning models on "cliffy" compounds associated with activity cliffs [4], countering earlier hopes that the approximation power of deep neural networks might ameliorate the AC problem.

Table 2: QSAR Model Performance Comparison on Activity Cliff Prediction

| Model Architecture | Molecular Representation | AC Prediction Sensitivity | General QSAR Performance |
|---|---|---|---|
| Random Forest | ECFP | Low to moderate | Consistently strong |
| k-Nearest Neighbors | Physicochemical descriptors | Low | Variable |
| Multilayer Perceptron | Graph isomorphism networks | Moderate | Competitive |
| Graph Neural Network | Learned graph representations | Moderate to high | Dataset-dependent |

Extended Similarity Approaches for Data Splitting

The presence of activity cliffs significantly impacts model performance based on data splitting strategies. Recent research has proposed several extended similarity and extended SALI methods to study the implications of ACs distribution between training and test sets [9]. These approaches include:

- Medoid-based splitting: Ranking molecules by complementary similarity from "medoids" to "outliers"

- Uniform splitting: Dividing molecules into batches based on complementary similarity

- Diverse selection: Systematically adding molecules that minimize extended similarity

- Anti-eSALI selection: Minimizing eSALI to reduce activity cliff presence

Experiments demonstrated that non-uniform ACs and chemical space distribution tend to lead to worse models than uniform methods, though ML modeling on AC-rich sets needs to be analyzed case-by-case [9]. Overall, random splitting often performed better than more complex splitting alternatives, highlighting the challenge of systematically addressing activity cliffs through data partitioning alone.

Methodologies for Activity Cliff Prediction

Structure-Based Prediction Approaches

Structure-based methods offer a promising avenue for activity cliff prediction by leveraging 3D structural information of protein-ligand complexes. Docking and virtual screening approaches have demonstrated significant accuracy in predicting activity cliffs, particularly when using ensemble- and template-docking methodologies [2]. These advanced structure-based methods can rationalize 3D activity cliff formation by accounting for:

- Binding mode variations despite high structural similarity

- Critical interaction networks that disproportionately influence binding affinity

- Protein flexibility and conformational changes induced by ligand modifications

One comprehensive study utilized a diverse database of cliff-forming co-crystals encompassing 146 3DACs across 9 pharmaceutical targets, including CDK2, thrombin, HSP90, and factor Xa [2]. By progressively moving from ideal scenarios toward realistic drug discovery situations, the research established that despite well-known limitations of empirical scoring schemes, activity cliffs can be accurately predicted by advanced structure-based methods.

Deep Learning Architectures for AC Prediction

Recent advances in deep learning have produced specialized architectures for activity cliff prediction:

ACtriplet: This model integrates triplet loss from face recognition with pre-training strategies, significantly improving deep learning performance across 30 datasets [7]. The approach demonstrates how transfer learning and specialized loss functions can enhance AC prediction.

ACARL Framework: The Activity Cliff-Aware Reinforcement Learning framework introduces a novel activity cliff index to identify and amplify activity cliff compounds, incorporating them into the reinforcement learning process through a tailored contrastive loss [8]. This method focuses model optimization on high-impact SAR regions.

AMPCliff: Extending activity cliff analysis beyond small molecules, this framework provides a quantitative definition and benchmarking for activity cliffs in antimicrobial peptides, employing pre-trained protein language models like ESM2 that demonstrate superior performance [10].
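For readers unfamiliar with the loss that ACtriplet borrows from face recognition, the generic triplet margin loss is sketched below. This is the textbook form, not the specific formulation used in ACtriplet or the contrastive loss of ACARL; the 2D embeddings, the margin, and the reading of "positive" as a compound of similar potency and "negative" as a cliff partner are assumptions for illustration.

```python
import math


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Generic triplet margin loss: max(0, d(a, p) - d(a, n) + margin)."""
    d_pos = math.dist(anchor, positive)  # distance to the similar-potency example
    d_neg = math.dist(anchor, negative)  # distance to the cliff partner
    return max(0.0, d_pos - d_neg + margin)


# Hypothetical 2D embeddings.
anchor = (0.0, 0.0)
positive = (0.3, 0.4)

# Cliff partner embedded close to the anchor: the loss is positive,
# so training would push it further away.
too_close = triplet_loss(anchor, positive, (0.6, 0.8))

# Cliff partner already far away: the margin is satisfied and the loss is zero.
well_separated = triplet_loss(anchor, positive, (3.0, 4.0))

print(f"too close: {too_close:.2f}, well separated: {well_separated:.2f}")
```

The design intent is that structurally similar molecules with very different potency end up separated in the learned embedding, even though their fingerprints are close.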

Advanced Experimental Protocols

Protocol 1: Systematic QSAR Model Construction for AC Evaluation

Objective: To evaluate the AC-prediction power of modern QSAR methods and its quantitative relationship to general QSAR-prediction performance [4].

Methodology:

- Data Curation: Collect binding affinity data for dopamine receptor D2, factor Xa, and SARS-CoV-2 main protease from the ChEMBL database and the COVID Moonshot project

- Data Standardization: Process SMILES strings using the ChEMBL structure pipeline for standardization and desalting

- Molecular Representations:

  - Generate extended-connectivity fingerprints (ECFPs) with specified parameters

  - Compute physicochemical-descriptor vectors (PDVs) incorporating key molecular properties

  - Implement graph isomorphism networks (GINs) for learned graph representations

- Model Training:

  - Combine each representation with three regression techniques: random forests, k-nearest neighbors, and multilayer perceptrons

  - Implement stratified splitting to ensure representative AC distribution

  - Optimize hyperparameters using cross-validation

- Evaluation:

  - Assess general QSAR performance using standard regression metrics

  - Evaluate AC-classification sensitivity using pairwise compound analysis

  - Compare performance with and without known activity for one compound in pairs

Protocol 2: Extended Similarity Framework for Data Splitting

Objective: To explore the implications of ACs distribution between training and test sets on QSAR model errors using extended similarity measures [9].

Methodology:

- Dataset Preparation: Curate datasets for 30 molecular targets with associated Ki or EC50 values

- Fingerprint Generation: Compute MACCS and ECFP4 binary fingerprints using RDKit

- Extended Similarity Calculation:

  - Perform column-wise summation of the N fingerprints: Σ = [σ₁, σ₂, ..., σ_M]

  - Calculate the indicator for each σₖ: Δσₖ = |2σₖ - N|

  - Classify columns as similarity or dissimilarity counters based on threshold γ

  - Apply weighting functions for partial similarity (fs) and dissimilarity (fd)

- Data Splitting Methods:

  - Implement random, medoid, uniform, diverse, and Kennard-Stone selection

  - Apply eSALI-based splitting to maximize or minimize activity cliff presence

  - Compare traditional clustering approaches with extended similarity methods

- Model Evaluation:

  - Train machine learning models on different splits

  - Assess performance on AC-rich versus AC-sparse test sets

  - Analyze error distribution relative to activity landscape roughness
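The extended similarity calculation in this protocol can be sketched as follows. The protocol only names the steps, so several details here are assumptions: the indicator is taken as |2σₖ - N|, the weights are fs = Δ/N and fd = 1 - (Δ - N mod 2)/N, the default threshold γ is N mod 2, and the final index is a Jaccard-Tanimoto-like ratio that ignores columns where the fingerprints agree on 0. The fingerprints are toy bit lists.

```python
def extended_tanimoto(fingerprints, gamma=None):
    """Jaccard-Tanimoto-like extended similarity of N binary fingerprints."""
    n = len(fingerprints)
    n_bits = len(fingerprints[0])
    if gamma is None:
        gamma = n % 2  # assumed minimal coincidence threshold

    # Column-wise sums: how many fingerprints have each bit switched on.
    column_sums = [sum(fp[k] for fp in fingerprints) for k in range(n_bits)]

    similarity_on = 0.0  # weighted count of columns that mostly agree on 1
    dissimilarity = 0.0  # weighted count of columns without clear agreement
    for sigma in column_sums:
        delta = abs(2 * sigma - n)
        if 2 * sigma - n > gamma:
            similarity_on += delta / n                # f_s (assumed form)
        elif n - 2 * sigma > gamma:
            continue                                  # agreement on 0: ignored
        else:
            dissimilarity += 1 - (delta - n % 2) / n  # f_d (assumed form)

    denominator = similarity_on + dissimilarity
    return similarity_on / denominator if denominator else 0.0


# Hypothetical 4-bit fingerprints for four molecules.
fps = [[1, 1, 0, 0], [1, 1, 0, 1], [1, 0, 0, 0], [1, 1, 0, 1]]
set_similarity = extended_tanimoto(fps)
print(f"extended similarity of the set: {set_similarity:.2f}")
```

One pass over the column sums scores the whole set at once, which is where the O(N) scaling of the extended framework comes from. For two fingerprints this sketch reduces to the ordinary Tanimoto coefficient.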

Research Reagent Solutions

Table 3: Essential Computational Tools for Activity Cliff Research

| Research Tool | Type | Function | Application in AC Research |
|---|---|---|---|
| RDKit | Cheminformatics library | Molecular fingerprint generation | Compute ECFP4 and MACCS fingerprints for similarity assessment [9] |
| ChEMBL Database | Chemical database | Bioactivity data source | Extract curated binding affinity data for QSAR modeling [4] [8] |
| GRAMPA Dataset | AMP-specific database | Antimicrobial peptide activities | Benchmark AC phenomena in peptide space [10] |
| ESM2 | Protein language model | Sequence representation learning | Predict activity cliffs in antimicrobial peptides [10] |
| ICM | Docking software | Structure-based prediction | Generate binding poses and scores for 3DAC analysis [2] |
| ACtriplet | Deep learning model | AC prediction with triplet loss | Improve sensitivity to activity cliffs [7] |
| ACARL | Reinforcement learning framework | De novo molecular design | Generate molecules considering AC constraints [8] |

Implications for Materials Generative AI Research

The study of activity cliffs provides crucial insights for materials generative AI research, particularly in understanding and modeling complex property-structure relationships. The violation of the similarity principle observed in molecular systems likely extends to materials science, where minor structural modifications can similarly lead to discontinuous changes in functional properties [8]. Generative AI models for materials design must account for these potential discontinuities to reliably propose novel structures with targeted properties.

The ACARL framework demonstrates how domain knowledge about activity cliffs can be explicitly incorporated into AI-driven design pipelines through specialized reward functions and sampling strategies [8]. This approach represents a paradigm shift from treating activity cliffs as statistical outliers to leveraging them as informative examples that highlight critical regions in the property-structure landscape. For materials generative AI, analogous "property cliff" awareness could significantly enhance the efficiency and success rate of inverse design algorithms.

Future directions should focus on developing cliff-aware generative models that explicitly model discontinuous regions of the property-structure landscape, improved representation learning that captures the structural features responsible for property cliffs, and cross-domain transfer of activity cliff methodologies from drug discovery to materials informatics [7] [8] [10]. By addressing the fundamental challenge posed by activity cliffs to similarity-based prediction, both fields can advance toward more accurate and reliable computational design frameworks.

Activity cliffs (ACs) represent a critical phenomenon in medicinal chemistry and drug discovery where small structural modifications to a molecule lead to significant changes in its biological potency. The ability to quantitatively identify and analyze these cliffs is paramount for understanding structure-activity relationships (SARs) and for guiding the optimization of lead compounds. This technical guide provides an in-depth examination of the Activity Cliff Index (ACI), a recently developed metric for quantifying activity cliffs, and the Tanimoto similarity coefficient, a foundational cheminformatics measure upon which many AC identification methods are built. Framed within the context of materials generative AI research, this review explores how these quantitative descriptors enable more sophisticated AI-driven molecular design by explicitly modeling critical SAR discontinuities. We present detailed methodologies, comparative analyses of similarity metrics, and visualization frameworks to equip researchers with practical tools for implementing activity cliff awareness in computational drug discovery pipelines.

The concept of molecular similarity serves as a cornerstone in cheminformatics, underpinning various applications from virtual screening to property prediction [11]. At its core lies the similar property principle, which posits that structurally similar molecules are likely to exhibit similar biological activities [11]. While this principle generally holds true, activity cliffs (ACs) represent important exceptions that prove critically informative for understanding structure-activity relationships (SARs).

Activity cliffs are formally defined as pairs of structurally similar compounds that exhibit large differences in biological potency against the same target [12] [13]. From a medicinal chemistry perspective, these cliffs reveal specific structural modifications that profoundly impact biological activity, thereby serving as key sources of SAR information [12]. The accurate identification and interpretation of ACs enable researchers to pinpoint molecular regions and features most critical for binding affinity and functional efficacy.

The reliable detection of activity cliffs requires the simultaneous quantification of two key aspects: molecular similarity and potency difference. Molecular similarity can be assessed using various approaches, including fingerprint-based Tanimoto coefficients [12], matched molecular pairs (MMPs) [8] [12], or shared molecular scaffolds [13]. Potency differences are typically measured using bioactivity values such as inhibitory constants (Ki) or their logarithmic transformations (pKi = -log10 Ki) [8]. A commonly applied threshold defines significant potency differences as changes of at least two orders of magnitude (100-fold) [12], though target set-dependent thresholds have also been proposed to account for variations in potency value distributions across different target classes [12].
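The link between Ki values, pKi, and the 100-fold criterion is simple arithmetic, shown here as a short sketch. It assumes Ki is reported in nanomolar, so that pKi = 9 - log10(Ki in nM); the two Ki values are hypothetical.

```python
import math


def pki_from_ki_nm(ki_nm: float) -> float:
    """pKi = -log10(Ki in mol/L) = 9 - log10(Ki in nM)."""
    return 9.0 - math.log10(ki_nm)


def fold_difference(pki_a: float, pki_b: float) -> float:
    """Potency ratio implied by a pKi difference (one log unit = 10-fold)."""
    return 10 ** abs(pki_a - pki_b)


# Hypothetical pair: Ki of 2 nM versus 500 nM.
pki_potent = pki_from_ki_nm(2.0)
pki_weak = pki_from_ki_nm(500.0)
delta_pki = abs(pki_potent - pki_weak)
fold = fold_difference(pki_potent, pki_weak)

print(f"ΔpKi = {delta_pki:.2f} ({fold:.0f}-fold)")
print("meets the 100-fold criterion:", delta_pki >= 2.0)
```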

Within generative AI research, activity cliffs present both a challenge and an opportunity. Traditional machine learning models, including quantitative structure-activity relationship (QSAR) models, often struggle to accurately predict the properties of activity cliff compounds because these models typically assume smoothness in the activity landscape [8]. However, the explicit incorporation of activity cliff awareness into AI frameworks—such as through the recently proposed Activity Cliff-Aware Reinforcement Learning (ACARL) approach—enables more sophisticated molecular generation that targets high-impact regions of the chemical space [8].

The Tanimoto Similarity Coefficient

Theoretical Foundation

The Tanimoto coefficient (also known as the Jaccard-Tanimoto coefficient) stands as one of the most widely adopted similarity measures in cheminformatics [14] [15] [16]. Originally introduced by T. T. Tanimoto in 1957 while working at IBM [15], this metric quantifies the similarity between two sets or binary vectors by comparing their intersection to their union.

For two binary vectors A and B representing molecular fingerprints, the Tanimoto coefficient T is defined as:

T(A,B) = |A ∩ B| / (|A| + |B| - |A ∩ B|) [15] [16] [17]

where |A ∩ B| represents the number of bits set to 1 in both fingerprints (intersection), while |A| and |B| represent the total number of bits set to 1 in each fingerprint, respectively [15]. The resulting value ranges from 0 (no similarity) to 1 (identical fingerprints) [15] [17].

The corresponding Tanimoto distance, which quantifies dissimilarity, is defined as:

D(A,B) = 1 - T(A,B) [15]

This distance metric also ranges from 0 (identical) to 1 (completely different) [15].
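As a minimal sketch of the two definitions above, the following Python snippet computes T(A,B) and D(A,B) for fingerprints represented as sets of on-bit indices. The function names, the toy fingerprints, and the convention of returning 1.0 for two empty fingerprints are illustrative assumptions, not part of any cited toolkit.

```python
def tanimoto(fp_a, fp_b):
    """Tanimoto coefficient for two fingerprints given as sets of on-bit indices."""
    shared = len(fp_a & fp_b)                 # |A ∩ B|
    union = len(fp_a) + len(fp_b) - shared    # |A| + |B| - |A ∩ B|
    # Assumption: two empty fingerprints are treated as identical (ratio is undefined).
    return shared / union if union else 1.0


def tanimoto_distance(fp_a, fp_b):
    """Tanimoto distance D = 1 - T."""
    return 1.0 - tanimoto(fp_a, fp_b)


# Hypothetical toy fingerprints: 3 shared bits, 5 and 4 bits set in total.
fp_a = {1, 4, 7, 9, 12}
fp_b = {1, 4, 7, 10}
print(tanimoto(fp_a, fp_b))           # 3 / (5 + 4 - 3) = 0.5
print(tanimoto_distance(fp_a, fp_b))  # 0.5
```

In practice the on-bit sets would come from a fingerprinting toolkit; the set representation is used here only to keep the arithmetic visible.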

Comparative Analysis of Similarity Metrics

While the Tanimoto coefficient remains the most popular choice for molecular similarity comparisons, several other metrics offer alternative approaches with distinct mathematical properties and applications.

Table 1: Key Similarity and Distance Metrics for Molecular Fingerprints

| Metric Name | Formula for Binary Variables | Type | Range |

|---|---|---|---|

| Tanimoto (Jaccard) coefficient | T = c/(a+b+c) [17] | Similarity | 0 to 1 |

| Dice coefficient | D = 2c/(a+b+2c) [17] | Similarity | 0 to 1 |

| Cosine coefficient | C = c/√((a+c)(b+c)) [17] | Similarity | 0 to 1 |

| Soergel distance | S = (a+b)/(a+b+c) [17] | Distance | 0 to 1 |

| Hamming/Manhattan distance | H = a+b [17] | Distance | 0 to N |

| Euclidean distance | E = √(a+b) [17] | Distance | 0 to √N |

In the formulas above, the variables represent: c = number of common features (intersection), a = number of features unique to molecule A, b = number of features unique to molecule B, and N = length of the molecular fingerprints [17].

Notably, the Soergel distance is mathematically related to the Tanimoto coefficient as its complement (S = 1 - T) [17]. Similarly, the Dice coefficient can be derived from the Tversky index by setting both weighting parameters α and β to 0.5 [18].
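The relationships stated above can be checked numerically. The sketch below implements the Table 1 formulas from the counts a, b, and c, using |A| = a + c and |B| = b + c for the cosine coefficient, and a Tversky index of the form c/(αa + βb + c). The helper names and toy fingerprints are hypothetical, and the functions assume the two fingerprints are not both empty.

```python
import math


def counts(fp_a, fp_b):
    """Return (a, b, c): bits unique to A, bits unique to B, and shared bits."""
    return len(fp_a - fp_b), len(fp_b - fp_a), len(fp_a & fp_b)


def tanimoto(a, b, c):
    return c / (a + b + c)


def dice(a, b, c):
    return 2 * c / (a + b + 2 * c)


def cosine(a, b, c):
    # |A| = a + c and |B| = b + c are the total on-bit counts.
    return c / math.sqrt((a + c) * (b + c))


def soergel(a, b, c):
    return (a + b) / (a + b + c)


def tversky(a, b, c, alpha, beta):
    return c / (alpha * a + beta * b + c)


a, b, c = counts({1, 4, 7, 9, 12}, {1, 4, 7, 10})  # a=2, b=1, c=3

# Soergel distance is the complement of the Tanimoto coefficient.
print(math.isclose(soergel(a, b, c), 1 - tanimoto(a, b, c)))
# Tversky with alpha = beta = 0.5 reproduces Dice; alpha = beta = 1 reproduces Tanimoto.
print(math.isclose(tversky(a, b, c, 0.5, 0.5), dice(a, b, c)))
print(math.isclose(tversky(a, b, c, 1.0, 1.0), tanimoto(a, b, c)))
```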

Performance Characteristics and Practical Considerations

Comparative studies have evaluated the performance of various similarity metrics in cheminformatics applications. A large-scale analysis using sum of ranking differences (SRD) and ANOVA found that the Tanimoto index, Dice index, Cosine coefficient, and Soergel distance performed best for similarity calculations, producing rankings closest to the composite average of multiple metrics [14]. The study further recommended against using Euclidean and Manhattan distances as standalone similarity measures, though their variability from other metrics might be advantageous for data fusion approaches [14].

A common practice in chemical similarity searching involves using a Tanimoto threshold of 0.85 to define similar compounds, based on early studies suggesting this value indicates a high probability of shared activity [11] [17]. However, this "0.85 rule" has been questioned, as different fingerprint types produce different similarity score distributions, meaning that the same threshold value may correspond to different probabilities of activity sharing depending on the representation used [11] [17]. Additionally, the Tanimoto coefficient has demonstrated a tendency to favor smaller compounds in dissimilarity selection [14].

The Activity Cliff Index (ACI) Framework

Theoretical Basis and Calculation

The Activity Cliff Index (ACI) represents a quantitative framework specifically designed to detect and quantify activity cliffs in molecular datasets [8]. This metric simultaneously incorporates both structural similarity and potency difference measurements to identify significant SAR discontinuities.

The ACI framework operates on pairs of compounds, calculating the intensity of activity cliffs by comparing their structural similarity with their difference in biological activity [8]. The core innovation of ACI lies in its ability to systematically identify compounds that exhibit activity cliff behavior, enabling their explicit incorporation into machine learning pipelines [8].

The ACI can be understood conceptually as a function that grows as the potency difference between two compounds increases and as their structural similarity increases (that is, as their structural distance shrinks). While the exact mathematical definition may vary across implementations, the fundamental principle is to normalize the potency difference by a measure of structural distance, typically derived from Tanimoto similarity or matched molecular pairs (MMPs) [8].

Table 2: Molecular Descriptors for Activity Cliff Detection

| Descriptor Type | Description | Application in AC Identification |

|---|---|---|

| Fingerprint-based Tanimoto similarity | Calculated using structural keys or hashed fingerprints [11] | General-purpose similarity measure for diverse compounds |

| Matched Molecular Pairs (MMPs) | Pairs differing only at a single site [8] [12] | Chemically interpretable, reaction-based similarity |

| Maximum Common Substructure (MCS) | Largest substructure shared between two molecules [18] | Sensitive measure, especially for size-different compounds |

| Multi-site analogs | Compounds with different substitutions at multiple sites [12] | Identification of complex structure-activity relationships |

Advanced Activity Cliff Categorization

Recent research has expanded the traditional activity cliff concept to include more specialized categories that capture different aspects of SAR discontinuities:

- Single-site activity cliffs (ssACs): Traditional activity cliffs formed by pairs of analogs with modifications at a single site, typically identified as MMP-cliffs [12]. These are the most frequently encountered AC type.

- Dual-site activity cliffs (dsACs): ACs formed by analog pairs with different substitutions at two sites, representing over 90% of multi-site ACs [12].

- Multi-site activity cliffs (msACs): A broader category encompassing ACs with modifications at multiple sites, including dsACs as the predominant subtype [12].

- Structural isomer-based ACs: ACs formed by structural isomers, combining different similarity criteria for enhanced SAR interpretation [13].

The analysis of multi-site ACs has revealed different patterns of substitution effects, including cases where single substitutions dominate the potency difference (redundant information), as well as instances of additive, synergistic, and compensatory effects when both substitutions contribute significantly to the observed activity cliff [12].

Experimental Protocols and Methodologies

Workflow for Activity Cliff Identification

The reliable identification of activity cliffs requires a systematic approach combining computational chemistry, data curation, and statistical analysis. The following protocol outlines the key steps for comprehensive AC analysis:

Step 1: Data Curation and Preparation

- Extract bioactive compounds from reliable databases (e.g., ChEMBL) with exact potency measurements (e.g., Ki values) [12].

- Apply data filtering criteria: include only compounds with direct interactions (target relationship type: "D") at high confidence levels (assay confidence score: 9) [12].

- Convert potency values to logarithmic scale (pKi = -log10 Ki) for normalized comparison [8].

- For target set-dependent thresholds, analyze potency value distributions within each target class [12].

Step 2: Molecular Representation and Similarity Calculation

- Generate molecular fingerprints using appropriate descriptors (e.g., ECFP, MACCS keys, or atom-pair descriptors) [19] [18].

- Calculate pairwise similarity matrices using Tanimoto coefficient or alternative metrics [17] [18].

- Alternatively, generate matched molecular pairs (MMPs) using retrosynthetic fragmentation rules for chemically interpretable similarity relationships [12].

Step 3: Activity Cliff Identification

- Apply similarity threshold (e.g., Tc ≥ 0.85 for fingerprint-based methods or MMP criteria for substructure-based methods) [11] [12].

- Apply potency difference threshold (e.g., ≥100-fold or target set-dependent thresholds) [12].

- Calculate Activity Cliff Index for qualifying compound pairs to quantify cliff intensity [8].

- For multi-site ACs, implement hierarchical analog data structures to analyze individual substitution contributions [12].

Step 4: Validation and Analysis

- Visualize activity cliffs in chemical space using dimensionality reduction techniques (t-SNE) [19].

- Perform statistical analysis of AC distributions across target classes.

- Interpret ACs in structural context to identify SAR determinants.

Figure 1: Activity Cliff Identification Workflow. This diagram illustrates the systematic process for identifying and analyzing activity cliffs, from data preparation to AI model integration.
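Steps 1 to 3 of the protocol can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: fingerprints are given as precomputed sets of on-bit indices, potencies are Ki values in nM, and the compound records, the function names, and the default thresholds (Tc ≥ 0.85, ΔpKi ≥ 2, i.e. 100-fold) are hypothetical choices taken from the example values in the text.

```python
import math
from itertools import combinations


def pki_from_ki_nm(ki_nm):
    """pKi = -log10(Ki in mol/L); with Ki in nM this equals 9 - log10(Ki_nM)."""
    return 9.0 - math.log10(ki_nm)


def tanimoto(fp_a, fp_b):
    shared = len(fp_a & fp_b)
    union = len(fp_a) + len(fp_b) - shared
    return shared / union if union else 1.0


def find_activity_cliffs(compounds, sim_threshold=0.85, delta_pki_threshold=2.0):
    """Return (id1, id2, similarity, delta_pKi) for every pair passing both thresholds."""
    cliffs = []
    for (id1, fp1, ki1), (id2, fp2, ki2) in combinations(compounds, 2):
        sim = tanimoto(fp1, fp2)
        delta = abs(pki_from_ki_nm(ki1) - pki_from_ki_nm(ki2))
        if sim >= sim_threshold and delta >= delta_pki_threshold:
            cliffs.append((id1, id2, sim, delta))
    return cliffs


# Hypothetical records: (identifier, on-bit set, Ki in nM).
compounds = [
    ("cpd_A", set(range(20)), 1.0),
    ("cpd_B", set(range(19)) | {25}, 500.0),
    ("cpd_C", set(range(20)) | {30}, 2.0),
    ("cpd_D", set(range(40, 60)), 10000.0),
]

for id1, id2, sim, delta in find_activity_cliffs(compounds):
    print(f"{id1} / {id2}: Tc = {sim:.2f}, delta pKi = {delta:.2f}")
```

The exhaustive pairwise loop is quadratic in the number of compounds, which is acceptable for per-target sets of a few thousand molecules but would need indexing or clustering for larger collections.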

Research Reagents and Computational Tools

Table 3: Essential Research Reagents and Computational Tools for Activity Cliff Studies

| Tool/Resource | Type | Functionality | Access |

|---|---|---|---|

| ChEMBL database | Chemical database | Source of bioactive compounds with curated potency data [8] [12] | Public |

| RDKit | Cheminformatics toolkit | Fingerprint generation, similarity calculation, MMP identification [19] | Open source |

| jaccard R package | Statistical package | Significance testing for Jaccard/Tanimoto similarity coefficients [16] | Open source |

| ChemMine Tools | Web platform | Compound clustering, similarity comparisons, property predictions [18] | Public |

| ACARL framework | AI methodology | Reinforcement learning with explicit activity cliff modeling [8] | Research code |

| Mcule database | Compound supplier | Source of purchasable compounds for virtual screening [14] [19] | Commercial |

Integration with Generative AI Research

The explicit modeling of activity cliffs represents a significant advancement for generative AI in drug discovery. Traditional molecular generation models often treat activity cliff compounds as statistical outliers rather than informative examples, leading to smoothed output that misses critical SAR discontinuities [8]. The integration of ACI into AI frameworks addresses this limitation through several innovative approaches:

Activity Cliff-Aware Reinforcement Learning (ACARL): This framework incorporates activity cliffs directly into the molecular generation process through two key components [8]:

- Activity Cliff Index (ACI): Quantitatively identifies activity cliff compounds within datasets.

- Contrastive Loss Function: Prioritizes learning from activity cliff compounds during reinforcement learning, shifting model focus toward high-impact SAR regions.

Extended Similarity Indices for Set-Based Comparisons: Recent developments in n-ary similarity metrics enable the simultaneous comparison of multiple molecules, providing enhanced measures of set compactness and diversity [19]. These extended indices scale more efficiently (O(N) vs. O(N²) for pairwise comparisons) and offer superior performance in diversity selection algorithms [19].

Challenges in Predictive Modeling: Quantitative structure-activity relationship (QSAR) models and other machine learning approaches face significant challenges with activity cliff compounds. Studies have demonstrated that prediction performance substantially deteriorates for these molecules across descriptor-based, graph-based, and sequence-based methods [8]. Neither increasing training set size nor model complexity reliably improves accuracy for activity cliff compounds, highlighting the need for specialized approaches like ACARL [8].

Figure 2: ACARL Framework Architecture. This diagram shows the integration of Activity Cliff Index calculation with reinforcement learning for improved molecular generation.

The quantitative description of activity cliffs through the Activity Cliff Index and Tanimoto similarity represents a critical advancement in cheminformatics and AI-driven drug discovery. These metrics provide researchers with robust tools to identify and analyze significant SAR discontinuities, moving beyond traditional approaches that often smooth over these informative regions of chemical space. The integration of activity cliff awareness into generative AI models, as demonstrated by the ACARL framework, enables more sophisticated molecular design that explicitly targets high-impact regions of the activity landscape. As these methodologies continue to evolve, they promise to enhance the efficiency and effectiveness of drug discovery pipelines, ultimately accelerating the development of novel therapeutic agents with optimized potency and selectivity profiles.

The integration of artificial intelligence (AI) into molecular science promises to revolutionize drug discovery and materials design. However, a significant gap persists between theoretical model performance and real-world applicability. A core challenge undermining AI reliability is the phenomenon of activity cliffs (ACs)—instances where minute structural modifications to a molecule lead to dramatic, non-linear changes in its biological activity or properties [8] [20]. For AI models that typically learn smooth, continuous structure-function relationships, these discontinuities represent a major source of prediction error and can lead to flawed molecular design [6] [7].

This whitepaper examines the profound consequences of activity cliffs on molecular property prediction and generative AI. We detail the technical hurdles they introduce, survey cutting-edge methodologies designed to address them, and provide a rigorous experimental framework for evaluation. Furthermore, we situate these technical challenges within the pressing business reality of the pharmaceutical industry's impending "patent cliff," where the urgency for efficient, predictive AI has never been greater [21] [22]. The ability to navigate activity cliffs is not merely an academic exercise; it is a critical determinant of success in modern generative materials research.

The Core Challenge: Activity Cliffs and AI

Defining the Activity Cliff Phenomenon

An activity cliff is formally defined as a pair of structurally similar compounds that exhibit a large difference in potency or binding affinity for a given target [7] [20]. Quantitatively, this involves two key aspects:

- Molecular Similarity: Typically calculated using the Tanimoto coefficient based on fingerprints like Extended Connectivity Fingerprints (ECFP) [20], or through the identification of Matched Molecular Pairs (MMPs), where two compounds differ only at a single site [8].

- Potency Difference: Measured by a significant change (e.g., a 10-fold or greater difference) in biological activity, often represented by the negative logarithm of the inhibitory constant (pKi = -log₁₀ Ki) or of the half-maximal inhibitory concentration (IC₅₀) [8] [20].

Table 1: Quantitative Definition of an Activity Cliff

| Metric | Calculation Method | Threshold for Activity Cliff |

|---|---|---|

| Structural Similarity | Tanimoto similarity of ECFP4/ECFP6 fingerprints | ≥ 0.9 (90% similarity) [20] |

| Potency Difference | ΔpKi or ΔpIC₅₀ | ≥ 1.0 (10-fold difference) [20] |
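The link between the logarithmic threshold and the fold change can be made explicit with a short derivation. The subscripts 1 and 2 for the two compounds of a pair are notation introduced here for illustration.

```latex
\[
\Delta \mathrm{p}K_i
  = \left| \mathrm{p}K_{i,1} - \mathrm{p}K_{i,2} \right|
  = \left| \log_{10} \frac{K_{i,2}}{K_{i,1}} \right|,
\qquad
\Delta \mathrm{p}K_i \ge 1
  \iff
  \frac{\max(K_{i,1}, K_{i,2})}{\min(K_{i,1}, K_{i,2})} \ge 10 .
\]
```

For example, a pair with Ki values of 5 nM and 80 nM differs 16-fold, giving ΔpKi = log₁₀ 16 ≈ 1.2, which meets the ≥ 1.0 threshold; a 100-fold criterion corresponds to ΔpKi ≥ 2.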

Impact on AI-Driven Drug Discovery

Activity cliffs pose a fundamental problem for AI/ML models because these models often rely on the assumption that similar inputs yield similar outputs. The presence of ACs violates this principle, leading to several critical failures:

- Prediction Inaccuracy: Standard Quantitative Structure-Activity Relationship (QSAR) and deep learning models exhibit significantly deteriorated performance when predicting the potency of activity cliff compounds. Studies show that neither increasing training data size nor model complexity reliably improves accuracy for these challenging cases [8].

- Generalization Failure: Models tend to overfit the shared structural features of an activity cliff pair and fail to capture the subtle structural differences responsible for the dramatic activity shift. This is a specific instance of the "intra-scaffold" generalization problem [20].

- Misguided Generative Design: In generative molecular design, optimization driven by inaccurate property predictors can steer the search toward suboptimal or outright false regions of chemical space. If a model cannot recognize the sharp discontinuities of an activity cliff, it may fail to propose the small but critical structural changes needed for optimization [23] [8].

Methodological Advances in Activity Cliff-Aware AI

In response to these challenges, researchers have developed novel AI frameworks that explicitly account for activity cliffs. The following table summarizes three key state-of-the-art approaches.

Table 2: Comparison of Advanced Activity Cliff-Aware AI Frameworks

| Framework | Core Innovation | Reported Advantage |

|---|---|---|

| ACARL (Activity Cliff-Aware Reinforcement Learning) [8] | Integrates a contrastive loss function within an RL loop to prioritize learning from identified activity cliff compounds. | Superior generation of high-affinity molecules by focusing optimization on high-impact SAR regions. |

| ACES-GNN (Activity-Cliff-Explanation-Supervised GNN) [20] | Incorporates explanation supervision into GNN training, forcing model attributions to align with ground-truth substructures causing ACs. | Simultaneously improves predictive accuracy and model interpretability for ACs across 30 pharmacological targets. |

| ACtriplet [7] | Combines a pre-training strategy with a triplet loss function, a technique borrowed from facial recognition. | Significantly improves deep learning performance on 30 benchmark datasets by making better use of limited data. |

Deep Dive: The ACARL Framework

The ACARL framework represents a significant shift from conventional reinforcement learning for molecular generation. Its methodology can be broken down into two core components:

- Activity Cliff Index (ACI): A quantitative metric designed to identify and rank activity cliff compounds within a dataset. The ACI measures the intensity of the SAR discontinuity by combining measures of structural similarity and potency difference [8].

- Contrastive Loss in RL: A custom loss function integrated into the reinforcement learning process. This loss function amplifies the reward or penalty associated with activity cliff compounds, forcing the generative model to pay more attention to these high-value, high-sensitivity regions of the chemical space. This shifts the model's focus from overall average performance to robust performance in critical areas [8].

The workflow of the ACARL framework, from data preparation to molecule generation, is illustrated below.

Deep Dive: The ACES-GNN Framework

The ACES-GNN framework tackles the "black box" problem of GNNs while improving their performance on activity cliffs. The key innovation is the use of explanation supervision.

Experimental Protocol for ACES-GNN:

- Data Curation: Assemble a dataset from resources like ChEMBL, encompassing multiple pharmacological targets (e.g., kinases, proteases) [20].

- Activity Cliff Identification: For each target, identify AC pairs using the defined similarity and potency thresholds (e.g., ECFP4 Tanimoto ≥ 0.9, ΔpKi ≥ 1.0).

- Ground-Truth Explanation Generation: For each AC pair, the ground-truth atom-level explanation is defined. The uncommon substructures attached to the shared molecular scaffold are labeled as the true drivers of the activity difference [20].

- Model Training with Explanation Loss: A standard GNN (e.g., a Message Passing Neural Network) is trained with a multi-task loss function (L_total):

L_total = L_prediction + λ * L_explanation

Here, L_prediction is the standard loss for activity prediction (e.g., Mean Squared Error), and L_explanation is a loss term that penalizes the model when its internal feature attributions (e.g., from a method like Gradient-weighted Class Activation Mapping) do not align with the ground-truth atom coloring. The hyperparameter λ controls the strength of the explanation supervision [20].
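The structure of this multi-task loss can be illustrated with a small, framework-free sketch. The text does not specify the exact form of L_explanation, so the version below assumes a mean squared error between per-atom attribution scores and a 0/1 ground-truth mask; that choice, the function names, and the toy numbers are assumptions for illustration and are not taken from ACES-GNN.

```python
import math


def mse(values, targets):
    """Mean squared error between two equal-length sequences."""
    return sum((v - t) ** 2 for v, t in zip(values, targets)) / len(values)


def total_loss(pred_activity, true_activity, attributions, ground_truth_mask, lam=0.5):
    """L_total = L_prediction + lam * L_explanation.

    Assumption: L_explanation is the MSE between per-atom attributions and a 0/1 mask
    marking the atoms labeled as responsible for the activity difference.
    """
    l_prediction = mse(pred_activity, true_activity)
    l_explanation = mse(attributions, ground_truth_mask)
    return l_prediction + lam * l_explanation


# Hypothetical values: two predicted pKi values and four atom-level attributions.
loss = total_loss(
    pred_activity=[7.0, 6.0],
    true_activity=[7.5, 6.0],
    attributions=[0.9, 0.1, 0.2, 0.8],
    ground_truth_mask=[1, 0, 0, 1],
    lam=0.5,
)
print(round(loss, 4))  # 0.125 + 0.5 * 0.025 = 0.1375
```

Setting lam to 0 recovers ordinary activity regression, which makes it a convenient baseline when judging how much the explanation term contributes.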

Experimental Protocols and Benchmarking

Rigorous evaluation is paramount for validating the real-world utility of activity cliff-aware models. The following protocol provides a template for benchmarking.

A Protocol for Benchmarking AC-Aware Models

Objective: To compare the performance of a novel activity cliff-aware model against baseline models in generating/predicting molecules with desired properties, with a focus on robustness to SAR discontinuities.

Materials (The Scientist's Toolkit):

Table 3: Essential Research Reagents and Computational Tools

| Item / Resource | Type | Function in Experiment |

|---|---|---|

| ChEMBL Database [20] [24] | Public Bioactivity Database | Primary source for curated molecular datasets with binding affinity data (e.g., Ki, IC₅₀). |

| RDKit [6] | Cheminformatics Toolkit | Used to compute molecular descriptors, fingerprints (ECFP), and handle molecular data. |

| CARA Benchmark [24] | Specialized Benchmark Dataset | Provides assays pre-classified as Virtual Screening (VS) or Lead Optimization (LO), enabling realistic task-specific evaluation. |

| Docking Software (e.g., AutoDock Vina) [8] | Structure-Based Scoring | Used as an oracle to estimate binding affinity, providing a computationally-derived ground truth for generated molecules. |

| FS-Mol Dataset [24] | Few-Shot Learning Benchmark | Useful for evaluating model performance in data-scarce regimes common in drug discovery. |

Methodology:

- Data Sourcing and Curation:

Activity Cliff Annotation:

- For all molecular pairs in each dataset, compute pairwise Tanimoto similarity using ECFP4 fingerprints.

- Compute the absolute difference in pKi values.

- Label pairs that meet the thresholds (e.g., similarity ≥ 0.9, ΔpKi ≥ 1.0) as activity cliffs [20].

Model Training and Evaluation:

- Models: Train the proposed model (e.g., ACARL, ACES-GNN) and several baselines (e.g., standard GNN, VAE, Random Forest on ECFP).

- Splitting: Use a time-split or scaffold-split to avoid data leakage and better simulate real-world generalization [24].

- Evaluation Metrics:

- For Prediction: Report standard metrics (RMSE, MAE, AUROC) but include a separate analysis on the subset of activity cliff molecules.

- For Generation: Evaluate the diversity, synthesizability, and binding affinity (via docking) of generated molecules. Critically, assess the model's ability to generate novel activity cliff compounds.
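The separate analysis on activity cliff molecules recommended for prediction metrics can be sketched as follows. The function names and the toy values are hypothetical; the boolean flag per molecule is assumed to come from the annotation step described earlier.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error over paired true and predicted values."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def rmse_overall_and_cliff(y_true, y_pred, is_cliff):
    """Return (overall RMSE, RMSE restricted to molecules flagged as cliff members)."""
    overall = rmse(y_true, y_pred)
    cliff_pairs = [(t, p) for t, p, flag in zip(y_true, y_pred, is_cliff) if flag]
    if not cliff_pairs:
        return overall, None  # no cliff molecules in this split
    cliff_true, cliff_pred = zip(*cliff_pairs)
    return overall, rmse(cliff_true, cliff_pred)


# Hypothetical pKi values; the last two molecules belong to activity cliff pairs.
y_true = [7.0, 8.0, 6.0, 9.0]
y_pred = [7.0, 8.0, 7.0, 7.0]
is_cliff = [False, False, True, True]

overall, cliff = rmse_overall_and_cliff(y_true, y_pred, is_cliff)
print(f"overall RMSE = {overall:.3f}, cliff RMSE = {cliff:.3f}")
```

Reporting both numbers side by side shows whether a low overall error hides poor performance on the cliff subset.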

Benchmarking Results and Insights

Recent benchmarking efforts on real-world datasets like CARA reveal critical insights:

- Task-Specific Performance: Training strategies that work well for Virtual Screening (VS) assays (e.g., meta-learning) may not be optimal for Lead Optimization (LO) assays, which are densely populated with congeneric compounds and activity cliffs [24].

- The Data Bottleneck: Representation learning models (e.g., GNNs) require large datasets to excel. In low-data regimes, simpler models using fixed fingerprints can be more robust, highlighting the importance of few-shot learning techniques [6] [25].

- Limitations of Current Models: Even advanced models show limitations in sample-level uncertainty estimation and consistently predicting activity cliffs, indicating a need for further research [24].

The Business Imperative: The Patent Cliff Context

The technical challenges of molecular prediction and design are set against a backdrop of immense financial pressure on the pharmaceutical industry. The period from 2025 to 2030 is projected to see the largest "patent cliff" in history, with an estimated $200-$350 billion in annual revenue at risk as blockbuster drugs like Keytruda (Merck), Eliquis (BMS/Pfizer), and Stelara (J&J) lose patent protection [21] [22].

This creates a dual imperative for AI-driven discovery:

- Mitigating R&D Margin Decline: R&D margins are expected to fall from 29% to 21% of revenue by 2030. Rising clinical trial costs and plummeting phase I success rates (down to 6.7% in 2024 from 10% a decade ago) make efficiency paramount [22]. AI that can accurately predict failures early and optimize leads faster is crucial for maintaining profitability.

- Fueling M&A and Pipeline Replenishment: To replace lost revenue, large pharmaceutical companies are expected to engage in significant mergers and acquisitions (M&A), targeting smaller biotech firms with promising late-stage pipelines [21] [26]. Companies with robust, AI-accelerated discovery platforms—particularly those capable of navigating complex SAR like activity cliffs—will be highly valued assets.

The journey toward robust and reliable AI for molecular science is inextricably linked to solving the activity cliff problem. While methodologies like ACARL and ACES-GNN represent promising advances, several frontiers require continued exploration:

- Integration of Human Feedback: As emphasized in generative AI for drug discovery (GADD), reinforcement learning with human feedback (RLHF) is critical for capturing the nuanced judgment of experienced drug hunters, which is often context-dependent and not fully captured by multiparameter optimization functions [23].

- Uncertainty Quantification: Developing models that can not only predict properties but also reliably quantify their own uncertainty is essential for prioritizing experimental testing and managing risk in drug discovery campaigns.

- Multi-Modal and Explainable AI: Future frameworks must integrate structural biology data (e.g., from docking or molecular dynamics) with ligand-based information [23]. Furthermore, as demonstrated by ACES-GNN, explainability is not a luxury but a necessity for building trust and generating chemically actionable insights.

Success in this domain will yield a profound real-world impact: shortening the timeline from target to candidate, reducing the astronomical costs of drug development, and ultimately, bridging the gap between AI-generated hypotheses and clinically successful molecules.

AI Architectures for Cliff-Aware Prediction and Generation: From Contrastive Learning to Target Perception

The integration of artificial intelligence (AI) into drug discovery promises to revolutionize the traditionally lengthy and costly process of developing effective therapeutics [3]. A central challenge in this field, particularly in de novo molecular design, is the accurate modeling of complex structure-activity relationships (SAR). Among the most significant SAR phenomena is the activity cliff (AC)—a scenario where minimal structural modifications to a molecule result in dramatic, discontinuous shifts in its biological activity [3] [4].

Conventional AI-driven molecular design algorithms often treat activity cliff compounds as statistical outliers, failing to leverage their high informational value in understanding SAR discontinuities [3]. This oversight is a critical limitation, as activity cliffs are not mere artifacts; they represent opportunities to identify transformative molecular changes that can guide the design of compounds with significantly enhanced efficacy [3] [4]. The Activity Cliff-Aware Reinforcement Learning (ACARL) framework is a novel approach designed to address this gap explicitly. By incorporating domain-specific knowledge of activity cliffs directly into the reinforcement learning paradigm, ACARL enables more targeted and effective exploration of the molecular space for drug candidate optimization [3].

The ACARL Framework: Core Architecture and Components

The ACARL framework introduces two primary technical innovations that allow it to prioritize and learn from activity cliff compounds effectively.

The Activity Cliff Index (ACI): A Quantitative Metric for SAR Discontinuity

A fundamental requirement for handling activity cliffs is a robust method for their identification. ACARL formulates a quantitative Activity Cliff Index (ACI) to measure the "smoothness" of the biological activity function over the discrete set of molecular structures [3].

The ACI for two molecules, \(x\) and \(y\), is defined as:

\[ \mathrm{ACI}(x, y; f) := \frac{|f(x) - f(y)|}{d_T(x, y)}, \quad x, y \in S \]

where \(S\) is the set of molecules under consideration, \(f(x)\) and \(f(y)\) represent the biological activities (e.g., binding affinity) of the two molecules, and \(d_T(x, y)\) is the Tanimoto distance between their molecular structure descriptors [3]. This index captures the intensity of an SAR discontinuity by quantifying the change in activity per unit of structural change. A high ACI value pinpoints a pair of compounds where a small structural distance corresponds to a large activity difference, thus flagging a critical activity cliff [3].
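A minimal sketch of this definition is shown below, with fingerprints as sets of on-bit indices and activities as pKi-like values. The handling of identical fingerprints (zero Tanimoto distance), the function names, and the toy molecules are assumptions made for illustration; the definition above does not say how that edge case is treated.

```python
import math


def tanimoto_distance(fp_x, fp_y):
    shared = len(fp_x & fp_y)
    union = len(fp_x) + len(fp_y) - shared
    return 1.0 - (shared / union if union else 1.0)


def aci(fp_x, fp_y, f_x, f_y):
    """Activity Cliff Index: |f(x) - f(y)| divided by the Tanimoto distance d_T(x, y)."""
    distance = tanimoto_distance(fp_x, fp_y)
    if distance == 0.0:
        # Assumption: identical fingerprints give an unbounded index unless activities match.
        return math.inf if f_x != f_y else 0.0
    return abs(f_x - f_y) / distance


# Hypothetical molecules: y is a close analog of x, z is structurally more distant.
fp_x, f_x = set(range(20)), 9.0
fp_y, f_y = set(range(19)) | {25}, 6.3
fp_z, f_z = set(range(10, 30)), 6.3

print(round(aci(fp_x, fp_y, f_x, f_y), 2))  # close analog, same activity gap: high ACI
print(round(aci(fp_x, fp_z, f_x, f_z), 2))  # distant compound, same activity gap: lower ACI
```

The same activity gap yields a much larger index for the close analog, which is the behavior that lets the index rank pairs by cliff intensity.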

Contrastive Loss in Reinforcement Learning: Prioritizing High-Impact Compounds

ACARL incorporates the ACI within a Reinforcement Learning (RL) framework through a tailored contrastive loss function. This component is the engine that drives the model's focus toward high-impact SAR regions [3].

In traditional RL for molecular generation, the learning process often weighs all samples equally. In contrast, ACARL's contrastive loss function actively amplifies the learning signal from activity cliff compounds identified by the ACI [3]. By doing so, it dynamically shifts the model's optimization focus toward regions of the molecular space where small structural changes are known to have significant pharmacological consequences. This mechanism enhances the model's ability to generate novel compounds that align with the complex, non-linear SAR patterns observed with real-world drug targets [3] [27].

Table: Core Components of the ACARL Framework

| Component | Function | Mechanism |

|---|---|---|

| Activity Cliff Index (ACI) | Identifies & quantifies activity cliffs | Calculates the ratio of biological activity difference to Tanimoto structural distance between molecular pairs [3]. |

| Contrastive Loss Function | Guides RL learning process | Amplifies the contribution of high-ACI compounds during model training, focusing optimization on critical SAR regions [3]. |

| Reinforcement Learning Agent | Generates novel molecular structures | Uses a transformer-based decoder to propose new molecules and is rewarded based on their predicted properties [3]. |

Experimental Validation and Performance

The ACARL framework's performance was rigorously evaluated through experiments on multiple biologically relevant protein targets, demonstrating its superiority over existing state-of-the-art molecular generation algorithms [3].

Key Experimental Methodology

The experimental validation of ACARL followed a structured protocol to ensure a fair and meaningful comparison with baseline methods [3]:

- Target Selection: Experiments were conducted across three distinct protein targets to demonstrate generalizability.

- Baseline Comparison: ACARL was compared against other advanced molecular design algorithms.

- Evaluation Metrics: The primary metric for success was the generation of novel molecules with high binding affinity for the specified targets. Structural diversity of the generated molecules was also assessed to ensure the model explored a wide chemical space [3].

- Oracle/Scoring Function: The experiments used structure-based docking software as the scoring function (oracle). Docking scores have been shown to reflect activity cliffs, unlike the simpler scoring functions in benchmarks such as GuacaMol, which often lack this discontinuity [3]. The relationship between the docking score (binding free energy, \(\Delta G\)) and the inhibitory constant (\(K_i\)) is given by: \[ \Delta G = RT \ln K_i \] where \(R\) is the universal gas constant and \(T\) is the temperature. A lower \(K_i\) (and thus a lower, more negative \(\Delta G\)) indicates higher activity [3].
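The free-energy relation in the last bullet can be checked numerically. The sketch below assumes \(K_i\) is expressed in mol/L relative to a 1 M standard state, energies are in kcal/mol, and the temperature defaults to 298.15 K. The function names are illustrative. Docking scores only approximate binding free energies, so the conversion gives an order-of-magnitude guide rather than a measured value.

```python
import math

R_KCAL = 1.987204e-3  # gas constant in kcal/(mol*K)


def delta_g_from_ki(ki_molar, temperature_k=298.15):
    """Delta G = R * T * ln(K_i), with K_i in mol/L."""
    return R_KCAL * temperature_k * math.log(ki_molar)


def ki_from_delta_g(delta_g, temperature_k=298.15):
    """Inverse relation: K_i = exp(Delta G / (R * T))."""
    return math.exp(delta_g / (R_KCAL * temperature_k))


dg_nanomolar = delta_g_from_ki(1e-9)   # tight binder, roughly -12.3 kcal/mol
dg_micromolar = delta_g_from_ki(1e-6)  # weaker binder, less negative Delta G
```

Each 10-fold drop in \(K_i\) lowers \(\Delta G\) by about 1.36 kcal/mol at room temperature, which is why a difference of a few kcal/mol in docking score can correspond to a large potency gap.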

Quantitative Results and Comparative Analysis

ACARL consistently demonstrated an enhanced ability to generate molecules with high predicted binding affinity across the tested protein targets.

Table: Summary of ACARL's Experimental Performance

| Evaluation Aspect | Key Finding | Implication |
|---|---|---|
| Binding Affinity | ACARL surpassed state-of-the-art algorithms in generating high-affinity molecules [3]. | Direct improvement in the primary objective of discovering potent drug candidates. |
| Structural Diversity | The generated molecules exhibited diverse structures [3]. | Indicates robust exploration of chemical space, reducing the risk of over-optimizing for a narrow set of chemotypes. |
| SAR Modeling | Effectively integrated complex SAR principles, including activity cliffs, into the design pipeline [3]. | Moves beyond smooth QSAR assumptions, leading to more practically relevant molecular generation. |
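The source does not state which diversity metric was used. One common choice is internal diversity, the mean pairwise Tanimoto distance across the generated set. The sketch below assumes that metric and represents fingerprints as sets of on-bit indices. The function names are hypothetical.

```python
from itertools import combinations


def tanimoto_distance(fp_a, fp_b):
    """1 - Tanimoto similarity for two fingerprints given as sets of on-bits."""
    union = fp_a | fp_b
    if not union:
        return 0.0
    return 1.0 - len(fp_a & fp_b) / len(union)


def internal_diversity(fingerprints):
    """Mean pairwise Tanimoto distance; 0.0 if fewer than two molecules."""
    pairs = list(combinations(fingerprints, 2))
    if not pairs:
        return 0.0
    return sum(tanimoto_distance(a, b) for a, b in pairs) / len(pairs)


# Three generated molecules: two close analogs and one unrelated scaffold.
generated = [{1, 2, 3}, {1, 2, 4}, {5, 6}]
diversity = internal_diversity(generated)
```

Values near 0 indicate a set of near-duplicates, while values near 1 indicate that the generator is covering structurally distinct chemotypes.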

The Scientist's Toolkit: Essential Research Reagents and Materials

Implementing and experimenting with the ACARL framework requires a combination of software tools, datasets, and computational resources.

Table: Essential Research Reagents and Materials for ACARL

| Item / Resource | Function / Description | Relevance to ACARL |
|---|---|---|
| ChEMBL Database | A large-scale, open-access bioactivity database containing millions of compound-protein interaction records [3]. | Serves as a primary source of training data (molecular structures and associated \(K_i\) activities) for various protein targets [3]. |
| Molecular Docking Software | Computational tools (e.g., AutoDock Vina, Glide) that predict the binding orientation and affinity of a small molecule to a protein target [3]. | Functions as the environment/oracle in the RL loop, providing the reward signal (\(\Delta G\) docking score) for generated molecules [3]. |
| Tanimoto Similarity / MMPs | Methods for quantifying molecular structural similarity. Tanimoto similarity uses molecular fingerprints, while Matched Molecular Pairs (MMPs) define pairs differing at a single site [3]. | Fundamental for calculating the structural distance, \(d_T(x,y)\), in the Activity Cliff Index formula [3]. |
| Reinforcement Learning Library | A software framework for implementing RL algorithms (e.g., OpenAI Gym, Ray RLlib). | Provides the infrastructure for building and training the RL agent that generates molecular structures. |
| Chemical Representation Library | Software like RDKit or PaDEL for calculating molecular descriptors and fingerprints [3]. | Used to convert molecular structures into machine-readable representations (e.g., ECFPs) for similarity calculation and model input. |

The ACARL framework represents a paradigm shift in AI-driven molecular design by moving beyond the assumption of smooth structure-activity landscapes. Its explicit formulation of the Activity Cliff Index and the integration of a contrastive loss within a reinforcement learning pipeline demonstrate the powerful synergy of combining deep domain knowledge with advanced machine learning [3]. This approach allows generative models to prioritize and exploit high-impact regions of the chemical space, leading to the more efficient discovery of novel, high-affinity drug candidates.

Future work in this field will likely focus on extending this principle to multi-parameter optimization, where activity cliffs must be balanced against other critical properties like ADMET (Absorption, Distribution, Metabolism, Excretion, and Toxicity). Furthermore, applying similar cliff-aware paradigms to other material generative AI research areas could unlock new avenues for discovering compounds with tailored, discontinuous property enhancements.

Activity cliffs (ACs), characterized by small structural modifications in molecules leading to significant changes in biological activity, represent a critical challenge in drug discovery and materials generative AI research. Traditional computational methods, which predominantly focus on ligand information, face significant limitations in robustness and generalizability across diverse receptor-ligand systems. This whitepaper presents MTPNet (Multi-Grained Target Perception network), a unified framework that innovatively incorporates multi-grained protein semantic conditions to dynamically optimize molecular representations for activity cliff prediction. By integrating both Macro-level Target Semantic (MTS) guidance and Micro-level Pocket Semantic (MPS) guidance, MTPNet internalizes complex interaction patterns between molecules and their target proteins through conditional deep learning. Extensive experimental validation on 30 representative activity cliff datasets demonstrates that MTPNet significantly outperforms previous state-of-the-art approaches, achieving an average RMSE improvement of 18.95% on top of several mainstream GNN architectures. This technical guide provides an in-depth examination of MTPNet's architectural principles, detailed methodologies for implementation, and comprehensive performance benchmarks, establishing a new paradigm for activity cliff-aware generative AI in drug discovery [28].

In the field of drug discovery and materials generative AI, Activity Cliffs (ACs) present a formidable challenge where minor structural changes in molecules yield significant differences in biological activity. These discontinuities in structure-activity relationships (SAR) complicate the drug optimization process and serve as a major source of prediction error in conventional AI models. Traditional computational methods have primarily relied on molecular fingerprint comparison and similar techniques but suffer from limited robustness and generalization [28]. The fundamental limitation of these approaches lies in their focus on modeling molecules themselves while overlooking the critical role of paired receptor proteins in determining biological activity [28].

The emergence of deep learning approaches, particularly Graph Neural Networks (GNNs), has advanced the field beyond traditional methods. Models such as MoleBERT, ACGCN, and MolCLR have demonstrated improved capability in capturing complex structure-activity relationships [28]. However, these methods still face two significant challenges: (1) insufficient use of protein features hampers accurate modeling of molecular-protein interactions, and (2) limited generalizability across various types of AC prediction tasks constrains their applicability to different binding targets [28]. This limitation has become a critical bottleneck hindering the widespread adoption of AI-driven approaches in practical drug discovery applications [28].

MTPNet addresses these fundamental limitations by introducing a novel paradigm that incorporates receptor protein information as guiding semantic conditions. This approach enables the model to capture critical dynamic interaction characteristics that drive activity cliff phenomena, providing a unified framework for activity cliff prediction across diverse receptor-ligand systems [28].

MTPNet Architectural Framework

Core Theoretical Foundation

MTPNet operates on the fundamental principle that activity cliffs are driven by complex interactions between ligands and receptor proteins, rather than being intrinsic properties of molecules alone. The framework formalizes activity cliff prediction within a conditional deep learning paradigm where protein information serves as semantic guidance for optimizing molecular representations. Formally, for an activity cliff molecular dataset \(D\), each instance \(x_i\) represents input features of a molecular-receptor pair, with \(y_i\) representing the corresponding continuous property value (change in compound potency values, \(\Delta pK_i\)). The input features \(x_i\) include receptor protein features \(x_i^{\text{pro}(m)}\) and ligand molecule features \(x_i^{\text{mol}(m)}\), which are fused using the Multi-Grained Target Perception (MTP) Module to capture critical interaction features [28].

The framework categorizes binding targets into single binding target and multiple binding targets, acknowledging that different binding targets affect how molecules bind to receptor proteins, thereby influencing model training and accuracy [28]. This distinction enables MTPNet to handle diverse prediction scenarios across different drug discovery contexts.

Multi-Grained Target Perception Module

The MTP module constitutes the core innovation of MTPNet, comprising two complementary components that operate at different granularities to capture protein-ligand interaction semantics:

Macro-level Target Semantic (MTS) Guidance

The MTS component focuses on global interaction patterns between molecules and proteins, capturing broad functional characteristics that influence binding affinity. This high-level semantic guidance enables the model to understand how different protein families or types interact with molecular structures at a macroscopic level. The MTS guidance operates by extracting holistic protein features and establishing their correlation with molecular representations through cross-attention mechanisms, allowing the model to learn which molecular features are most relevant for specific protein classes [28].

Micro-level Pocket Semantic (MPS) Guidance

The MPS component targets precise spatial and chemical interactions at the binding site level, detecting small structural variations that result in significant differences in biological activity. This fine-grained guidance analyzes the physicochemical properties and spatial arrangements of protein binding pockets, focusing on atomic-level interactions that drive activity cliff phenomena. By perceiving critical interaction details at this granular level, MPS guidance enables the model to identify subtle structural changes in molecules that disproportionately impact binding affinity [28].

The synergistic combination of MTS and MPS guidance allows MTPNet to dynamically optimize molecular representations through multi-grained protein semantic conditions, effectively capturing both broad interaction patterns and precise critical contacts that determine activity cliff behavior [28].

Architectural Implementation

The MTPNet architecture implements the MTP module as a plug-and-play component that can be integrated with various mainstream GNN backbones. The protein features are extracted using advanced protein language models (PLMs) such as ESM (Evolutionary Scale Modeling) and SaProt, which leverage self-supervised learning on large-scale protein sequences to capture rich semantic representations [28]. These protein representations are then processed through separate pathways for MTS and MPS guidance, generating conditional signals that modulate the molecular representation learning process.

The molecular representations are typically extracted using GNNs that operate on molecular graphs, capturing structural and chemical features. The MTP module fuses protein and molecular representations through cross-attention mechanisms, enabling the model to focus on molecular substructures that are most relevant for interaction with specific protein features. This conditional optimization process results in interaction-aware molecular representations that significantly enhance activity cliff prediction accuracy [28].
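The fusion step can be pictured with a stripped-down cross-attention sketch in which each atom embedding (query) attends over protein token embeddings (keys and values). This is not MTPNet's implementation. It omits learned projection matrices, multiple heads, and the separate MTS and MPS pathways, and all names and example vectors are assumptions chosen to keep the example small and dependency-free.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def cross_attention(atom_feats, protein_feats):
    """Condition atom features on protein features via scaled dot-product attention.

    Returns (fused atom features, attention weights per atom).
    """
    dim = len(protein_feats[0])
    scale = math.sqrt(dim)
    fused, attn = [], []
    for query in atom_feats:
        weights = softmax([dot(query, key) / scale for key in protein_feats])
        context = [
            sum(w * value[j] for w, value in zip(weights, protein_feats))
            for j in range(dim)
        ]
        # Residual connection: keep the atom's own features, add protein context.
        fused.append([q + c for q, c in zip(query, context)])
        attn.append(weights)
    return fused, attn


atom_feats = [[1.0, 0.0], [0.0, 1.0]]                 # 2 atoms, dim 2
protein_feats = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 protein tokens, dim 2
fused, attn = cross_attention(atom_feats, protein_feats)
```

Each row of `attn` shows which protein tokens a given atom draws on, which is the sense in which the fused representation becomes interaction-aware.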

Experimental Protocols and Methodologies

Dataset Preparation and Curation

The experimental validation of MTPNet utilized 30 representative activity cliff datasets encompassing diverse receptor-ligand systems. Each dataset was curated to include molecular structures, corresponding biological activities (typically expressed as \(pK_i = -\log_{10} K_i\), where \(K_i\) is the inhibitory constant), and associated protein target information [28]. The protein features were extracted using pre-trained protein language models, with ESM and SaProt identified as particularly effective for capturing relevant semantic information [28].

For molecular representation, multiple input modalities were supported, including molecular graphs (with atoms as nodes and bonds as edges) and SMILES strings. The activity values were processed as continuous regression targets, with a specific focus on predicting \(\Delta pK_i\) values that quantify the potency differences indicative of activity cliffs [28]. Dataset partitioning followed rigorous protocols to ensure meaningful evaluation, with careful consideration of split strategies to avoid data leakage and assess generalizability across different binding targets.
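The regression target can be made concrete with a short worked example. The sketch assumes \(K_i\) values in mol/L and defines the potency change as a signed difference between two compounds. The sign convention and the function names are assumptions, since the source does not specify them.

```python
import math


def pki(ki_molar):
    """pK_i = -log10(K_i), with K_i in mol/L."""
    return -math.log10(ki_molar)


def delta_pki(ki_reference, ki_analog):
    """Signed potency change going from the reference compound to its analog."""
    return pki(ki_analog) - pki(ki_reference)


# A 100 nM reference compound and a 1 nM analog.
reference_pki = pki(1e-7)
analog_pki = pki(1e-9)
change = delta_pki(1e-7, 1e-9)
```

A 100-fold gain in potency corresponds to a change of 2 log units, so small structural edits that produce shifts of this size are the cases the model needs to predict well.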

Model Training Procedures

The training protocol for MTPNet involved a multi-stage approach:

Initialization: Protein language models and GNN encoders were initialized with pre-trained weights when available, leveraging transfer learning from large-scale molecular and protein datasets [28].