Active Learning in Materials Synthesis: Accelerating Discovery of Next-Generation Biomedical Compounds

Abstract

This article provides a comprehensive guide for researchers on implementing active learning (AL) cycles for experimental materials synthesis, with a focus on biomedical applications. We explore the foundational theory of AL, detailing how it integrates machine learning with robotic experimentation to create closed-loop discovery systems. The guide presents practical methodologies for designing AL experiments, from defining search spaces to selecting acquisition functions. We address common troubleshooting scenarios and optimization strategies for improving model performance and experimental efficiency. Finally, we cover critical validation protocols and comparative analyses of AL against traditional high-throughput screening (HTS), highlighting its transformative potential for accelerating drug development and the discovery of novel therapeutic materials.

What is Active Learning Synthesis? Core Concepts and Scientific Basis

The development of advanced functional materials, from solid-state electrolytes to porous metal-organic frameworks (MOFs) for drug delivery, is bottlenecked by vast, multidimensional design spaces that traditional sequential experimentation explores too slowly. This section details the implementation of a closed-loop, hypothesis-driven Active Learning (AL) cycle, a core methodology in autonomous materials discovery. The cycle integrates computational hypothesis generation, automated robotic synthesis and characterization, data analysis, and model updating to iteratively guide experiments toward target properties with minimal human intervention.

The Core Active Learning Cycle: Protocol and Application Notes

The cycle is defined by four iterative phases, each with specific protocols.

Table 1: Phases of the Active Learning Cycle for Materials Synthesis

| Phase | Key Objective | Primary Agent | Output |
|---|---|---|---|
| 1. Hypothesis & Proposal | Identify the most informative experiment(s) to perform next. | Machine Learning (ML) Model | A set of proposed material compositions/conditions. |
| 2. Robotic Execution | Physically realize the proposed experiments. | Automated Synthesis & Characterization Robotic Platform | Synthesized materials and associated raw characterization data. |
| 3. Data Processing | Transform raw data into structured, model-usable knowledge. | Analysis Pipeline (Automated + Human) | Clean, featurized datasets (e.g., phase purity, surface area, conductivity). |
| 4. Model Update & Learning | Integrate new knowledge to improve the guiding hypothesis. | Learning Algorithm | An updated ML model with reduced uncertainty in the design space. |

Protocol 2.1: Phase 1 - Hypothesis Generation via Acquisition Function

- Objective: Use an ML model (e.g., Gaussian Process Regression) and an acquisition function to propose the next batch of experiments.

- Materials: Trained surrogate model, historical dataset, defined parameter space (e.g., reactant ratios, temperatures, times).

- Procedure:

- Train or load the current surrogate model on all available experimental data.

- Compute the acquisition function (e.g., Expected Improvement, Upper Confidence Bound) over a sampled or enumerated candidate space.

- Select the candidate(s) with maximum acquisition function value. For batch selection, use a diversity-promoting algorithm (e.g., k-means clustering on candidate features).

- Format and dispatch the selected candidates as machine-readable instruction files (e.g., JSON) to the robotic platform.
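The last two steps of this procedure can be sketched as follows. This is a minimal illustration under stated assumptions, not the platform's actual interface: acquisition scores are taken as already computed, a simple greedy minimum-distance rule stands in for the k-means clustering mentioned above, and the JSON field names (`experiments`, `experiment_id`, `parameters`) are hypothetical.

```python
import json
import math

def select_diverse_batch(candidates, scores, batch_size, min_distance):
    """Greedily pick the highest-scoring candidates that lie at least
    `min_distance` (Euclidean) away from every candidate already chosen."""
    ranked = sorted(range(len(candidates)), key=lambda i: scores[i], reverse=True)
    chosen = []
    for i in ranked:
        if all(math.dist(candidates[i], candidates[j]) >= min_distance for j in chosen):
            chosen.append(i)
        if len(chosen) == batch_size:
            break
    return chosen

def to_instruction_json(candidates, indices, parameter_names):
    """Serialize the selected candidates as a machine-readable instruction file."""
    experiments = [
        {"experiment_id": f"exp-{k:03d}",
         "parameters": dict(zip(parameter_names, candidates[i]))}
        for k, i in enumerate(indices, start=1)
    ]
    return json.dumps({"experiments": experiments}, indent=2)

# Candidates are assumed to be normalized to [0, 1] in each dimension.
candidates = [(0.10, 0.20), (0.12, 0.21), (0.80, 0.90), (0.50, 0.50)]
scores = [0.90, 0.85, 0.60, 0.30]  # acquisition values from the surrogate model

batch = select_diverse_batch(candidates, scores, batch_size=2, min_distance=0.2)
instructions = to_instruction_json(candidates, batch, ["reactant_ratio", "temperature"])
print(instructions)
```

The second candidate is skipped even though it scores well, because it is nearly identical to the first; this is the behavior a diversity-promoting batch rule is meant to provide.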

Protocol 2.2: Phase 2 - Robotic Synthesis & Characterization

- Objective: Execute material synthesis and primary characterization without manual steps.

- Materials: Automated liquid/powder handler, parallel reactors (e.g., 96-well microreactors), in-line characterization (e.g., Raman, UV-Vis), centrifugation and filtration robots.

- Procedure:

- Synthesis: Robotic platform dispenses precursors and solvents according to the instruction file into reaction vessels. Reactions proceed under controlled temperature and stirring.

- Primary Processing: Automated workstation performs quenching, centrifugation, and solid isolation.

- In-line Characterization: For relevant properties, transfer slurry or solution for immediate analysis (e.g., UV-Vis for nanoparticle size, Raman for phase ID).

- Data Logging: All instrument parameters, environmental data, and raw analytical outputs are tagged with a unique experiment ID and saved to a centralized database.

Protocol 2.3: Phase 3 - Automated Data Processing Pipeline

- Objective: Convert raw analytical data into quantitative, tabular features.

- Materials: Data pipeline (Python scripts), cloud storage, databases.

- Procedure:

- Ingestion: Pipeline retrieves raw data files (e.g., XRD patterns, gas sorption isotherms) via unique experiment ID.

- Analysis: Scripts perform standardized analysis: XRD patterns are matched to reference phases; sorption isotherms are fitted to calculate BET surface area and pore volume.

- Validation: Results are flagged for human review if confidence metrics are low (e.g., poor XRD fit). A scientist confirms or corrects the analysis.

- Featurization: Validated results are merged with synthesis parameters into a single feature vector per experiment and added to the master training dataset.
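The validation and featurization steps above might look like the sketch below. The record layout, the `confidence` field, and the 0.8 review threshold are assumptions made for illustration; a real pipeline would take these from its own analysis scripts.

```python
def build_feature_row(experiment_id, synthesis_params, analysis_results, min_confidence=0.8):
    """Merge synthesis parameters and analysis results into one flat record.

    Returns (row, needs_review); needs_review is True when any analysis
    result reports a confidence below `min_confidence`.
    """
    needs_review = any(
        result["confidence"] < min_confidence for result in analysis_results.values()
    )
    row = {"experiment_id": experiment_id, **synthesis_params}
    for name, result in analysis_results.items():
        row[name] = result["value"]
    return row, needs_review

# Hypothetical example: one MOF synthesis with two analysed properties.
params = {"temperature_C": 120, "time_h": 24, "ligand_ratio": 1.5}
results = {
    "bet_surface_area_m2_g": {"value": 1450.0, "confidence": 0.95},
    "phase_purity": {"value": 0.88, "confidence": 0.62},  # poor XRD fit
}

row, needs_review = build_feature_row("exp-001", params, results)
print(row, needs_review)
```

Rows flagged for review would be held back from the master training dataset until a scientist confirms or corrects the analysis.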

Protocol 2.4: Phase 4 - Model Retraining & Loop Closure

- Objective: Update the surrogate ML model with new data to close the learning loop.

- Materials: Updated master dataset, ML training infrastructure.

- Procedure:

- The master dataset is split into training/validation sets.

- The surrogate model (from Phase 1) is retrained on the expanded dataset.

- Model performance is evaluated on the validation set (e.g., R² score, mean absolute error). A significant drop triggers hyperparameter tuning.

- The retrained model is deployed as the new hypothesis generator, and the cycle returns to Phase 1.
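The evaluation step can be made concrete with a small check like the one below. It is a sketch only: the protocol does not define what counts as a "significant drop", so the `max_drop` threshold of 0.10 in R² is an assumed value.

```python
def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between measured and predicted values."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def r2_score(y_true, y_pred):
    """Coefficient of determination on a validation set."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

def needs_tuning(previous_r2, current_r2, max_drop=0.10):
    """Flag hyperparameter tuning when R² falls by more than `max_drop`."""
    return (previous_r2 - current_r2) > max_drop

# Hypothetical validation-set values after retraining.
measured = [1.0, 2.0, 3.0, 4.0]
predicted = [1.1, 1.9, 3.2, 3.8]

mae = mean_absolute_error(measured, predicted)
r2 = r2_score(measured, predicted)
print(f"MAE={mae:.3f}, R2={r2:.3f}, tune={needs_tuning(0.95, r2)}")
```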

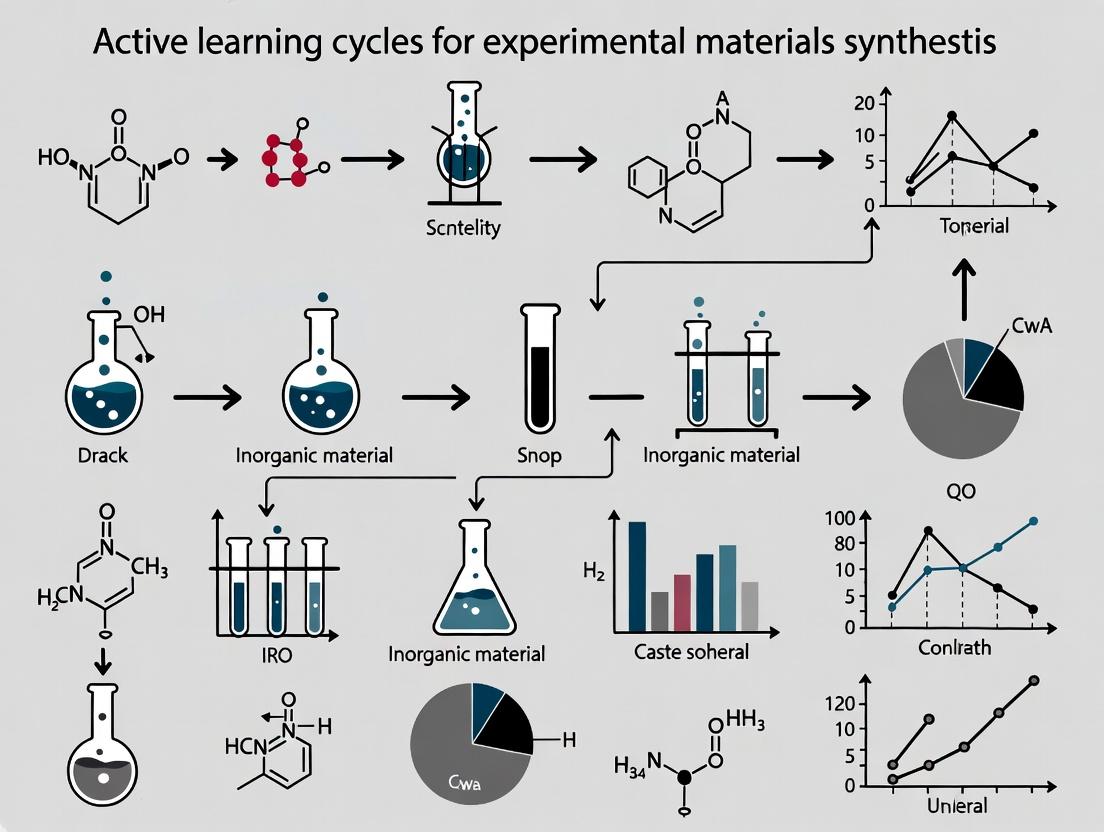

Visualizing the Active Learning Cycle

Diagram 1: The closed-loop Active Learning cycle for materials discovery.

The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Materials and Reagents for an AL-Driven Synthesis Campaign

| Item | Function in the AL Cycle | Example(s) / Notes |
|---|---|---|
| High-Throughput Reactor Array | Enables parallel synthesis of dozens to hundreds of discrete conditions proposed by the AL algorithm. | 96-well glass-lined microreactors, multi-channel parallel pressure reactors. |
| Precursor Stock Solutions | Standardized, robot-handleable forms of metal salts, ligands, and reagents. | 0.1-0.5 M solutions in DMF, water, or ethanol, filtered for stability. |
| Automated Liquid Handling Robot | Precisely dispenses sub-mL volumes of precursors for reproducibility. | Positive displacement or syringe-based systems with washing routines. |
| In-line Spectroscopic Probe | Provides immediate, in-situ feedback on reaction progress or phase formation. | Raman probe with fiber optics, UV-Vis flow cell. |
| Reference Material Standards | Critical for calibrating characterization tools and validating automated analysis. | NIST-standard XRD reference powder, BET calibration gases (N₂, Ar). |
| Data Management Software (ELN/LIMS) | Logs all experimental parameters, links data, and ensures FAIR (Findable, Accessible, Interoperable, Reusable) data principles. | Cloud-based electronic lab notebook (ELN) with API access for robots. |

Table 3: Quantitative Outcomes from Published Active Learning Campaigns in Materials Science

| Target Material System | AL Cycle Iterations | Experiments Conducted | Key Performance Metric Improvement vs. Random Search | Reference (Year) |
|---|---|---|---|---|
| MOFs for CO₂ Capture | 5 | ~200 | Discovered top-performing material 3.5x faster (in # of experiments). | (MacLeod et al., 2022) |
| Perovskite Thin-Film LEDs | 10 | ~3000 | Achieved target photoluminescence quantum yield with 90% fewer experiments. | (Li et al., 2023) |
| Solid-State Li-Ion Conductors | 6 | ~120 | Identified novel high-conductivity phase; 9x acceleration in discovery rate. | (Dave et al., 2024) |
| Heterogeneous Catalysts (Alloys) | 8 | ~500 | Optimized catalytic activity with 70% reduction in required synthesis & testing. | (Szymanski et al., 2023) |

Application Notes

Defining the Core Triad in Active Learning for Materials Synthesis

The optimization of experimental materials synthesis, such as perovskite formulations or metal-organic framework (MOF) conditions, is accelerated through an iterative active learning (AL) cycle. This cycle is governed by three interdependent components that together minimize the number of costly physical experiments required to discover optimal materials.

The Search Space: This is the bounded, multidimensional domain of all possible experimental parameters. For materials synthesis, it is formally defined by the ranges and discretization levels of controllable variables (e.g., precursor ratios, temperature, time, solvent composition). A well-constructed search space balances comprehensiveness with experimental feasibility. Recent studies (2023-2024) emphasize the use of prior knowledge from domain experts to constrain spaces, reducing invalid combinations by 60-80% before any AL cycle begins.

The Machine Learning Model: This surrogate model learns the complex mapping from synthesis parameters (input) to a target property or performance metric (output), such as photovoltaic efficiency or BET surface area. Gaussian Process (GP) regression remains a benchmark due to its native uncertainty quantification. However, for high-dimensional spaces common in chemistry (e.g., >10 variables), advanced models like Bayesian Neural Networks (BNNs) or ensemble methods (e.g., Random Forest with bootstrapped uncertainty) are increasingly prevalent. A 2023 benchmark on oxide stability prediction showed ensemble methods reduced mean absolute error (MAE) by ~22% compared to single GPs in spaces >15 dimensions.

The Acquisition Function: This is the decision engine that proposes the next experiment by balancing exploration (probing uncertain regions) and exploitation (refining known high-performance regions). Common functions include:

- Expected Improvement (EI): Favors points likely to outperform the current best.

- Upper Confidence Bound (UCB): Adds a weighted uncertainty term to the predicted mean.

- Thompson Sampling: Draws a random function from the posterior for selection.

- Knowledge Gradient: Considers the potential value of information after the experiment.

A 2024 meta-analysis of 47 materials AL studies found UCB and EI to be the most frequently used (75% of cases), with Knowledge Gradient gaining traction for batch (parallel) experimental design.
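To make the two most common acquisition functions concrete, the sketch below implements the standard closed-form Expected Improvement and Upper Confidence Bound for a maximization problem, given a Gaussian predictive mean and standard deviation from any surrogate model. The parameter names `xi` and `kappa` and their default values are illustrative choices, not values prescribed by the studies cited here.

```python
import math

def expected_improvement(mean, std, best_so_far, xi=0.0):
    """Closed-form EI for maximization under a Gaussian prediction.

    `xi` is an optional margin that shifts the balance toward exploration.
    """
    improvement = mean - best_so_far - xi
    if std <= 0.0:
        return max(improvement, 0.0)
    z = improvement / std
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return improvement * cdf + std * pdf

def upper_confidence_bound(mean, std, kappa=2.0):
    """Predicted mean plus a weighted uncertainty term."""
    return mean + kappa * std

# Two candidates with the same predicted mean but different uncertainty.
best = 1.0
confident = expected_improvement(mean=1.0, std=0.1, best_so_far=best)
uncertain = expected_improvement(mean=1.0, std=1.0, best_so_far=best)
print(confident, uncertain, upper_confidence_bound(1.0, 0.5))
```

With equal predicted means, the more uncertain candidate receives the higher EI, which is how the exploration term enters the selection.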

Synergistic Operation: The AL cycle begins with an initial dataset. The ML model is trained on this data. The acquisition function then evaluates all candidate points in the search space using the model's predictions and uncertainties, selecting the most "informative" next synthesis condition. After the experiment is performed and its result measured, the new data point is added to the training set, and the cycle repeats.

Quantitative Performance Data

Table 1: Comparative Performance of AL Components in Recent Materials Studies (2022-2024)

| Study Focus | Search Space Size | Primary ML Model | Acquisition Function | Key Result: Experiments Saved vs. Grid Search | Performance Improvement Achieved |
|---|---|---|---|---|---|
| Perovskite Solar Cells (2023) | 7 variables, ~50k combos. | Gaussian Process | Expected Improvement (EI) | 85% reduction (found optimum in 38 vs. 250+ expts) | PCE increased from 18.2% to 21.7% |
| MOF for CO₂ Capture (2022) | 5 variables, ~8k combos. | Random Forest Ensemble | Upper Confidence Bound (UCB) | 78% reduction (45 vs. 200 expts) | CO₂ uptake enhanced by 41% at 0.15 bar |
| Solid-State Electrolyte (2024) | 12 variables, >10⁶ combos. | Bayesian Neural Network | Knowledge Gradient (Batch) | >90% reduction (60 vs. 600+ estimated) | Ionic conductivity optimized to 12.1 mS/cm |
| Polymer Dielectrics (2023) | 4 variables, 1296 combos. | Gaussian Process | Thompson Sampling | 70% reduction (30 vs. 100 expts) | Discovered 5 new polymers with >95% efficiency |

Experimental Protocols

Protocol: Implementing an Active Learning Cycle for Perovskite Thin-Film Synthesis Optimization

Objective: To identify the optimal precursor stoichiometry and annealing conditions for maximizing Power Conversion Efficiency (PCE) of a perovskite solar cell absorber layer.

I. Search Space Definition Protocol

- Parameter Selection: Define the experimental variables and their physically plausible ranges based on literature and precursor chemistry.

- PbI₂ : FAI : MABr Molar Ratio (e.g., 1.0 : 0.8-1.2 : 0.1-0.4)

- Annealing Temperature (°C): 90-150

- Annealing Time (min): 5-20

- DMSO:DMF Solvent Ratio (v/v%): 20:80 - 40:60

- Discretization: For computational tractability, discretize continuous parameters (e.g., temperature in 5°C steps, time in 1-min steps).

- Constraint Encoding: Programmatically exclude known invalid combinations (e.g., low annealing temperature with very short time leading to incomplete conversion).
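The discretization and constraint-encoding steps can be sketched as a filtered grid. The temperature and time steps follow the protocol above; the step sizes for the molar ratios and solvent ratio, and the thresholds in `is_feasible`, are assumptions chosen only to illustrate the mechanism.

```python
from itertools import product

def frange(start, stop, step):
    """Inclusive list of evenly spaced floats, rounded to avoid drift."""
    n_steps = int(round((stop - start) / step))
    return [round(start + i * step, 6) for i in range(n_steps + 1)]

# Discretized levels (PbI2 is fixed at 1.0 and therefore not enumerated).
fai_ratio = frange(0.8, 1.2, 0.1)        # assumed 0.1 step -> 5 levels
mabr_ratio = frange(0.1, 0.4, 0.1)       # assumed 0.1 step -> 4 levels
anneal_temp_c = list(range(90, 151, 5))  # 5 degC steps -> 13 levels
anneal_time_min = list(range(5, 21))     # 1-min steps -> 16 levels
dmso_vol_pct = list(range(20, 41, 5))    # assumed 5% step -> 5 levels

def is_feasible(fai, mabr, temp_c, time_min, dmso):
    """Hypothetical rule: low temperature with very short time is excluded
    because conversion would be incomplete."""
    return not (temp_c < 100 and time_min < 8)

full_grid = list(product(fai_ratio, mabr_ratio, anneal_temp_c, anneal_time_min, dmso_vol_pct))
search_space = [point for point in full_grid if is_feasible(*point)]

print(len(full_grid), len(search_space))
```

Filtering before the first AL iteration means the acquisition function never has to score, or accidentally select, a condition that is already known to fail.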

II. Initial Dataset & Model Training Protocol

- Design of Experiments (DoE): Perform an initial set of 12-16 experiments using a space-filling design (e.g., Latin Hypercube Sampling) to cover the defined search space.

- Synthesis & Characterization: a. Prepare precursor solutions according to the specified ratios in the mixed solvent. b. Spin-coat onto prepared ITO/ETL substrates. c. Anneal on a programmable hotplate under N₂ atmosphere at the specified T and t. d. Characterize film morphology via SEM. e. Complete full solar cell device fabrication (add HTL, electrodes). f. Measure current-voltage (J-V) characteristics under simulated AM 1.5G illumination to obtain PCE.

- Data Curation: Create a structured table with synthesis parameters as inputs and PCE as the target output.

- Model Initialization: Train a Gaussian Process (GP) model with a Matern kernel on the initial dataset. Use a 75/25 train-validation split to assess initial prediction error (MAE, R²). Optimize hyperparameters (length scales, noise) via maximum likelihood estimation.
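For the space-filling design in the first step of this protocol, a basic Latin Hypercube sampler needs only the standard library. The sketch below is one simple variant (one uniform draw per stratum, strata shuffled independently per dimension); the bounds mirror the search space defined earlier, and snapping the continuous samples onto the discretized grid is left out.

```python
import random

def latin_hypercube(n_samples, bounds, seed=0):
    """Return `n_samples` points inside `bounds` (a list of (low, high) pairs).

    Each dimension is cut into `n_samples` equal strata, and every stratum
    is sampled exactly once.
    """
    rng = random.Random(seed)
    columns = []
    for low, high in bounds:
        # One uniform draw inside each stratum, then shuffle the stratum order.
        unit = [(i + rng.random()) / n_samples for i in range(n_samples)]
        rng.shuffle(unit)
        columns.append([low + u * (high - low) for u in unit])
    return [tuple(column[i] for column in columns) for i in range(n_samples)]

bounds = [
    (0.8, 1.2),   # FAI molar ratio
    (0.1, 0.4),   # MABr molar ratio
    (90, 150),    # annealing temperature, degC
    (5, 20),      # annealing time, min
    (20, 40),     # DMSO volume fraction, %
]
design = latin_hypercube(14, bounds)
for point in design[:3]:
    print([round(value, 2) for value in point])
```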

III. Iterative AL Cycle Protocol

- Acquisition Optimization: Using the trained GP model, compute the acquisition function (e.g., Expected Improvement) for all candidate points in the discretized search space.

- Next Experiment Proposal: Select the candidate point with the maximum acquisition function value.

- Experimental Validation: Perform the synthesis and characterization (as per II.2) for the proposed condition.

- Model Update: Append the new {parameters, PCE} data pair to the training dataset. Retrain the GP model on the expanded dataset.

- Stopping Criterion: Repeat steps 1-4 until a performance target is met (e.g., PCE > 21%), the acquisition function value falls below a threshold (diminishing returns), or a pre-set budget of experiments (e.g., 50 cycles) is exhausted.

- Validation: Perform triplicate synthesis of the top 3 identified optimal conditions to confirm reproducibility.
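The three stopping conditions listed above can be combined in a single check. The PCE target and budget follow the examples in the protocol; the acquisition threshold of 1e-3 is an assumed value, since the protocol does not specify one.

```python
def stop_reason(best_pce, max_acquisition, n_experiments,
                target_pce=21.0, min_acquisition=1e-3, budget=50):
    """Return why the campaign should stop, or None to continue."""
    if best_pce > target_pce:
        return "target met"
    if max_acquisition < min_acquisition:
        return "diminishing returns"
    if n_experiments >= budget:
        return "budget exhausted"
    return None

print(stop_reason(best_pce=20.4, max_acquisition=0.05, n_experiments=30))
```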

Diagram: Active Learning Cycle for Materials Synthesis

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials & Reagents for Perovskite Synthesis AL Campaign

| Item Name | Function / Role in Protocol | Critical Specifications / Notes |
|---|---|---|
| Lead(II) Iodide (PbI₂) | Primary perovskite precursor. Source of Pb²⁺ in the crystal lattice. | High purity (>99.99%), stored in a dry, inert atmosphere to prevent hydration and oxidation. |
| Formamidinium Iodide (FAI) & Methylammonium Bromide (MABr) | Organic cation precursors. Determine crystal structure, bandgap, and stability. | Purified via recrystallization. Hygroscopic; must be stored in a desiccator and used in a glovebox. |
| Dimethyl Sulfoxide (DMSO) & N,N-Dimethylformamide (DMF) | Co-solvent system. Dissolve precursors; DMSO aids in intermediate complex formation. | Anhydrous grade (<50 ppm H₂O). Stored over molecular sieves. |
| Chlorobenzene (Anti-solvent) | Used during spin-coating to rapidly induce crystallization for uniform film formation. | Anhydrous, high purity. Dripping timing is a critical kinetic parameter. |
| ITO-coated Glass Substrates | Conductive transparent electrode for device fabrication and testing. | Pre-patterned, rigorously cleaned via sequential sonication (detergent, acetone, isopropanol). |
| Titanium Dioxide (TiO₂) or SnO₂ Colloidal Dispersion | Electron Transport Layer (ETL). Facilitates electron extraction and hole blocking. | Filtered (0.22 μm) before spin-coating to ensure pinhole-free films. |
| Spiro-OMeTAD (in Chlorobenzene) | Hole Transport Layer (HTL). Facilitates hole extraction and electron blocking. | Doped with Li-TFSI and tBP additives for enhanced conductivity; prepared fresh. |
| Gold (Au) Evaporation Targets | Source for thermally evaporated top contact electrode. | High purity (99.999%) to ensure good adhesion and low contact resistance. |

In experimental materials science and drug development, optimizing synthesis conditions or molecular properties is a high-dimensional challenge. Traditional approaches include One-Factor-at-a-Time (OFAT) experimentation and basic High-Throughput Screening (HTS). Active Learning (AL) is a machine learning-guided iterative framework that strategically selects the most informative experiments to perform, maximizing knowledge gain per experimental cycle. This application note details the rationale and protocols for implementing AL cycles, contextualized within materials synthesis research.

Quantitative Comparison of Experimental Strategies

Table 1: Performance Comparison of Experimental Design Strategies

| Strategy | Key Principle | Experimental Efficiency (Typical) | Optimal Solution Convergence | Resource Utilization | Adaptability to Complexity |
|---|---|---|---|---|---|
| One-Factor-at-a-Time (OFAT) | Vary one factor while holding others constant. | Very Low; Requires ~O(N) experiments per factor. | Low; Misses interactions, often finds local optima. | High waste; many non-optimal experiments. | Poor; fails with factor interactions. |
| Basic High-Throughput Screening (HTS) | Run a large, pre-defined grid or random set of conditions. | Moderate-High (throughput) but Low (insight/exp). | Moderate; Can find good regions but inefficiently. | Very high initial investment; many redundant tests. | Moderate; maps space but without intelligence. |
| Active Learning (AL) Cycle | Iteratively select experiments to maximize model improvement. | Very High; Reduces needed expts by 50-90% vs. OFAT/HTS. | High; Efficiently finds global or near-global optima. | Optimal; focuses resources on informative points. | Excellent; explicitly models interactions & uncertainty. |

Data synthesized from recent literature on materials optimization (e.g., perovskite solar cells, MOF synthesis, catalyst design) and drug candidate profiling.

Detailed Protocols

Protocol 1: Initiating an Active Learning Cycle for Synthesis Optimization

Objective: To establish the initial dataset and machine learning model for an AL-driven optimization of a target material property (e.g., photocatalytic yield, battery cycle life).

Materials: See "The Scientist's Toolkit" table later in this section.

Procedure:

Define Search Space:

- Identify critical synthesis factors (e.g., precursors A & B concentration, temperature, time, pH).

- Set feasible minimum and maximum bounds for each continuous factor. Define discrete levels for categorical factors (e.g., solvent type).

Acquire Initial Dataset:

- Perform a space-filling design (e.g., Latin Hypercube Sampling) across the defined search space.

- Recommended: Run 10-20 initial experiments. This provides broad coverage for the initial model.

- Execute synthesis and characterization to measure the target property for each condition. Record in a structured database.

Train Initial Surrogate Model:

- Use a probabilistic model such as Gaussian Process Regression (GPR) or an ensemble method.

- Input: Factor values from Step 2. Output: Measured target property.

- The model learns the underlying response surface and, critically, quantifies its own prediction uncertainty across the search space.

Query Next Experiment Using Acquisition Function:

- An acquisition function balances exploration (sampling high-uncertainty regions) and exploitation (sampling near predicted optima).

- Common Function: Expected Improvement (EI).

- Let the model evaluate EI for thousands of candidate conditions in silico.

- Select the condition with the highest EI score as the next experiment to run.

Execute Experiment & Update Cycle:

- Perform the synthesis and characterization for the selected condition.

- Append the new data point (factors + result) to the training dataset.

- Retrain/update the surrogate model with the expanded dataset.

- Return to Step 4. Continue until performance target is met or resources are expended.
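The five steps of Protocol 1 can be tied together in a compact simulation. The sketch below assumes NumPy is available and makes several simplifications that should be read as assumptions: a one-dimensional search space, a simulated `run_experiment` function in place of real synthesis, three hand-picked starting points instead of a Latin Hypercube design, and a Gaussian Process with a fixed squared-exponential kernel rather than fitted hyperparameters.

```python
import math
import numpy as np

def run_experiment(x):
    """Simulated response surface (hypothetical); stands in for a real measurement."""
    return np.exp(-(x - 0.7) ** 2 / 0.02) + 0.5 * np.exp(-(x - 0.2) ** 2 / 0.01)

def rbf_kernel(a, b, length_scale=0.15):
    """Unit-variance squared-exponential kernel between 1-D arrays a and b."""
    return np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / length_scale ** 2)

def gp_posterior(x_train, y_train, x_query, noise=1e-4):
    """Posterior mean and std of a GP with a constant mean and fixed kernel."""
    y_mean = y_train.mean()
    k_tt = rbf_kernel(x_train, x_train) + noise * np.eye(len(x_train))
    k_tq = rbf_kernel(x_train, x_query)
    solved = np.linalg.solve(k_tt, np.column_stack([y_train - y_mean, k_tq]))
    mean = y_mean + k_tq.T @ solved[:, 0]
    var = 1.0 - np.sum(k_tq * solved[:, 1:], axis=0)
    return mean, np.sqrt(np.clip(var, 1e-12, None))

def expected_improvement(mean, std, best):
    """Vectorized closed-form EI for maximization."""
    z = (mean - best) / std
    cdf = 0.5 * (1.0 + np.vectorize(math.erf)(z / math.sqrt(2.0)))
    pdf = np.exp(-0.5 * z ** 2) / math.sqrt(2.0 * math.pi)
    return (mean - best) * cdf + std * pdf

# Steps 1-2: search space and initial dataset.
candidates = np.linspace(0.0, 1.0, 201)
x_train = np.array([0.0, 0.5, 1.0])
y_train = run_experiment(x_train)
initial_best = y_train.max()

# Steps 3-5: train, query, execute, update; repeat for a fixed budget.
for _ in range(12):
    mean, std = gp_posterior(x_train, y_train, candidates)
    ei = expected_improvement(mean, std, y_train.max())
    x_next = candidates[np.argmax(ei)]
    x_train = np.append(x_train, x_next)
    y_train = np.append(y_train, run_experiment(x_next))

print("best x:", x_train[np.argmax(y_train)], "best y:", y_train.max())
```

In a real campaign the kernel hyperparameters would be refitted at each iteration and the loop would end on a stopping criterion rather than a fixed count.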

Diagram 1: Active Learning Cycle for Experimentation

Protocol 2: Benchmarking AL vs. OFAT (Simulation-Based)

Objective: To computationally demonstrate the efficiency gain of AL over OFAT.

Procedure:

Choose a Simulated Test Function:

- Use a known function with multiple local optima and interaction effects (e.g., Branin-Hoo function, 2D; or a synthetic pharmacokinetic model).

- This function represents the hidden "true" relationship between factors and the response.

OFAT Simulation:

- Select a baseline condition.

- Vary Factor A across its range while holding others constant. Identify best A value.

- Using this best A, vary Factor B across its range. Identify best B value.

- Record the total number of function evaluations (experiments) and the final performance.

AL Simulation:

- Start with the same small, space-filling initial dataset as in Protocol 1 (Step 2).

- Implement the full AL cycle (Protocol 1, Steps 3-5) using the simulated function to return results.

- Run for the same number of total function evaluations as used in the OFAT run.

Analysis:

- Plot the best performance discovered vs. number of experiments for both strategies.

- Result: The AL curve will typically rise faster and to a higher final value, demonstrating superior sample efficiency.
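The OFAT arm of this benchmark is easy to reproduce with the standard library. The sketch below negates the Branin-Hoo function so that larger values are better, matching the "rise faster" framing above; the baseline condition and the 11 levels per factor are arbitrary assumptions. The AL arm would run the Protocol 1 cycle against the same `neg_branin` function with the same evaluation budget.

```python
import math

def neg_branin(x1, x2):
    """Negated Branin-Hoo function on x1 in [-5, 10], x2 in [0, 15]."""
    a, b, c = 1.0, 5.1 / (4.0 * math.pi ** 2), 5.0 / math.pi
    r, s, t = 6.0, 10.0, 1.0 / (8.0 * math.pi)
    return -(a * (x2 - b * x1 ** 2 + c * x1 - r) ** 2 + s * (1.0 - t) * math.cos(x1) + s)

def linspace(low, high, n):
    """n evenly spaced values from low to high, inclusive."""
    return [low + i * (high - low) / (n - 1) for i in range(n)]

def ofat(objective, baseline, levels_per_factor):
    """Sweep one factor at a time, fixing each at its best level before moving on."""
    current = list(baseline)
    best_value = -math.inf
    n_evals = 0
    for dim, levels in enumerate(levels_per_factor):
        best_value, best_level = -math.inf, current[dim]
        for level in levels:
            trial = current[:dim] + [level] + current[dim + 1:]
            value = objective(*trial)
            n_evals += 1
            if value > best_value:
                best_value, best_level = value, level
        current[dim] = best_level
    return current, best_value, n_evals

levels = [linspace(-5.0, 10.0, 11), linspace(0.0, 15.0, 11)]
best_point, best_value, n_evals = ofat(neg_branin, baseline=(2.5, 7.5), levels_per_factor=levels)
print(best_point, best_value, n_evals)
```

Recording `best_value` after each evaluation, rather than only at the end, gives the best-so-far curve needed for the comparison plot.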

Diagram 2: Logic of Performance Benchmarking

The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Components for an Active Learning-Driven Synthesis Lab

| Item / Solution | Function in AL Cycle | Example / Note |
|---|---|---|
| Automated Synthesis Platform | Executes physical experiments from digital instructions; enables rapid iteration. | Liquid handling robot, modular microwave synthesizer, automated flow reactor. |
| High-Throughput Characterization | Provides rapid, quantitative feedback on target properties for many samples. | Plate reader (absorbance/fluorescence), parallel electrochemical station, automated XRD/GC-MS. |
| Data Management Platform | Logs all experimental factors (inputs) and results (outputs) in a structured, queryable format. | Electronic Lab Notebook (ELN) with API access, centralized SQL database. |
| Machine Learning Software | Builds surrogate models and calculates acquisition functions to propose experiments. | Python libraries: scikit-learn, GPyTorch, Dragonfly. Dedicated platforms: Citrination, MLplates. |
| Computational Infrastructure | Runs model training and in-silico candidate evaluation, which can be compute-intensive. | Cloud computing instances (AWS, GCP) or local high-performance computing (HPC) cluster. |
| Standardized Chemical Libraries | Provides consistent, high-quality starting materials for exploring compositional spaces. | Stock solutions of precursors, pre-weighed reactant cartridges for robots. |

The evolution from traditional, manually operated laboratories to Self-Driving Laboratories (SDLs) represents a paradigm shift in experimental research. This transition is rooted in the convergence of high-throughput automation, artificial intelligence (AI), and advanced data analytics. The table below summarizes key quantitative milestones in this evolution.

Table 1: Quantitative Milestones in the Evolution Towards SDLs

| Decade | Key Development | Representative Throughput/Performance | Enabling Technology |
|---|---|---|---|
| 1990s | High-Throughput Screening (HTS) | 10,000-100,000 compounds/week | Robotic liquid handlers, microplates |
| 2000s | Laboratory Automation & LabVIEW | Automated single workflows | Programmable lab equipment, PLCs |
| 2010s | AI for Materials & Drug Discovery | ~10x faster candidate identification | Machine Learning (RF, SVM), cloud computing |
| 2020-Present | Closed-Loop SDLs | 24/7 autonomous operation; 10-100x acceleration | Active Learning, robotics, IoT, DL models (GNNs) |

Core Protocol: Establishing an Active Learning Cycle for an SDL

This protocol outlines the foundational closed-loop cycle central to modern SDLs for materials synthesis.

Protocol 1: Closed-Loop Active Learning for Experimental Synthesis

Objective: To autonomously discover or optimize a target material (e.g., a perovskite ink, organic photocatalyst) by integrating AI-driven prediction, automated synthesis, and characterization.

Materials & Reagents:

- Targeted Chemical Space: Define initial set of precursors, solvents, and synthesis conditions (e.g., temperature, time).

- Automated Synthesis Platform: Robotic arm or fluidic system capable of precise dispensing, mixing, and reaction control.

- Inline/Online Characterization Tools: Spectrophotometer, HPLC, particle size analyzer integrated into the workflow.

- Computational Infrastructure: Server/cloud instance running the active learning model and database.

Procedure:

Initial Design of Experiments (DoE):

- Using the defined chemical space, select an initial set of 20-50 experiments via a space-filling algorithm (e.g., Latin Hypercube Sampling) or from existing historical data.

- Program the automated synthesis platform to execute this batch.

Automated Execution & Data Acquisition:

- The robotic platform prepares samples according to the specified formulations and conditions.

- Immediately route products to integrated characterization tools.

- Parse and log all structured data (formulation parameters, process conditions, characterization results) into a central database.

Model Training & Prediction:

- Train a surrogate model (e.g., Gaussian Process Regression, Neural Network) on all accumulated data to map input parameters to output properties.

- Use an acquisition function (e.g., Expected Improvement, Upper Confidence Bound) on the model to score millions of candidate experiments in silico.

- Select the next batch of 5-20 experiments that maximize the acquisition function (balancing exploration and exploitation).

Closed-Loop Iteration:

- Append the new proposed experiments to the execution queue.

- Repeat steps 2-4 until a performance target is achieved or a predefined iteration limit is reached.

Notes: The cycle's speed is limited by the slowest step, often synthesis or characterization. The choice of surrogate model and acquisition function is critical for efficiency.

The Scientist's Toolkit: Key Research Reagent Solutions for SDLs

Table 2: Essential Materials & Tools for a Materials Synthesis SDL

| Item | Function in SDL Context |
|---|---|
| Modular Robotic Liquid Handler | Precisely dispenses sub-microliter to milliliter volumes of precursors and solvents for reproducible synthesis. |
| Integrated Reaction Block/Module | Provides temperature and stirring control for parallelized chemical reactions. |
| Inline UV-Vis/NIR Spectrophotometer | Provides rapid, non-destructive optical characterization for real-time feedback on reaction progress or material properties. |
| Automated Product Handling (ARM) | Transfers sample vials or well plates between synthesis, characterization, and storage stations. |
| Laboratory Information Management System (LIMS) | Centralized database that logs all experimental metadata, conditions, and results in a structured, queryable format. |
| Active Learning Software Platform | Hosts the surrogate model, runs the acquisition function, and manages the experiment queue (e.g., Phoenics, ChemOS, custom Python code). |

Visualization of Core Concepts

Diagram: Self-Driving Lab Active Learning Cycle

Diagram: Historical Convergence to SDLs

Within the active learning cycle for experimental materials synthesis, in which each iteration of design, synthesis, characterization, and data analysis informs the next, three foundational pillars are critical: robust Data management, deep Domain Knowledge, and scalable Automation Infrastructure. This section provides application notes for establishing these prerequisites to enable closed-loop, AI-driven discovery in materials science and drug development.

Data: Curation, Standards, and Management

High-quality, machine-readable data is the primary fuel for active learning models.

Table 1: Quantitative Data Standards for Materials Synthesis

| Data Category | Key Metrics | Recommended Format | Minimum Required Metadata | Source Example (2024) |
|---|---|---|---|---|
| Synthesis Parameters | Temperature (°C), Time (hr), Precursor Molarity | JSON, CSV | Catalyst ID, Solvent, Equipment Calibration Log | NIST Materials Resource Registry |
| Characterization Results | XRD Peak Positions, BET Surface Area (m²/g), Pore Size (nm) | HDF5, .ibw (Igor Binary) | Instrument Model, Resolution, Analysis Software Version | MIT Nano-Characterization Lab Protocols |
| Performance Data | Photoluminescence Quantum Yield (%), Ionic Conductivity (S/cm) | CSV, .mat | Test Conditions, Reference Standard, Uncertainty | AMPED Project (DOE, 2023) |
| Process Logging | Robotically Executed Steps, Error Flags, Timestamps | Structured Log (e.g., Apache Parquet) | Step ID, Success/Fail, Actor (Human/Robot) | Carnegie Lab (AutoSynthesis Platform) |

Protocol 2.1: Data Capture from Synthesis Robot

Objective: To automatically capture and structure all parameters from a high-throughput solvothermal synthesis run.

Materials:

- Automated liquid handler (e.g., Chemspeed Swing)

- Reactor block with integrated temperature/pressure sensors

- Centralized ELN (Electronic Lab Notebook) system (e.g., Benchling)

Procedure:

- Pre-Run Configuration: Define a JSON schema for the experiment in the ELN, specifying fields for `precursor_list`, `target_temperature`, `stirring_rate`, and `reaction_vessel_ID`.

- Instrument Communication: Use a REST API wrapper to initiate the synthesis script on the Chemspeed platform. The wrapper must log the sent command.

- Real-Time Streaming: Configure the reactor block's sensor outputs to stream timestamped `temperature`, `pressure`, and `optical_monitoring` data via an MQTT broker to a time-series database (e.g., InfluxDB).

- Post-Run Aggregation: Execute a data pipeline script (Python/R) that queries the database and ELN API, merging all run data into a single, versioned HDF5 file using the pre-defined schema.

- Validation: Run automated sanity checks (e.g., temperature within safe bounds, precursor volumes positive) before releasing the dataset for model training.
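The pre-run schema and the final validation step could be checked with a small function like the one below. Only the four top-level field names come from the protocol; the units, the nested `name` and `volume_uL` keys, and the 200 °C safety limit are hypothetical and would need to match the actual ELN schema and equipment limits.

```python
REQUIRED_FIELDS = {
    "precursor_list": list,
    "target_temperature": (int, float),
    "stirring_rate": (int, float),
    "reaction_vessel_ID": str,
}

def validate_run(record, max_safe_temperature=200.0):
    """Return a list of problems found in one run record (empty if it passes)."""
    errors = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in record:
            errors.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            errors.append(f"wrong type for field: {field}")
    if errors:
        return errors  # structural problems make the value checks meaningless

    if not 0.0 < record["target_temperature"] <= max_safe_temperature:
        errors.append("target_temperature outside safe bounds")
    for precursor in record["precursor_list"]:
        if precursor["volume_uL"] <= 0:
            errors.append(f"non-positive volume for {precursor['name']}")
    return errors

run = {
    "precursor_list": [
        {"name": "metal_salt_stock", "volume_uL": 250.0},
        {"name": "ligand_stock", "volume_uL": -10.0},  # deliberate error
    ],
    "target_temperature": 120.0,
    "stirring_rate": 600,
    "reaction_vessel_ID": "A3",
}
print(validate_run(run))
```

Only records that return an empty list would be released for model training.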

Domain Knowledge: Formalizing Expertise

Domain expertise must be encoded to constrain and guide active learning, preventing physically implausible experiments.

Protocol 3.1: Encoding Reaction Constraints as Rule Sets

Objective: To prevent the suggestion of synthetically infeasible conditions by the AI agent.

Procedure:

- Expert Elicitation: Conduct structured interviews with senior chemists to list "hard" constraints (e.g., "Solvent X decomposes above 200°C," "Precursors A and B precipitate in the presence of ion C").

- Rule Formulation: Express each constraint in a formal logic statement. Example: `NOT (Solvent == "DMSO" AND Temperature > 185)`.

- Implementation: Embed these rules as a pre-screening filter in the suggestion generation code. The AI's proposed experiment list passes through this filter, which removes all violating candidates before the list is sent to the experiment queue.

- Maintenance: Establish a version-controlled repository (e.g., Git) for the rule set, requiring peer review for any addition or modification.
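A pre-screening filter of this kind could look like the sketch below. The DMSO rule is the example from the protocol; the dictionary keys (`solvent`, `temperature_C`), the rule name, and the function `filter_candidates` are hypothetical choices for illustration.

```python
from typing import Callable, Dict, List, Tuple

Candidate = Dict[str, object]
# A rule returns True when the candidate VIOLATES the constraint.
Rule = Callable[[Candidate], bool]

RULES: Dict[str, Rule] = {
    # NOT (Solvent == "DMSO" AND Temperature > 185)
    "dmso_max_temperature":
        lambda c: c["solvent"] == "DMSO" and c["temperature_C"] > 185,
}


def filter_candidates(
    candidates: List[Candidate], rules: Dict[str, Rule] = RULES
) -> Tuple[List[Candidate], List[Tuple[Candidate, List[str]]]]:
    """Split candidates into accepted ones and rejected ones with the violated rule names."""
    accepted, rejected = [], []
    for cand in candidates:
        violated = [name for name, rule in rules.items() if rule(cand)]
        if violated:
            rejected.append((cand, violated))
        else:
            accepted.append(cand)
    return accepted, rejected


proposed = [
    {"solvent": "DMSO", "temperature_C": 200},
    {"solvent": "DMSO", "temperature_C": 150},
    {"solvent": "DMF", "temperature_C": 200},
]
accepted, rejected = filter_candidates(proposed)
```

Keeping the names of violated rules alongside each rejected candidate makes the filter auditable, which fits the peer-reviewed rule repository described in the maintenance step.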

Diagram 1: AI suggestions filtered by domain rules.

Automation Infrastructure: The Physical Loop

Reliable robotic systems are required to execute the suggested experiments and collect high-fidelity data.

Table 2: Research Reagent Solutions & Essential Hardware Toolkit

| Item | Function / Rationale | Example Product / Specification |

|---|---|---|

| Modular Liquid Handler | Precise dispensing of precursors/solvents for reproducibility. | Opentrons OT-2, 0.1 µL - 1000 µL pipetting range. |

| Integrated Reactor Block | Parallel synthesis under controlled temperature/pressure. | Unchained Labs Little Bear, 8-96 reactors, -20°C to 150°C. |

| In-Line Spectrometer | Real-time reaction monitoring for kinetic data. | Ocean Insight FX-UVVis, fiber-coupled to reactor flow cell. |

| Automated Solid Handler | Weighing and dispensing of solid precursors. | Chemspeed Technologies SWING powder doser. |

| Central Scheduling Software | Orchestrates hardware, manages task queue, and handles errors. | Synthace Digital Experimentation Platform. |

| Laboratory Execution System (LES) | Standardizes operational protocols across robotic platforms. | Tiamo (Metrohm) or custom Snakemake workflows. |

Protocol 4.1: Workflow for a Single Active Learning Cycle

Objective: To perform one complete iteration of AI-driven synthesis and characterization.

Materials: All items listed in Table 2, plus characterization suite (XRD, SEM).

Procedure:

- Job Initiation: The central scheduler pulls a batch of n validated synthesis recipes (from Protocol 3.1) from the queue.

- Robotic Synthesis:

- a) The solid handler dispenses powders into tared vials.

- b) The liquid handler adds precise volumes of solvents.

- c) Vials are transferred to the reactor block. The reaction proceeds with in-line UV-Vis monitoring.

- d) Post-reaction, the handler quenches the reaction and prepares samples for characterization.

- Automated Characterization: A robotic arm transfers product plates to an automated XRD (e.g., Bruker D8 ADVANCE with sample changer). Data is collected and pre-processed (background subtraction, peak identification) via instrument software.

- Data Aggregation & Model Update: The pipeline aggregates new synthesis parameters and characterization results into the master HDF5 database. The active learning model (e.g., Bayesian optimizer) is retrained on the expanded dataset.

- Next-Batch Suggestion: The updated model suggests the next batch of n experiments by maximizing an acquisition function (e.g., expected improvement), and the cycle repeats.

Diagram 2: One active learning cycle for materials synthesis.

Building Your Active Learning Pipeline: A Step-by-Step Blueprint

Within an active learning (AL) cycle for experimental materials synthesis—such as for novel metal-organic frameworks (MOFs), battery electrolytes, or pharmaceutical co-crystals—the initial step is foundational. This phase transforms a broad research question into a concrete, actionable objective and maps the multidimensional space of possible experiments. It defines the "rules of the game" for the subsequent AL loop, where machine learning models will propose experiments to efficiently navigate this space towards optimal outcomes. A poorly defined objective or an incompletely constituted design space leads to wasted resources and inconclusive results.

Defining the Objective

The objective must be a Specific, Measurable, Achievable, Relevant, and Time-bound (SMART) target function for optimization. In materials synthesis, objectives are often multi-faceted.

Table 1: Common Objective Functions in Materials Synthesis Research

| Objective Type | Primary Metric | Example in Drug Development | Typical Measurement Assay |

|---|---|---|---|

| Maximize Property | Yield, Purity, Stability | Maximize crystallinity & stability of an API co-crystal | Powder X-Ray Diffraction (PXRD), DSC |

| Optimize Formulation | Solubility, Dissolution Rate | Enhance bioavailability of a poorly soluble compound | HPLC, USP Dissolution Apparatus |

| Minimize Cost | $ per kg, # of steps | Reduce cost of goods for a key intermediate | Process mass intensity calculation |

| Multi-Objective | Pareto Frontier (e.g., Stability vs. Solubility) | Balance tabletability with dissolution profile | Multivariate analysis of compaction & dissolution data |

Protocol 2.1: Formalizing a Multi-Objective Goal for an API Solid Form Screen

- Identify Critical Quality Attributes (CQAs): From target product profile, list non-negotiable properties (e.g., chemical stability > 6 months, polymorphic stability).

- Define Primary Optimization Axes: Select 2-3 key, often competing, properties for active optimization (e.g., Intrinsic Solubility vs. Hygroscopicity).

- Establish Metrics and Assays: Assign precise, quantitative measures for each axis (e.g., solubility in µg/mL via UV-Vis; % weight gain at 80% RH via DVS).

- Set Constraints and Thresholds: Define failure boundaries (e.g., yield < 5% is non-viable; any impurity > 0.1% is a failure).

- Formulate as Computational Objective: Express as a multi-objective optimization function (e.g., `Maximize(solubility), Minimize(hygroscopicity) subject to yield > 20% and purity > 98%`).
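One way to make this objective concrete is to filter results by the constraints and then extract the non-dominated (Pareto-optimal) set. The sketch below uses the thresholds from the example objective; the record keys and the sample values are invented purely for illustration.

```python
from typing import Dict, List

Result = Dict[str, float]


def is_feasible(r: Result, min_yield: float = 20.0, min_purity: float = 98.0) -> bool:
    """Constraint check: yield > 20% and purity > 98%."""
    return r["yield_pct"] > min_yield and r["purity_pct"] > min_purity


def dominates(a: Result, b: Result) -> bool:
    """a dominates b if it is no worse on both objectives and strictly better on one."""
    no_worse = (a["solubility"] >= b["solubility"]
                and a["hygroscopicity"] <= b["hygroscopicity"])
    better = (a["solubility"] > b["solubility"]
              or a["hygroscopicity"] < b["hygroscopicity"])
    return no_worse and better


def pareto_front(results: List[Result]) -> List[Result]:
    """Feasible results that no other feasible result dominates."""
    feasible = [r for r in results if is_feasible(r)]
    return [r for r in feasible if not any(dominates(o, r) for o in feasible)]


# Illustrative values only (solubility in ug/mL, hygroscopicity as % weight gain).
screen = [
    {"id": "A", "solubility": 120, "hygroscopicity": 2.0, "yield_pct": 45, "purity_pct": 99.1},
    {"id": "B", "solubility": 150, "hygroscopicity": 3.5, "yield_pct": 30, "purity_pct": 98.5},
    {"id": "C", "solubility": 100, "hygroscopicity": 2.5, "yield_pct": 60, "purity_pct": 99.5},
    {"id": "D", "solubility": 300, "hygroscopicity": 1.0, "yield_pct": 10, "purity_pct": 99.9},
]
front_ids = [r["id"] for r in pareto_front(screen)]
```

Here D is excluded by the yield constraint despite its strong properties, and C is dominated by A, leaving A and B as the trade-off candidates.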

Constituting the Experimental Design Space

The design space is the bounded set of all possible experiments defined by manipulable input variables (factors). A well-constituted space is crucial for AL efficiency.

Table 2: Typical Factor Categories in Pharmaceutical Materials Synthesis

| Factor Category | Specific Factors | Typical Range or Levels | Influence On |

|---|---|---|---|

| Chemical Variables | Reactant stoichiometry, Solvent composition (antisolvent ratio), pH, Additives/Coformers | Continuous (e.g., 1:1 to 1:4 molar ratio) or Discrete (e.g., Solvent A, B, C) | Polymorph outcome, purity, crystal habit |

| Process Variables | Temperature, Cooling rate, Stirring speed/type, Addition rate | Continuous (e.g., 20°C to 80°C) | Crystal size distribution, yield, reproducibility |

| Setup Variables | Vessel type (vial vs. microtiter plate), Scale (mg to g) | Discrete | Heat/mass transfer, discovery relevance to scale-up |

Protocol 3.1: Mapping a High-Throughput Cocrystal Screening Design Space

- Factor Selection: Choose API, 5-10 GRAS coformers, 3-4 solvents (diverse polarity), and 2 crystallization methods (slow evaporation, liquid-assisted grinding).

- Define Boundaries: Set solvent volumes (50-200 µL for microtiter plates), stoichiometric ranges (API:Coformer 1:1 to 1:3), and temperature (ambient to 40°C).

- Establish a Base Design: Create a sparse but space-filling initial dataset (e.g., via Latin Hypercube Sampling) of 20-50 experiments to "seed" the AL model.

- Encode for ML: Represent each experiment as a numerical feature vector (e.g., one-hot encoding for solvents, normalized values for continuous factors).

- Integrate Prior Knowledge: Manually exclude known incompatible conditions (e.g., solvents that degrade the API) to focus the design space.
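The seeding and encoding steps can be sketched with the standard library alone. The bounds mirror the protocol (50-200 µL, 1:1 to 1:3 stoichiometry, ambient to 40°C, with ambient assumed to be 20°C); the solvent names and all function names are hypothetical. In practice a library sampler such as `scipy.stats.qmc.LatinHypercube` could replace the hand-written one.

```python
import random

SOLVENTS = ["ethanol", "acetonitrile", "ethyl acetate"]  # hypothetical choices
BOUNDS = {
    "volume_uL": (50.0, 200.0),
    "coformer_equiv": (1.0, 3.0),   # API:coformer from 1:1 to 1:3
    "temperature_C": (20.0, 40.0),  # ambient assumed to be 20 C
}


def latin_hypercube(n_samples, n_dims, rng):
    """Unit-cube LHS: each dimension is cut into n_samples strata, each used exactly once."""
    columns = []
    for _ in range(n_dims):
        strata = list(range(n_samples))
        rng.shuffle(strata)
        columns.append([(s + rng.random()) / n_samples for s in strata])
    return [list(point) for point in zip(*columns)]


def build_seed_design(n_samples, seed=0):
    """Scale unit-cube samples to factor ranges; the last coordinate selects a solvent."""
    rng = random.Random(seed)
    design = []
    for row in latin_hypercube(n_samples, len(BOUNDS) + 1, rng):
        exp = {name: lo + u * (hi - lo)
               for (name, (lo, hi)), u in zip(BOUNDS.items(), row)}
        index = min(int(row[-1] * len(SOLVENTS)), len(SOLVENTS) - 1)
        exp["solvent"] = SOLVENTS[index]
        design.append(exp)
    return design


def encode(exp):
    """Feature vector: min-max normalized continuous factors, then one-hot solvent."""
    continuous = [(exp[name] - lo) / (hi - lo) for name, (lo, hi) in BOUNDS.items()]
    one_hot = [1.0 if exp["solvent"] == s else 0.0 for s in SOLVENTS]
    return continuous + one_hot


seed_design = build_seed_design(20)
features = [encode(exp) for exp in seed_design]
```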

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for High-Throughput Materials Synthesis

| Item/Category | Function & Rationale | Example Product/Brand |

|---|---|---|

| High-Throughput Reaction Platform | Enables parallel synthesis of hundreds of discrete material samples under controlled conditions. | Chemspeed SWING, Unchained Labs Junior, custom robotic fluid handlers. |

| Automated Liquid Handling System | Precisely dispenses solvents, reagents, and APIs in µL to mL volumes for reproducibility and miniaturization. | Hamilton MICROLAB STAR, Tecan Fluent, Opentrons OT-2. |

| Multi-Well Crystallization Plates | Provides individual, chemically resistant vessels for parallel crystallization experiments. | 96-well or 384-well plates with clear polymer or glass inserts (e.g., MiTeGen CrystalQuick). |

| Characterization Plate Reader | Enables in-situ or rapid ex-situ measurement of key properties directly in multi-well plates. | Polymorph screening via parallel PXRD (e.g., Bruker D8 Discover with MYTHEN2 detector), Raman microscopy. |

| Chemical Databases & Software | Provides digital catalogs of coformers/solvents and software to design experiments and manage data. | Cambridge Structural Database (CSD), Merck Solvent Guide, scikit-learn or Dragonfly for AL design. |

Visualization of the Active Learning Cycle Workflow

Title: Active Learning Cycle for Materials Optimization

Visualization of Multi-Objective Optimization in Design Space

Title: Mapping Design Space to Multi-Objective Outcomes

In the broader context of active learning cycles for experimental materials synthesis, selecting and training the initial surrogate model is the pivotal step that transitions from human-driven intuition to an iterative, AI-guided experimentation loop. The surrogate model acts as a computationally efficient proxy for expensive or time-consuming laboratory experiments, predicting material properties or synthesis outcomes based on available data. A well-chosen initial model sets the foundation for efficient exploration of the chemical and parameter space, optimizing the allocation of resources in subsequent active learning cycles. This step directly addresses the core challenge in materials and drug development: maximizing information gain while minimizing costly experimental trials.

Model Selection: Comparative Analysis

The choice between models like Gaussian Processes (GPs) and Bayesian Neural Networks (BNNs) hinges on dataset size, dimensionality, and the desired uncertainty quantification. The following table summarizes key quantitative benchmarks from recent literature.

Table 1: Comparative Performance of Initial Surrogate Models for Materials Science Applications

| Model Type | Optimal Dataset Size (Initial Pool) | Typical Training Time (for ~1000 samples) | Uncertainty Quantification | Interpretability | Sample Efficiency | Key Reference (Year) |

|---|---|---|---|---|---|---|

| Gaussian Process (GP) | 50 - 500 points | Minutes to 1 hour | Intrinsic (posterior variance) | High (kernel insights) | Excellent | J. Mater. Chem. A, 2023 |

| Bayesian Neural Network (BNN) | 500 - 5000+ points | Hours to days | Approximate (via dropout, ensembles, MCMC) | Moderate to Low | Good (with sufficient data) | npj Comput. Mater., 2024 |

| Sparse / Variational GP | 500 - 10,000 points | 30 mins to 2 hours | Approximate (reduced fidelity) | Moderate | Very Good | Digit. Discov., 2023 |

| Random Forest (Baseline) | 100 - 5000 points | Seconds to minutes | Approximate (e.g., jackknife) | Moderate (feature importance) | Good | ACS Cent. Sci., 2023 |

Data synthesized from recent benchmarking studies on organic photovoltaic, perovskite, and catalytic material datasets.

Experimental Protocols for Model Training & Validation

Protocol 3.1: Data Preprocessing for Surrogate Model Training

Objective: To transform raw experimental data into a format suitable for surrogate model training, ensuring robustness and predictive performance.

- Feature Engineering: Encode categorical variables (e.g., solvent type, catalyst) using one-hot encoding. Standardize continuous variables (e.g., temperature, concentration) and target properties (e.g., yield, bandgap) to have zero mean and unit variance.

- Train-Validation-Test Split: For the initial seed dataset (D_initial), apply a 70-15-15 stratified split. Stratification ensures proportional representation of different experimental conditions or (binned) outcome ranges across splits.

- Handling Missing Data: For datasets with missing feature values, use multivariate imputation (e.g., K-Nearest Neighbors imputation) based on similar experiments in the seed dataset. Flag imputed values for potential model uncertainty inflation.
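As a rough illustration of the stratified 70-15-15 split, the sketch below groups records by a stratum key (for example the solvent, or a binned outcome) and splits each group separately. The function name and record layout are assumptions; with scikit-learn available, `train_test_split(..., stratify=...)` applied twice is a common alternative.

```python
import random
from collections import defaultdict


def stratified_split(records, key, fractions=(0.70, 0.15, 0.15), seed=0):
    """Split records into train/validation/test, preserving stratum proportions.

    `key` maps a record to its stratum label. Rounding is applied per stratum,
    so very small strata may deviate from the nominal fractions.
    """
    rng = random.Random(seed)
    groups = defaultdict(list)
    for record in records:
        groups[key(record)].append(record)

    train, val, test = [], [], []
    for members in groups.values():
        rng.shuffle(members)
        n_train = round(fractions[0] * len(members))
        n_val = round(fractions[1] * len(members))
        train += members[:n_train]
        val += members[n_train:n_train + n_val]
        test += members[n_train + n_val:]
    return train, val, test


# Hypothetical seed dataset: 40 runs in solvent A, 60 runs in solvent B.
runs = ([{"id": i, "solvent": "A"} for i in range(40)]
        + [{"id": i, "solvent": "B"} for i in range(40, 100)])
train, val, test = stratified_split(runs, key=lambda r: r["solvent"])
```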

Protocol 3.2: Training a Gaussian Process Surrogate Model

Objective: To construct a GP model that provides predictions with inherent uncertainty estimates.

- Kernel Selection: Start with a composite kernel: a Matérn 5/2 kernel (`Matern(nu=2.5)` in scikit-learn) for continuous parameters plus a `WhiteKernel` to model experimental noise. For categorical features, multiply by a `ConstantKernel`.

- Model Instantiation: Use `GaussianProcessRegressor` (from scikit-learn) or GPyTorch. In scikit-learn, set `n_restarts_optimizer=10` to reduce the risk of converging to a poor local optimum of the log-marginal-likelihood.

- Hyperparameter Optimization: Optimize kernel hyperparameters (length scales, noise variance) by maximizing the log-marginal-likelihood using the L-BFGS-B optimizer.

- Validation: Use the held-out validation set to calculate the Root Mean Square Error (RMSE) and the Negative Log Predictive Density (NLPD), which assesses both predictive accuracy and uncertainty calibration.
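For reference, the two validation metrics can be computed as below, assuming the model returns a Gaussian predictive mean and standard deviation for each validation point. This is a minimal standard-library sketch; the function names are illustrative.

```python
import math


def rmse(y_true, mu):
    """Root mean square error of the predictive means."""
    return math.sqrt(sum((y - m) ** 2 for y, m in zip(y_true, mu)) / len(y_true))


def nlpd(y_true, mu, sigma):
    """Mean negative log predictive density under Gaussian predictions N(mu, sigma^2)."""
    total = 0.0
    for y, m, s in zip(y_true, mu, sigma):
        var = s ** 2
        total += 0.5 * math.log(2 * math.pi * var) + (y - m) ** 2 / (2 * var)
    return total / len(y_true)


# Same predictive means, different claimed uncertainty (illustrative numbers).
y_val = [0.0, 1.0]
mu_val = [0.5, 0.5]
calibrated = nlpd(y_val, mu_val, [0.5, 0.5])
overconfident = nlpd(y_val, mu_val, [0.01, 0.01])
```

Both predictions have the same RMSE, but the overconfident one receives a far larger NLPD, which is why NLPD is useful for checking uncertainty calibration in addition to accuracy.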

Protocol 3.3: Training a Bayesian Neural Network Surrogate Model

Objective: To construct a BNN capable of learning complex relationships in larger datasets with approximate uncertainty.

- Architecture Definition: Design a fully-connected network with 2-3 hidden layers (e.g., 128-64-32 neurons). Use `tanh` or `swish` activation functions.

- Bayesian Implementation: Implement Bayesian layers using Monte Carlo Dropout (`tf.keras.layers.Dropout` with a dropout rate of 0.1-0.3, kept active at training and inference) or a variational inference framework (e.g., TensorFlow Probability `DenseVariational` layers).

- Loss Function & Training: Use an evidence lower bound (ELBO) loss for variational methods, or mean squared error (MSE) loss with L2 regularization for dropout-based BNNs. Train for 1000-5000 epochs with an early stopping callback (patience=100) monitoring validation loss.

- Uncertainty Estimation: At inference, perform `T=50` stochastic forward passes with dropout enabled. The mean of the predictions is the final prediction; the standard deviation is the epistemic uncertainty estimate.
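The uncertainty-estimation step reduces to aggregating repeated stochastic predictions. The sketch below shows that aggregation with a toy stand-in for the network: `make_toy_dropout_model` is a hypothetical linear model with inverted dropout on its inputs, used only so the example runs without a deep learning framework.

```python
import random
import statistics


def mc_dropout_predict(stochastic_forward, x, n_passes=50):
    """Run T stochastic forward passes; return (mean prediction, std as uncertainty)."""
    samples = [stochastic_forward(x) for _ in range(n_passes)]
    return statistics.mean(samples), statistics.stdev(samples)


def make_toy_dropout_model(weights, rate, rng):
    """Toy stand-in for a dropout network: each input term is dropped with probability `rate`."""
    keep = 1.0 - rate

    def forward(x):
        # Inverted dropout: surviving terms are rescaled by 1/keep.
        return sum(w * xi / keep for w, xi in zip(weights, x) if rng.random() < keep)

    return forward


model = make_toy_dropout_model([1.0, 2.0], rate=0.2, rng=random.Random(0))
prediction, uncertainty = mc_dropout_predict(model, [3.0, 4.0], n_passes=50)
```

With a real Keras model, the stochastic forward pass would typically be a call with dropout left active (for example `model(x, training=True)`).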

Visualization of the Model Integration Workflow

Diagram 1: Surrogate Model Training Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Computational Tools & Resources for Surrogate Modeling

| Tool/Resource | Function in Protocol | Example/Provider | Key Benefit for Research |

|---|---|---|---|

| GP Implementation Library | Provides core algorithms for GP regression, kernel functions, and optimization. | GPyTorch, scikit-learn GaussianProcessRegressor | Accelerates development with robust, peer-reviewed code; enables GPU acceleration. |

| BNN/Probabilistic DL Framework | Enables construction and training of neural networks with uncertainty estimates. | TensorFlow Probability, Pyro, PyMC3 | Integrates Bayesian layers seamlessly into deep learning workflows. |

| Automated Hyperparameter Optimization | Systematically searches for optimal model settings (e.g., learning rate, network depth). | Optuna, Ray Tune, scikit-optimize | Reduces manual tuning time and improves model performance reproducibly. |

| Uncertainty Calibration Metrics | Quantifies the reliability of model-predicted uncertainties. | scikit-learn calibration curves, netcal library | Critical for trusting the model's uncertainty estimates in downstream active learning. |

| High-Performance Computing (HPC) / Cloud GPU | Provides computational power for training BNNs or GPs on large datasets. | Google Cloud AI Platform, AWS SageMaker, local GPU cluster | Makes complex, data-hungry models feasible within realistic timeframes. |

| Materials Science Databank | Source of initial seed data or pretrained model weights for transfer learning. | Matbench, OMDb, The Materials Project | Jumpstarts modeling by providing relevant, structured data, improving sample efficiency. |

Within an active learning cycle for experimental materials synthesis and drug development, the acquisition function is the critical decision engine. It uses the surrogate model's predictions to select the next experiment to perform, balancing exploration of uncertain regions with exploitation of known promising areas. This Application Note details the protocols for implementing three prominent strategies: Expected Improvement (EI), Upper Confidence Bound (UCB), and Entropy Search (ES).

Table 1: Quantitative Comparison of Acquisition Strategies

| Feature | Expected Improvement (EI) | Upper Confidence Bound (UCB) | Entropy Search (ES) |

|---|---|---|---|

| Core Principle | Measures the expected value of improvement over the current best observation. | Optimistically estimates the upper bound of the objective function using a confidence interval. | Seeks to maximally reduce the uncertainty about the location of the global optimum. |

| Key Formula | \( EI(x) = \mathbb{E}[\max(f(x) - f(x^+), 0)] \) | \( UCB(x) = \mu(x) + \kappa \sigma(x) \) | \( ES(x) = H[p(x_* \mid \mathcal{D})] - \mathbb{E}_{p(f(x) \mid \mathcal{D})}[H[p(x_* \mid \mathcal{D} \cup \{(x, f(x))\})]] \) |

| Balance Parameter | \(\xi\) (exploration-exploitation trade-off) | \(\kappa\) (explicit exploration weight) | Implicit, via information gain. |

| Computational Cost | Low | Low | High (requires approximation) |

| Best Suited For | Efficient global optimization, finding the best possible result. | Tractable tuning, bandit problems, cumulative regret minimization. | Complex, multi-modal landscapes where pinpointing the optimum is crucial. |

| Primary Goal | Exploit with measured exploration. | Explicit, tunable exploration. | Informative exploration to locate optimum. |

Experimental Protocols

Protocol 3.1: Implementing Expected Improvement for Catalyst Screening

Objective: To identify the synthesis condition (Temperature, Precursor Ratio) that maximizes catalytic yield.

Materials: High-throughput robotic synthesis platform, parallel reactor array, GC-MS for yield analysis.

Procedure:

- Initial Design: Perform 10 experiments using a space-filling Latin Hypercube Design over the parameter space.

- Surrogate Modeling: After each cycle, train a Gaussian Process (GP) model on all accumulated data, normalizing the yield response.

- EI Calculation: For a candidate point \(x\), compute:

- \(\mu(x), \sigma(x)\) from the GP posterior.

- \(f(x^+)\) = maximum observed yield so far.

- \(z = \frac{\mu(x) - f(x^+) - \xi}{\sigma(x)}\)

- \(EI(x) = (\mu(x) - f(x^+) - \xi) \Phi(z) + \sigma(x) \phi(z)\), where \(\Phi\) and \(\phi\) are the CDF and PDF of the standard normal distribution. Set \(\xi = 0.01\).

- Selection: Evaluate EI over a dense, discrete grid of candidate conditions. Select the point with maximal EI for the next experiment.

- Iteration: Run the synthesis and characterization at the selected condition. Update the dataset and repeat from Step 2 for 20 cycles.
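The EI calculation and selection steps above can be sketched in a few lines. The closed-form expression follows the formula in the protocol; the function names and the `posterior` callable (returning the GP mean and standard deviation for a candidate) are assumptions for illustration. For a zero predictive standard deviation the sketch returns the limiting value max(μ − f(x⁺) − ξ, 0).

```python
import math


def normal_pdf(z):
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def normal_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def expected_improvement(mu, sigma, f_best, xi=0.01):
    """Closed-form EI for maximization under a Gaussian posterior N(mu, sigma^2)."""
    improvement = mu - f_best - xi
    if sigma <= 0.0:
        # No posterior uncertainty: EI collapses to the deterministic improvement.
        return max(improvement, 0.0)
    z = improvement / sigma
    return improvement * normal_cdf(z) + sigma * normal_pdf(z)


def select_next_ei(candidates, posterior, f_best, xi=0.01):
    """Pick the candidate with maximal EI; posterior(x) must return (mu, sigma)."""
    return max(candidates,
               key=lambda x: expected_improvement(*posterior(x), f_best, xi))


# Hypothetical GP posterior on three grid points (normalized yield).
toy_posterior = {0: (0.50, 0.0), 1: (0.45, 0.20), 2: (0.20, 0.05)}
chosen = select_next_ei([0, 1, 2], toy_posterior.get, f_best=0.50)
```

In this toy grid the second point is selected: its mean is slightly below the incumbent, but its larger uncertainty gives it the highest expected improvement.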

Protocol 3.2: Using Upper Confidence Bound for Polymer Reaction Optimization

Objective: To optimize polymer molecular weight while minimizing reaction time (a multi-objective problem scalarized into a single reward).

Materials: Automated flow chemistry setup, in-line GPC/SEC, control software with API.

Procedure:

- Reward Definition: Define a single objective \(R = \text{MW} - \lambda \cdot \text{Time}\), where \(\lambda\) is a weighting factor.

- Initialization: Conduct 8 random initialization experiments across the parameter space (flow rate, catalyst concentration, temperature).

- GP Training: Fit a GP model to the reward data.

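- UCB Evaluation: For each candidate setting \(x\) in the search space, calculate:

- \(UCB(x) = \mu(x) + \kappa_t \sigma(x)\)

- Use a time-varying schedule for \(\kappa_t\) (e.g., \(\kappa_t = 0.2 \cdot \log(2t)\)). Note that this schedule grows slowly with the iteration count \(t\), so the exploration weight increases as data accumulates; if early exploration followed by later exploitation is the goal, use a decaying schedule instead.

- Experiment Selection: Choose the parameter set \(x\) that maximizes \(UCB(x)\).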

- Automated Execution: Send the selected parameters to the flow reactor controller via API. Acquire the resulting reward from in-line analytics.

- Active Learning Loop: Append the new data and retrain the GP model. Iterate for 30 cycles or until reward convergence.
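A minimal sketch of the reward, schedule, and selection steps follows. The schedule constant 0.2 is the example value from the protocol; the function names, the `posterior` callable, and the toy posterior values are illustrative assumptions.

```python
import math


def reward(molecular_weight, reaction_time, lam):
    """Scalarized objective R = MW - lambda * Time (units and lambda are user-defined)."""
    return molecular_weight - lam * reaction_time


def kappa(t, scale=0.2):
    """Example schedule kappa_t = scale * log(2t) for iteration t >= 1; it increases with t."""
    return scale * math.log(2 * t)


def ucb(mu, sigma, t):
    return mu + kappa(t) * sigma


def select_next_ucb(candidates, posterior, t):
    """Pick the candidate maximizing UCB; posterior(x) must return (mu, sigma)."""
    return max(candidates, key=lambda x: ucb(*posterior(x), t))


# Hypothetical posterior over two settings of the normalized reward.
toy_posterior = {"a": (1.00, 0.10), "b": (0.95, 0.30)}
early_choice = select_next_ucb(["a", "b"], toy_posterior.get, t=1)
late_choice = select_next_ucb(["a", "b"], toy_posterior.get, t=30)
```

With this schedule the well-characterized setting "a" wins at t = 1, while the more uncertain setting "b" wins at t = 30, which shows how a growing \(\kappa_t\) shifts weight toward uncertain regions over time.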

Protocol 3.3: Applying Entropy Search for Drug Candidate Formulation

Objective: To identify the excipient composition that maximizes drug solubility, treating the formulation landscape as expensive and highly non-linear.

Materials: Liquid handling robot for formulation preparation, UV-Vis plate reader for solubility assay.

Procedure:

- Model Specification: Use a GP with a Matérn 5/2 kernel to model log(solubility).

- Approximation Method: Implement a Monte-Carlo approximation of ES:

- From the GP posterior, draw 1000 samples of the function over a set of representer points in the formulation space.

- For each sample, identify the optimum location \(x_*\).

- This generates an approximate discrete distribution over the optimum location, \(p(x_*)\).

- Compute its entropy \(H[p(x_*)]\).

- Information Gain Calculation: For a candidate experiment at point \(x\):

- For each GP function sample, simulate an outcome \(y\) based on the predicted mean and noise.

- Update the GP belief with the simulated data \((x, y)\) (conceptually).

- Recompute the distribution over the optimum \(p(x_* \mid \mathcal{D} \cup \{(x, y)\})\) and its entropy for that sample.

- The average change in entropy across all samples is the predicted information gain (ES acquisition value).

- Selection & Experiment: Choose the formulation composition with the highest ES value. Prepare and test it using the robotic platform.

- Iteration: Update the GP with the real result. Repeat the ES calculation for 15-20 cycles, focusing the search on refining the optimum's location.
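The first half of the Monte-Carlo approximation (estimating \(p(x_*)\) and its entropy from posterior samples) can be sketched as follows. It assumes the GP function samples are already available as lists of values over the representer points; drawing those samples and the information-gain step are not shown. The function names are illustrative.

```python
import math
from collections import Counter


def optimum_distribution(function_samples):
    """Estimate p(x_*) over representer points.

    function_samples[s][j] is the value of posterior sample s at representer point j.
    Returns {point index: fraction of samples whose maximum lies at that point}.
    """
    counts = Counter(
        max(range(len(sample)), key=sample.__getitem__) for sample in function_samples
    )
    n = len(function_samples)
    return {j: c / n for j, c in counts.items()}


def entropy(p):
    """Shannon entropy (in nats) of a discrete distribution given as {outcome: probability}."""
    return -sum(q * math.log(q) for q in p.values() if q > 0)


# Four toy "posterior samples" over three representer points.
samples = [[0.0, 1.0, 0.0], [0.0, 2.0, 1.0], [3.0, 0.0, 0.0], [0.0, 5.0, 1.0]]
p_opt = optimum_distribution(samples)
h_opt = entropy(p_opt)
```

The ES acquisition value for a candidate would then be this entropy minus the average entropy recomputed after conditioning on simulated outcomes at that candidate.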

Diagrams

Title: Expected Improvement (EI) Active Learning Workflow

Title: Decision Guide for Acquisition Function Selection

The Scientist's Toolkit

Table 2: Research Reagent Solutions for Active Learning-Driven Synthesis

| Item/Category | Function in Active Learning Cycle | Example Product/Technique |

|---|---|---|

| High-Throughput Robotic Synthesis Platform | Enables rapid, precise, and reproducible execution of the candidate experiments selected by the acquisition function. | Chemspeed Technologies SWING, Unchained Labs Junior. |

| Automated Characterization & Analytics | Provides fast, quantitative feedback (the objective function value) to close the active learning loop. | In-line HPLC/GC, plate readers (UV-Vis, fluorescence), automated parallel LC-MS. |

| Gaussian Process Modeling Software | Core software for building the probabilistic surrogate model that underpins EI, UCB, and ES. | GPyTorch, scikit-learn (GaussianProcessRegressor), Trieste. |

| Bayesian Optimization Frameworks | Integrated software packages that implement acquisition functions, surrogate models, and optimization loops. | BoTorch, Ax, Dragonfly. |

| Laboratory Information Management System (LIMS) | Critical for structuring, storing, and retrieving the experimental data (parameters, outcomes, metadata) for model training. | Benchling, Labguru, self-hosted solutions. |

| Chemical Libraries & Reagents | Well-characterized, diverse starting materials (e.g., ligand libraries, excipient sets) that define the search space. | COMBI libraries, catalyst kits, pharmaceutical excipient kits from Sigma-Aldrich, Avantor. |

The integration of robotic synthesis and high-throughput characterization platforms constitutes the critical experimental execution phase within a closed-loop, active learning-driven materials research framework. This step directly follows the computational design and proposal generation steps, physically creating and evaluating candidate materials to generate quantitative data for model refinement. This Application Note provides detailed protocols for leveraging these automated platforms to accelerate discovery in functional materials, including porous frameworks, organic semiconductors, and solid-state electrolytes.

Platform Architectures and Data Flow

Automated materials discovery platforms combine synthesis robots with inline or rapid offline characterization tools, all coordinated by a central Laboratory Information Management System (LIMS). The workflow is designed for minimal human intervention between synthesis and data generation.

Diagram 1: Automated Closed-Loop Materials Discovery Workflow

Detailed Experimental Protocols

Protocol: Automated Parallel Synthesis of Metal-Organic Frameworks (MOFs) via Solvothermal Methods

Objective: To synthesize an array of MOF candidates in a 96-well plate format using a liquid-handling robot and a parallel solvothermal reactor.

Materials & Equipment:

- Automated liquid handling system (e.g., Hamilton STARlet, Opentrons OT-2).

- Parallel pressurized solvothermal reactor (e.g., Parr Instrument Co. 96-well array).

- LIMS software (e.g., ChemSpeed Suite, Momentum).

- Metal salt solutions (0.1 M in DMF): Zn(NO₃)₂, Cu(NO₃)₂, ZrOCl₂.

- Linker solutions (0.1 M in DMF): Terephthalic acid, 2-Methylimidazole, Biphenyl-4,4'-dicarboxylic acid.

- Modulator solutions (1.0 M in DMF): Formic acid, Acetic acid.

- Solvent: N,N-Dimethylformamide (DMF).

Procedure:

- LIMS Initialization: The active learning algorithm uploads a `.csv` file specifying reagent combinations and volumes for each well to the LIMS.

- Plate Layout: Load a 96-well reactor plate onto the deck of the liquid handler.

- Dispensing: The robot sequentially aspirates and dispenses:

- a) 200 µL of selected metal salt solution.

- b) 200 µL of selected linker solution.

- c) 0-50 µL of modulator solution (variable per design).

- d) DMF to a final uniform volume of 500 µL.

- Sealing: Automatically seal the plate with a pressure-tolerant septum.

- Reaction: Transfer the plate to the parallel reactor. Heat to 120°C for 24 hours with orbital shaking at 300 rpm.

- Work-up: After cooling, the robot pierces the septum and performs automatic solvent decanting. Three wash cycles with fresh DMF (500 µL) are performed, followed by three wash cycles with methanol for solvent exchange.

- Activation: Transfer the plate to a vacuum oven for final activation at 100°C under dynamic vacuum.
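As an illustration of the worklist generated in the LIMS initialization step, the sketch below computes the DMF top-up volume for each well from the fixed dispensing volumes in the procedure and serializes the result as CSV. The column names and well designs are hypothetical; an actual LIMS or liquid handler will define its own worklist format.

```python
import csv
import io

FINAL_VOLUME_UL = 500
METAL_VOLUME_UL = 200
LINKER_VOLUME_UL = 200
MAX_MODULATOR_UL = 50


def build_worklist(designs):
    """Add fixed volumes and the DMF top-up needed to reach 500 uL in every well."""
    rows = []
    for d in designs:
        modulator = d["modulator_uL"]
        if not 0 <= modulator <= MAX_MODULATOR_UL:
            raise ValueError(f"well {d['well']}: modulator volume {modulator} uL out of range")
        dmf = FINAL_VOLUME_UL - METAL_VOLUME_UL - LINKER_VOLUME_UL - modulator
        rows.append({
            "well": d["well"],
            "metal": d["metal"], "metal_uL": METAL_VOLUME_UL,
            "linker": d["linker"], "linker_uL": LINKER_VOLUME_UL,
            "modulator": d["modulator"], "modulator_uL": modulator,
            "DMF_uL": dmf,
        })
    return rows


def worklist_to_csv(rows):
    """Serialize the worklist to CSV text (column names are illustrative)."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


example = build_worklist([
    {"well": "A1", "metal": "Zn(NO3)2", "linker": "Terephthalic acid",
     "modulator": "Formic acid", "modulator_uL": 50},
    {"well": "A2", "metal": "ZrOCl2", "linker": "Terephthalic acid",
     "modulator": "Acetic acid", "modulator_uL": 0},
])
csv_text = worklist_to_csv(example)
```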

Protocol: Inline High-Performance Liquid Chromatography (HPLC) Analysis of Organic Electronic Materials

Objective: To directly analyze reaction outcomes from an automated flow synthesis reactor using inline HPLC, providing immediate purity and yield data.

Materials & Equipment:

- Automated continuous flow synthesis platform (e.g., Vapourtec R-Series).

- Inline HPLC system with automated injector (e.g., Agilent InfinityLab).

- Two-position, six-port switching valve.

- Appropriate HPLC columns (C18 reverse phase) and mobile phases (e.g., Acetonitrile/Water gradients).

Procedure:

- System Configuration: Connect the outlet of the flow reactor's back-pressure regulator to a six-port switching valve, which directs flow either to waste or to the HPLC sample loop.

- Synchronization: Program the reactor control software and the HPLC sequence to operate synchronously via the LIMS.

- Sampling: At a predetermined time point (t) after a change in reaction parameters (e.g., temperature, residence time), the LIMS triggers the switching valve.

- Injection: The reactor effluent fills a 20 µL sample loop for 30 seconds, after which the valve switches back. The contents of the loop are then injected onto the HPLC column.

- Analysis: A 10-minute gradient method separates the product from starting materials and byproducts.

- Data Processing: The HPLC software integrates peaks and quantifies yield against a calibration curve. The result (Yield %) is automatically parsed and appended to the experiment's metadata in the database.
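The quantification in the data processing step can be sketched as a linear external-standard calibration. This assumes a linear detector response and a known theoretical product concentration at full conversion; the function names, units, and calibration values are illustrative and not taken from any HPLC vendor software.

```python
def fit_calibration(concentrations, peak_areas):
    """Ordinary least-squares line: area = slope * concentration + intercept."""
    n = len(concentrations)
    mean_c = sum(concentrations) / n
    mean_a = sum(peak_areas) / n
    s_cc = sum((c - mean_c) ** 2 for c in concentrations)
    s_ca = sum((c - mean_c) * (a - mean_a)
               for c, a in zip(concentrations, peak_areas))
    slope = s_ca / s_cc
    intercept = mean_a - slope * mean_c
    return slope, intercept


def yield_percent(peak_area, slope, intercept, theoretical_conc):
    """Convert an integrated product peak area to percent yield."""
    measured_conc = (peak_area - intercept) / slope
    return 100.0 * measured_conc / theoretical_conc


# Hypothetical standards (mg/mL) and their integrated peak areas.
slope, intercept = fit_calibration([0.1, 0.2, 0.4], [100.0, 200.0, 400.0])
example_yield = yield_percent(150.0, slope, intercept, theoretical_conc=0.3)
```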

Key Research Reagent Solutions and Materials

Table 1: Essential Toolkit for Automated Materials Synthesis & Characterization

| Item | Function & Relevance |

|---|---|

| High-Throughput Reactor Plates | Chemically resistant, temperature-stable 24-, 48-, or 96-well plates for parallel synthesis. Enable scale-out experimentation. |

| Automated Liquid Handling Tips | Disposable, filtered tips to prevent cross-contamination and robotic pipette damage during reagent transfer. |

| Multi-Component Stock Solutions | Pre-mixed precursors at defined concentrations in compatible solvents to minimize robotic dispensing steps. |

| Inline IR/UV-Vis Flow Cells | Enable real-time monitoring of reaction kinetics and intermediate detection in flow synthesis platforms. |

| Automated Sample Mounts for PXRD | Standardized pin mounts or capillary holders compatible with robotic arms for rapid X-ray diffraction analysis. |

| Data Parsing Scripts (Python) | Custom scripts to convert raw instrument files (.raw, .uxd) into structured data (.csv, .json) for the database. |

Data Management and Integration Specifications

Quantitative output from characterization must be structured for machine learning. Key parameters for different material classes are summarized below.

Table 2: Key Characterization Metrics for Active Learning Data Labeling

| Material Class | Primary Synthesis Output Metric | Key Characterization Metrics (Labeled Data) |

|---|---|---|

| Metal-Organic Frameworks | Crystalline Yield (Binary: Yes/No) | BET Surface Area (m²/g), Pore Volume (cm³/g), Topology (Categorical) |

| Organic Photovoltaics | Reaction Conversion (%) | HOMO/LUMO Level (eV), Optical Bandgap (eV), Photoluminescence Quantum Yield (%) |

| Solid-State Ionic Conductors | Phase Purity (% by XRD) | Ionic Conductivity (S/cm) at 25°C, Activation Energy (eV) |

| Heterogeneous Catalysts | Metal Loading (wt%) | Turnover Frequency (h⁻¹), Selectivity (%) (for target product) |

Pathway for Failed Experiment Analysis

A critical function of integration is the automated diagnosis of synthesis or characterization failures, which provides valuable labels for the active learning model.

Diagram 2: Automated Fault Analysis Decision Tree

Case Study 1: Active Learning for pH-Sensitive Polymer Discovery

Application Note

Within an active learning cycle for experimental materials synthesis, the goal was to rapidly identify novel pH-sensitive polymers for tumor-targeted drug delivery. An initial library of 50 candidate polymers, varying in the monomer ratio of 2-(diethylamino)ethyl methacrylate (DEAEMA) to polyethylene glycol methyl ether methacrylate (PEGMA), was computationally designed. A Bayesian optimization active learning model, trained on a small initial dataset of polymer pKa and hydrodynamic diameter, guided synthesis and testing over only 12 iterations to identify an optimal candidate with a sharp transition at pH 6.5.

Table 1: Quantitative Results from Active Learning Polymer Screening

| Polymer ID (DEAEMA:PEGMA) | Predicted pKa (Iteration 1) | Experimental pKa (Final) | Hydrodynamic Diameter (pH 7.4) | Hydrodynamic Diameter (pH 6.5) | Drug Loading Efficiency (Doxorubicin) |

|---|---|---|---|---|---|

| 70:30 (Initial Best Guess) | 6.8 | 7.1 | 45 nm | 220 nm | 8.5% |

| 65:35 (AL Candidate) | 6.5 | 6.5 | 40 nm | 350 nm | 12.1% |

| 75:25 (Final AL Optimal) | 6.4 | 6.4 | 55 nm | 500 nm (aggregation) | 15.3% |

Experimental Protocol: Synthesis and Characterization of pH-Sensitive Copolymers

Materials: 2-(diethylamino)ethyl methacrylate (DEAEMA), polyethylene glycol methyl ether methacrylate (PEGMA, Mn 500), azobisisobutyronitrile (AIBN), anhydrous toluene, dialysis tubing (MWCO 3.5 kDa).

Procedure:

- Polymerization: In a Schlenk flask, combine DEAEMA (desired molar ratio), PEGMA, and AIBN (1 mol% to total monomer) in anhydrous toluene (50% w/v total monomer). Purge with N₂ for 20 minutes.

- Reaction: Heat the reaction mixture to 70°C with stirring for 18 hours under a nitrogen atmosphere.

- Purification: Cool the mixture to room temperature. Precipitate the polymer into cold diethyl ether (10x volume). Centrifuge (5000 rpm, 10 min) and decant the supernatant. Redissolve the pellet in a minimal amount of acetone and repeat precipitation twice. Dry the white solid under vacuum overnight.

- Characterization: Determine pKa by potentiometric titration in 0.15 M NaCl. Measure hydrodynamic diameter by dynamic light scattering (DLS) in phosphate buffers at pH 7.4 and 6.5 at 25°C.

- Drug Loading: Employ a dialysis method. Dissolve polymer and doxorubicin (10% w/w relative to polymer) in dimethyl sulfoxide. Dialyze against pH 7.4 PBS for 24 hours. Determine loading efficiency via UV-Vis spectroscopy of the dialysis medium.
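
The quantities for the polymerization step can be computed from the target molar ratio. The sketch below assumes a 10 mmol total-monomer batch (a hypothetical scale, not given in the protocol) and treats the 50% w/v figure as grams of monomer per 100 mL of toluene. Molar masses are 185.26 g/mol for DEAEMA and 164.21 g/mol for AIBN; PEGMA uses the Mn of 500 from the Materials list.

```python
DEAEMA_MW = 185.26  # g/mol, C10H19NO2
PEGMA_MN = 500.0    # g/mol, number-average value from the Materials list
AIBN_MW = 164.21    # g/mol, C8H12N4

def recipe(deaema_parts, pegma_parts, total_monomer_mmol=10.0, aibn_mol_pct=1.0):
    """Masses and solvent volume for one batch (batch size is an assumption)."""
    deaema_mmol = total_monomer_mmol * deaema_parts / (deaema_parts + pegma_parts)
    pegma_mmol = total_monomer_mmol - deaema_mmol
    aibn_mmol = total_monomer_mmol * aibn_mol_pct / 100.0
    deaema_g = deaema_mmol * DEAEMA_MW / 1000.0
    pegma_g = pegma_mmol * PEGMA_MN / 1000.0
    return {
        "DEAEMA_g": deaema_g,
        "PEGMA_g": pegma_g,
        "AIBN_mg": aibn_mmol * AIBN_MW,
        # 50% w/v read as 0.5 g monomer per mL of toluene
        "toluene_mL": (deaema_g + pegma_g) / 0.5,
    }

batch = recipe(65, 35)
for name, value in batch.items():
    print(f"{name}: {value:.3f}")
```

For the 65:35 composition this gives roughly 1.20 g DEAEMA, 1.75 g PEGMA, 16.4 mg AIBN, and 5.9 mL toluene at the assumed scale.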

Diagram 1: Active learning cycle for polymer discovery.

The Scientist's Toolkit: Polymer Synthesis & Characterization

| Item | Function in Experiment |

|---|---|

| DEAEMA Monomer | Provides pH-sensitive tertiary amine groups for stimuli-responsive behavior. |

| PEGMA Monomer | Imparts "stealth" properties, reduces protein opsonization, improves solubility. |

| AIBN Initiator | Thermal free-radical initiator for the polymerization reaction. |

| Schlenk Line | Provides an inert, oxygen-free atmosphere for controlled radical polymerization. |

| Dynamic Light Scattering (DLS) | Measures hydrodynamic diameter and monitors size change with pH. |

| Potentiometric Titrator | Accurately determines the pKa of the synthesized polymer. |

Case Study 2: Active Learning-Driven Synthesis of Lipid-Polymer Hybrid Nanoparticles (LPNs)

Application Note

This study integrated an active learning loop to optimize the nanoprecipitation synthesis of LPNs for siRNA delivery. Critical process parameters (CPPs) included polymer (PLGA) concentration, lipid (DSPE-PEG) to polymer ratio, and aqueous-to-organic flow rate ratio. An active learning approach seeded by a design of experiments (DoE) and driven by a Gaussian Process model reduced the optimization from a full factorial design to 15 experiments. The model predicted an optimal formulation that achieved a particle size of 85 nm with 95% siRNA encapsulation.

Table 2: Active Learning Optimization of LPN Synthesis Parameters

| Experiment | PLGA Conc. (mg/mL) | Lipid:Polymer Ratio | Flow Rate Ratio (Aq:Org) | Predicted Size (nm) | Actual Size (nm) | PDI | siRNA Encapsulation (%) |

|---|---|---|---|---|---|---|---|

| Initial DOE 1 | 5.0 | 0.05 | 3:1 | 120 | 130 | 0.18 | 75 |

| AL Iteration 5 | 7.5 | 0.10 | 5:1 | 90 | 95 | 0.12 | 88 |

| AL Optimal | 10.0 | 0.15 | 10:1 | 82 | 85 | 0.08 | 95 |
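
A quick check of how closely the model tracked the measurements in Table 2 is the absolute and relative error between predicted and actual size. The calculation below uses only the three rows shown; the error metrics are an illustrative choice.

```python
# (experiment, predicted size in nm, actual size in nm), copied from Table 2
rows = [
    ("Initial DOE 1", 120, 130),
    ("AL Iteration 5", 90, 95),
    ("AL Optimal", 82, 85),
]

abs_error_nm = {name: abs(actual - predicted) for name, predicted, actual in rows}
rel_error_pct = {
    name: round(100 * abs(actual - predicted) / actual, 1)
    for name, predicted, actual in rows
}
mean_abs_error_nm = sum(abs_error_nm.values()) / len(rows)

print(abs_error_nm)
print(rel_error_pct)
print(f"Mean absolute error: {mean_abs_error_nm} nm")
```

The absolute error falls from 10 nm to 3 nm across the three rows, consistent with the model improving as data accumulate, although three rows are too few to draw a firm conclusion.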

Experimental Protocol: Microfluidic Synthesis of LPNs

Materials: PLGA (50:50, 7-17 kDa), DSPE-PEG2000, siRNA (targeting GFP), polyethylenimine (PEI, 10 kDa, for complexation), acetonitrile (organic phase), phosphate-buffered saline (PBS, pH 7.4, aqueous phase), microfluidic mixer (e.g., staggered herringbone design).

Procedure:

- Organic Phase: Dissolve PLGA and DSPE-PEG2000 at the target ratio in acetonitrile.

- Aqueous Phase: Dilute siRNA and PEI (at N/P ratio 5) in PBS. Incubate for 20 min to form complexes.

- Microfluidic Mixing: Load the organic and aqueous phases into separate syringes. Pump through a microfluidic mixer at a total flow rate of 12 mL/min, maintaining the aqueous-to-organic flow rate ratio specified by the active learning model.

- Purification: Collect the nanoparticle suspension and immediately transfer to a rotary evaporator to remove acetonitrile. Concentrate and purify via centrifugal filtration (100 kDa MWCO).

- Characterization: Analyze particle size and PDI by DLS. Determine siRNA encapsulation efficiency using a RiboGreen assay.
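
The syringe pump setpoints for the mixing step follow directly from the total flow rate and the aqueous-to-organic ratio. The helper below is a minimal sketch; the function name is hypothetical, and the 12 mL/min total and the three ratios come from the protocol and Table 2.

```python
def phase_flow_rates(total_ml_min, aqueous_parts, organic_parts):
    """Split a total flow rate into (aqueous, organic) setpoints in mL/min."""
    total_parts = aqueous_parts + organic_parts
    aqueous = total_ml_min * aqueous_parts / total_parts
    organic = total_ml_min * organic_parts / total_parts
    return aqueous, organic

for ratio in [(3, 1), (5, 1), (10, 1)]:
    aqueous, organic = phase_flow_rates(12.0, *ratio)
    print(f"{ratio[0]}:{ratio[1]} -> aqueous {aqueous:.2f}, organic {organic:.2f} mL/min")
```

At the 10:1 ratio of the optimal formulation, the organic phase runs at only about 1.09 mL/min, so the pump's accuracy at low flow rates becomes relevant.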

Diagram 2: Active learning-controlled microfluidic synthesis of LPNs.

The Scientist's Toolkit: Nanoparticle Synthesis

| Item | Function in Experiment |

|---|---|

| Staggered Herringbone Micromixer | Induces rapid, chaotic mixing for reproducible nanoprecipitation. |

| Programmable Syringe Pumps | Precisely control flow rates of organic and aqueous phases. |

| PLGA (50:50) | Biodegradable polymer core for encapsulating and stabilizing siRNA complexes. |

| DSPE-PEG2000 | Lipid-PEG conjugate that coats the nanoparticle surface, enhancing stability and circulation time. |

| RiboGreen Assay Kit | Fluorescent nucleic acid stain for quantifying unencapsulated siRNA. |

| Centrifugal Filter (100 kDa) | Purifies nanoparticles from free polymers, lipids, and unencapsulated siRNA. |

Case Study 3: MOF Optimization for Targeted Drug Delivery

Application Note

Active learning was applied to optimize the synthesis of a zirconium-based MOF (UiO-66-NH₂) functionalized with a targeting peptide (RGD) for doxorubicin delivery. The model optimized three objectives simultaneously: high drug loading, controlled release at pH 5.5, and preserved crystallinity after functionalization. A multi-objective Bayesian optimization (MOBO) algorithm guided 20 synthetic iterations, navigating the trade-offs among these objectives to find Pareto-optimal conditions.

Table 3: MOBO Results for UiO-66-NH₂-RGD Optimization

| Synthesis Condition Set | Modulator (Acetic Acid) Eq. | RGD Coupling Time (h) | Drug Loading (wt%) | Release at pH 5.5, 48 h (%) | Crystallinity (Relative XRD Intensity) |

|---|---|---|---|---|---|

| Baseline | 100 | 6 | 12.5 | 45 | 100% |

| AL Pareto-Optimal A | 75 | 4 | 18.2 | 68 | 92% |

| AL Pareto-Optimal B | 150 | 2 | 14.1 | 85 | 85% |
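
What "Pareto-optimal" means for the rows of Table 3 can be made concrete with a non-dominated filter. The sketch below assumes all three objectives are maximized (treating higher release at pH 5.5 as better is an assumption), and adds one invented data point, labeled as hypothetical, to show a dominated condition being removed.

```python
def dominates(a, b):
    """True if a is at least as good as b everywhere and better somewhere."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))

def pareto_front(points):
    """Names of the points that no other point dominates."""
    return [
        name for name, objectives in points.items()
        if not any(dominates(other, objectives)
                   for other_name, other in points.items() if other_name != name)
    ]

# (drug loading wt%, release % at pH 5.5, crystallinity %)
points = {
    "Baseline": (12.5, 45, 100),           # Table 3
    "AL Pareto-Optimal A": (18.2, 68, 92),  # Table 3
    "AL Pareto-Optimal B": (14.1, 85, 85),  # Table 3
    "Hypothetical run": (13.0, 60, 84),     # invented for illustration only
}

front = pareto_front(points)
print(front)
```

Under this assumption the baseline is also non-dominated among the rows shown, because nothing else matches its 100% crystallinity; the hypothetical run is dropped because condition A beats it on every objective.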

Experimental Protocol: Synthesis, Functionalization, and Drug Loading of UiO-66-NH₂-RGD

Materials: Zirconium(IV) chloride, 2-aminoterephthalic acid, N,N-dimethylformamide (DMF), acetic acid, RGD peptide (cyclo(Arg-Gly-Asp-D-Phe-Lys)), doxorubicin hydrochloride.

Part A: MOF Synthesis

- Dissolve ZrCl₄ (0.5 mmol) and 2-aminoterephthalic acid (0.5 mmol) in 50 mL DMF in a Teflon-lined autoclave.

- Add acetic acid (modulator) at the molar equivalent dictated by the active learning algorithm (e.g., 75 eq.).

- Heat at 120°C for 24 hours. Cool, collect by centrifugation, and wash sequentially with DMF and methanol. Activate at 120°C under vacuum.
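
Translating the modulator equivalents chosen by the algorithm into a dispensable volume is a short calculation. The sketch below assumes equivalents are defined relative to ZrCl₄ (0.5 mmol in this protocol) and that glacial acetic acid is used (60.05 g/mol, density about 1.049 g/mL near room temperature); the function name is hypothetical.

```python
ACETIC_ACID_MW = 60.05       # g/mol
ACETIC_ACID_DENSITY = 1.049  # g/mL, glacial acetic acid near room temperature

def modulator_volume_ml(equivalents, zr_mmol=0.5):
    """Volume of glacial acetic acid for a given number of equivalents vs. Zr."""
    acid_mmol = equivalents * zr_mmol
    acid_g = acid_mmol * ACETIC_ACID_MW / 1000.0
    return acid_g / ACETIC_ACID_DENSITY

for eq in (75, 100, 150):  # values appearing in Table 3
    print(f"{eq} eq -> {modulator_volume_ml(eq):.2f} mL")
```

For the three conditions in Table 3 this corresponds to roughly 2.15 mL, 2.86 mL, and 4.29 mL of acetic acid in the 50 mL DMF batch.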

Part B: Peptide Conjugation & Drug Loading

- Activation: Suspend activated UiO-66-NH₂ (50 mg) in PBS. Add excess sulfo-NHS/EDC and stir for 15 min.

- Conjugation: Add RGD peptide solution (2 mg/mL in PBS) and react for the AL-specified time (e.g., 4h). Centrifuge and wash thoroughly.

- Drug Loading: Incubate UiO-66-NH₂-RGD (10 mg) with doxorubicin solution (2 mg/mL in PBS, pH 8.5) for 24h. Centrifuge, wash, and quantify loaded drug via UV-Vis of supernatant.
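
Quantifying loaded drug from the supernatant is a depletion calculation. The sketch below assumes loading is reported as drug mass over total (carrier plus drug) mass, which is one common convention and may differ from the one used for Table 3. The 5 mL incubation volume and the supernatant concentration are hypothetical, and drug lost in the wash steps is ignored.

```python
def drug_loading_wt_pct(initial_mg_ml, supernatant_mg_ml, volume_ml, carrier_mg):
    """Loading (wt%) by depletion: drug missing from the supernatant is in the MOF."""
    loaded_mg = (initial_mg_ml - supernatant_mg_ml) * volume_ml
    return 100.0 * loaded_mg / (carrier_mg + loaded_mg)

# 2 mg/mL doxorubicin and 10 mg MOF come from the protocol;
# the volume and supernatant reading are hypothetical example values.
loading = drug_loading_wt_pct(
    initial_mg_ml=2.0, supernatant_mg_ml=1.6, volume_ml=5.0, carrier_mg=10.0
)
print(f"Drug loading: {loading:.1f} wt%")
```

In practice the supernatant concentration would come from a UV-Vis calibration curve, and the drug recovered in the washes would be subtracted from the loaded mass.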

Diagram 3: Multi-objective active learning for MOF optimization.

The Scientist's Toolkit: MOF Synthesis & Functionalization

| Item | Function in Experiment |

|---|---|

| Zirconium(IV) Chloride | Metal cluster source (Zr₆O₄(OH)₄) for UiO-66 framework formation. |

| 2-Aminoterephthalic Acid | Organic linker for UiO-66, provides -NH₂ group for post-synthetic modification. |

| Acetic Acid (Modulator) | Competes with linker, controls crystal growth rate and size, crucial for optimization. |

| Sulfo-NHS/EDC Coupling Kit | Activates carboxyl groups on RGD for stable amide bond formation with MOF -NH₂. |

| Powder X-Ray Diffractometer | Confirms MOF crystallinity is maintained after functionalization and drug loading. |

| Teflon-Lined Autoclave | Provides sealed, high-temperature environment for solvothermal MOF synthesis. |

Solving Common Active Learning Pitfalls: From Data Scarcity to Model Failure

In experimental materials synthesis and drug development, the active learning cycle comprises five stages: Hypothesis Generation → Experimental Design → Automated Synthesis/Testing → Data Analysis → Model Retraining. The "Cold-Start Problem" arises in the initial phase, when no prior experimental data exist to inform model-driven design. Overcoming this bottleneck requires strategically designed seed experiments that generate information-rich initial data and accelerate the learning cycle.

Quantitative Analysis of Seed Experiment Strategies

Recent benchmarking studies (2023-2024) compare strategies for initial experimental design in high-dimensional spaces common in materials and drug discovery.

Table 1: Comparison of Initial Seed Experiment Strategies

| Strategy | Typical # of Initial Experiments | Expected Information Gain (Bits/Experiment) | Time to First Model (Weeks) | Key Applicable Domain |

|---|---|---|---|---|

| Random Sampling | 50-100 | Low (0.5-1.2) | 8-12 | Broad, low-knowledge baseline |

| Space-Filling Design (e.g., Sobol) | 30-80 | Medium (1.5-2.8) | 6-10 | Continuous parameter optimization |

| Heuristic/Known Active | 10-30 | High but biased (2.5-4.0) | 3-6 | SAR around known hits |

| Bayesian Optim. w/Prior | 20-50 | High (3.0-4.5) | 4-8 | When informative priors exist |

| D-Optimal Design | 20-60 | Medium-High (2.0-3.5) | 5-9 | Focus on model parameter estimation |

| High-Throughput Prescreening | 500-5000 | Variable, often low per experiment | 1-3 (assay dependent) | Massive binary library screening |

Data synthesized from recent publications in *Nature Computational Science*, *J. Chem. Inf. Model.*, and *ACS Central Science* (2023-2024).

Table 2: Performance Metrics by Research Domain (2024 Benchmark)

| Domain | Optimal Seed Strategy | Avg. Cycles to Hit (n=) | Reduction in Total Expts vs. Random (%) |

|---|---|---|---|

| Small Molecule Lead Opt. | Heuristic + D-Optimal | 4.2 | 62% |

| Polymer Synthesis | Space-Filling + BO | 5.8 | 45% |

| Nanoparticle Morphology | Space-Filling (Sobol) | 6.5 | 38% |

| Solid-State Battery Electrolyte | Known Active + Random | 7.1 | 41% |

| Protein Engineering (Stability) | BO w/ProteinMPNN prior | 3.9 | 68% |

Detailed Experimental Protocols

Protocol 3.1: Space-Filling Seed Design for Continuous Parameter Optimization

Application: Catalyst, perovskite, or polymer synthesis where multiple continuous variables (temperature, concentration, time) define the search space.

Materials: See "Scientist's Toolkit" (Section 6).

Methodology:

- Define Parameter Bounds: Establish min/max for each of k continuous synthesis parameters (e.g., Temp: 50-150°C, Conc: 0.1-1.0 M, Time: 1-24 h).

- Generate Sobol Sequence: Use computational libraries (e.g., `scipy.stats.qmc` in Python) to generate a low-discrepancy sequence of N points in the k-dimensional hypercube.

- Scale to Experimental Bounds: Transform sequence points from [0,1]^k to actual experimental ranges.

- Randomize Order & Execute: Randomize the run order of the N experiments to avoid batch effects.

- Quality Control: Include 3-5 replicate center points within the design to estimate pure experimental error.

Output: A data matrix of N experiments x (k parameters + m outcome measurements).
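
The methodology above can be sketched with `scipy.stats.qmc`. The parameter bounds are the example ranges from the first step; the choice of 32 design points, 3 center-point replicates, and the random seed for the run order are assumptions made for illustration.

```python
import numpy as np
from scipy.stats import qmc

# Example bounds from the protocol: temperature (°C), concentration (M), time (h)
lower = [50.0, 0.1, 1.0]
upper = [150.0, 1.0, 24.0]

# Scrambled Sobol sequence; sample sizes that are powers of two
# preserve the balance properties of the sequence
sampler = qmc.Sobol(d=len(lower), scramble=True)
unit_points = sampler.random_base2(m=5)  # 2**5 = 32 points in [0, 1)^3

# Scale from the unit hypercube to the experimental ranges
design = qmc.scale(unit_points, lower, upper)

# Add replicate center points to estimate pure experimental error
center = (np.array(lower) + np.array(upper)) / 2.0
replicates = np.tile(center, (3, 1))
runs = np.vstack([design, replicates])

# Randomize the run order to avoid batch effects
rng = np.random.default_rng(0)
run_order = rng.permutation(len(runs))
randomized_runs = runs[run_order]

print(randomized_runs[:5])
```

Each row of `randomized_runs` is one experiment to execute in the listed order; the m outcome measurements are appended as columns once the runs are complete.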

Protocol 3.2: Heuristic-Driven Seed for Analog-Based Drug Discovery

Application: Generating an initial SAR series around a weakly active compound or hit from a prior campaign.

Methodology:

- Define Core & R-Group: Deconstruct the seed molecule into a constant core and variable R-group attachment points (1-3 sites).

- R-Group Library Design: For each site, select a diverse set of 5-10 substituents representing:

  - Size/Sterics: Small (H, F), medium (Me, OMe), large (Ph, t-Bu).

  - Polarity: Hydrophobic (alkyl), H-bond donor (OH, NH2), acceptor (C=O).

  - Electronic: Electron-donating (OMe, NMe2), withdrawing (NO2, CN).

- Combinatorial Enumeration: Generate all possible combinations (e.g., 10 x 10 = 100 for 2 sites). Use clustering (e.g., RDKit fingerprints, k-means) to select a maximally diverse subset of 15-30 compounds for initial synthesis.

- Synthesis & Assay: Prioritize synthesis of the selected analogs. Test them in the primary biochemical assay and in a cytotoxicity counter-screen.

- Data Structuring: Format data for immediate Bayesian model ingestion: SMILES strings, descriptors (e.g., MW, LogP), and activity/selectivity readouts.
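
The enumeration and subset-selection steps can be sketched in plain Python. Two simplifications are made and should be read as assumptions: the descriptor scores are coarse placeholder values (size, H-bond donor, H-bond acceptor, electronic effect, aromaticity) rather than computed descriptors or fingerprints, and a greedy MaxMin picker stands in for the fingerprint clustering and k-means step named in the protocol. SMILES strings are omitted because they depend on the core scaffold.

```python
import math
from itertools import product

# Placeholder scores: (size, H-bond donor, H-bond acceptor, electronic, aromatic)
# Illustrative values only; replace with real descriptors in practice.
R_GROUP_SCORES = {
    "H": (0, 0, 0, 0, 0),    "F": (0, 0, 0, 1, 0),
    "Me": (1, 0, 0, 0, 0),   "OMe": (1, 0, 1, -1, 0),
    "Ph": (2, 0, 0, 0, 1),   "t-Bu": (2, 0, 0, 0, 0),
    "OH": (0, 1, 1, -1, 0),  "NH2": (0, 1, 0, -1, 0),
    "NO2": (1, 0, 1, 1, 0),  "CN": (0, 0, 1, 1, 0),
}

# Combinatorial enumeration over two attachment sites: 10 x 10 = 100 analogs
analogs = list(product(R_GROUP_SCORES, repeat=2))

def features(analog):
    """Concatenate the per-site scores into one descriptor vector."""
    return [value for r_group in analog for value in R_GROUP_SCORES[r_group]]

def distance(a, b):
    return math.dist(features(a), features(b))

def maxmin_select(pool, n_select):
    """Greedy MaxMin: repeatedly add the analog farthest from those already chosen."""
    selected = [pool[0]]
    min_dist = {a: distance(a, pool[0]) for a in pool}
    while len(selected) < n_select:
        nxt = max(pool, key=lambda a: min_dist[a])
        selected.append(nxt)
        for a in pool:
            min_dist[a] = min(min_dist[a], distance(a, nxt))
    return selected

seed_set = maxmin_select(analogs, 20)  # within the 15-30 range of the protocol

# Data structuring: one record per analog, ready for assay readouts to be filled in
records = [{"R1": r1, "R2": r2, "activity": None, "cytotoxicity": None}
           for r1, r2 in seed_set]
print(records[:3])
```

Starting the picker from the unsubstituted analog (H, H) is arbitrary; a known active compound would be a more natural starting point when one exists.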

Integrated Workflow for the Cold-Start Phase

Diagram 1: Decision Flow for Initial Seed Experiment Design

From Seed Data to First Model: Starting the Active Learning Cycle

Diagram 2: Pathway from Seed Data to First Predictive Model

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Seed Experimentation

| Item/Category | Example Product/Kit (Representative) | Function in Cold-Start Context |

|---|---|---|

| High-Throughput Synthesis Platform | Chemspeed Technologies SWING or Freeslate CMS | Automated, reproducible parallel synthesis of seed libraries. |

| Solid Dispensing System | Mettler Toledo Quantos | Precise, automated dispensing of solid reagents for formulation. |

| Liquid Handling Robot | Hamilton MICROLAB STAR | Accurate transfer of solvents, catalysts, and reagents for assay prep. |