Optimizing Experimental Conditions with Machine Learning: A Guide for Biomedical Researchers

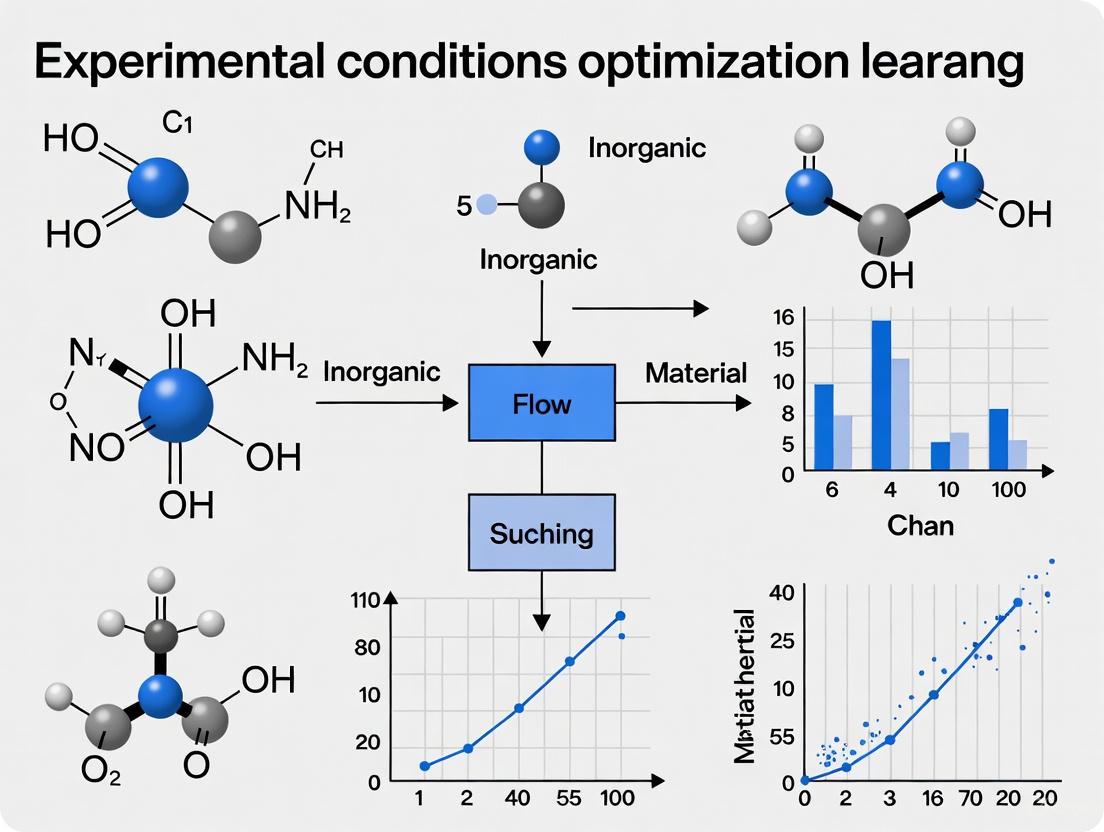



Abstract

This article provides a comprehensive guide for researchers and drug development professionals on leveraging machine learning (ML) to optimize experimental designs. It covers the foundational principles of Bayesian optimal experimental design (BOED) and simulator models, details practical methodologies for implementation, addresses common challenges like data quality and model interpretability, and presents validation frameworks through comparative case studies. The goal is to equip scientists with the knowledge to design more efficient, informative, and cost-effective experiments, accelerating discovery in biomedical and clinical research.

The Foundation: How Machine Learning is Revolutionizing Experimental Design

Bayesian Optimal Experimental Design

Bayesian Optimal Experimental Design (BOED) is a statistical framework for deciding which experiments to perform so that information gain is maximized while resource use is minimized. By combining prior knowledge with the predicted outcomes of candidate experiments, BOED quantifies the value of each experiment before it is conducted. This approach is particularly valuable in fields like drug discovery and bioprocess engineering, where experiments are often costly, time-consuming, and subject to significant uncertainty [1] [2].

The core principle of BOED is to use Bayesian inference to update beliefs about uncertain model parameters as data are observed. Unlike traditional Design of Experiments (DOE) methods that rely on predetermined mathematical models, BOED incorporates uncertainty quantification and adaptive learning, allowing for more efficient exploration of complex parameter spaces [2] [3]. This makes it well suited to optimizing experimental conditions in machine learning-driven research, where balancing exploration of unknown regions with exploitation of promising areas is crucial.

Theoretical Foundations

Core Mathematical Principles

BOED is fundamentally grounded in Bayes' theorem, which describes how prior beliefs about model parameters (θ) are updated with experimental data (y) obtained under design (d) to form a posterior distribution. The theorem is expressed as:

P(θ|y, d) = [P(y|θ, d) × P(θ)] / P(y|d)

Where:

- P(θ|y, d) is the posterior parameter distribution

- P(y|θ, d) is the likelihood function

- P(θ) is the prior parameter distribution

- P(y|d) is the model evidence [2]
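The update can be illustrated numerically. The sketch below is a minimal example under stated assumptions: the one-parameter model (response = θ × dose plus Gaussian noise), the prior, and all numerical values are hypothetical and are not taken from the cited studies. It evaluates each term of Bayes' theorem on a grid of θ values.

```python
import numpy as np

# Hypothetical toy model (an assumption for illustration only):
# response y = theta * d + Gaussian noise, with design d a dose level.
theta_grid = np.linspace(0.0, 2.0, 401)           # candidate parameter values
prior = np.exp(-0.5 * ((theta_grid - 1.0) / 0.5) ** 2)
prior /= prior.sum()                               # P(theta), normalised on the grid

def posterior_on_grid(y, d, noise_sd=0.2):
    """Return P(theta | y, d) on theta_grid via Bayes' theorem."""
    likelihood = np.exp(-0.5 * ((y - theta_grid * d) / noise_sd) ** 2)  # P(y | theta, d)
    unnormalised = likelihood * prior
    evidence = unnormalised.sum()                  # P(y | d), up to a constant factor
    return unnormalised / evidence

post = posterior_on_grid(y=1.5, d=1.0)
prior_mean = np.sum(prior * theta_grid)
prior_sd = np.sqrt(np.sum(prior * (theta_grid - prior_mean) ** 2))
post_mean = np.sum(post * theta_grid)
post_sd = np.sqrt(np.sum(post * (theta_grid - post_mean) ** 2))
print(f"prior sd = {prior_sd:.3f}, posterior mean = {post_mean:.3f}, posterior sd = {post_sd:.3f}")
```

In this toy setting a single observation narrows the posterior relative to the prior; how much it narrows depends on the design d, which is the quantity BOED optimizes.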

The expected utility of an experimental design is typically measured by its Expected Information Gain (EIG), which quantifies the expected reduction in uncertainty about the parameters. This is often formulated as the expected Kullback-Leibler (KL) divergence between the posterior and prior distributions [4] [5].
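Written out in the notation used above, the standard form of this quantity is shown below. The second equality follows from applying Bayes' theorem inside the logarithm; it is the form that sample-based estimators usually target.

```latex
\mathrm{EIG}(d)
  = \mathbb{E}_{P(y \mid d)}\!\left[ D_{\mathrm{KL}}\!\left( P(\theta \mid y, d) \,\|\, P(\theta) \right) \right]
  = \mathbb{E}_{P(\theta)\, P(y \mid \theta, d)}\!\left[ \log P(y \mid \theta, d) - \log P(y \mid d) \right]
```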

Sequential versus Batch Design

BOED can be implemented in different configurations, with sequential and batch approaches representing two fundamental paradigms:

Table: Comparison of Experimental Design Strategies

| Design Strategy | Feedback Mechanism | Lookahead Capability | Computational Complexity | Optimality |
|---|---|---|---|---|
| Batch (Static) | None | None | Low | Suboptimal |
| Greedy (Myopic) | Immediate | Single-step | Moderate | Improved |
| Sequential (sOED) | Adaptive | Multi-step | High | Provably optimal [4] |

Sequential BOED represents the most sophisticated approach, formulating experimental design as a partially observable Markov decision process (POMDP) that incorporates both feedback from previous results and lookahead to future experiments [4]. This formulation generalizes both batch and greedy design strategies, making it provably optimal but computationally demanding.

BOED in Drug Discovery: A Case Study

Application to Pharmacodynamic Models

BOED has demonstrated significant value in optimizing pharmacodynamic (PD) models, which are mathematical representations of cellular reaction networks that include drug mechanisms of action. These models face substantial challenges due to parameter uncertainty, particularly when experimental data for calibration is limited or unavailable for novel pathways [1] [6].

A notable application involves PD models of programmed cell death (apoptosis) in cancer cells treated with PARP1 inhibitors. These models simulate synthetic lethality, in which cancer cells with specific genetic vulnerabilities are targeted while healthy cells remain unaffected. However, uncertainty in model parameters leads to unreliable predictions of drug efficacy, creating a critical bottleneck in therapeutic development [1] [6].

Experimental Objectives and Metrics

In this drug discovery context, BOED aims to identify which experimental measurements will most effectively reduce uncertainty in predictions of therapeutic performance. Researchers have developed two key decision-relevant metrics:

- Uncertainty in probability of triggering cell death: Measures confidence in model estimates of drug effectiveness at inducing apoptosis

- Uncertainty in drug dosage: Quantifies confidence in predicting the dosage required to achieve a specific probability of cell death [1]

These metrics enable quantitative comparison of different experimental strategies based on their impact on predictive reliability rather than merely parameter uncertainty.

Quantitative Results and Experimental Recommendations

Simulation studies using BOED for PARP1 inhibitor models have yielded specific, quantitative recommendations for experimental prioritization:

Table: Optimal Experimental Measurements for PARP1 Inhibitor Studies

| Drug Concentration | Recommended Measurement | Uncertainty Reduction | Key Impact |
|---|---|---|---|
| Low IC₅₀ | Activated caspases | Up to 24% reduction | Improved confidence in probability of cell death |
| High IC₅₀ | mRNA-Bax levels | Up to 57% reduction | Enhanced dosage prediction accuracy [1] [6] |

These findings demonstrate that the optimal experimental measurement depends critically on the specific therapeutic context and performance metric of interest, highlighting the importance of defining clear objectives before applying BOED.

Experimental Protocols

General BOED Workflow for Drug Discovery

The following protocol outlines the complete BOED workflow for drug discovery applications, specifically for optimizing measurements in PARP1 inhibitor studies:

Step 1: Construct Prior Distributions

- Define prior probability distributions for all uncertain parameters in the PD model based on existing biological knowledge and literature

- Priors should encompass plausible ranges for kinetic parameters, initial conditions, and measurable species concentrations [6]

Step 2: Generate Synthetic Experimental Data

- Use the PD model with parameter samples from prior distributions to simulate experimental outcomes

- Incorporate appropriate noise models that reflect measurement error characteristics of laboratory techniques

- Generate large ensembles of synthetic datasets for each prospective experimental measurement [6]

Step 3: Perform Bayesian Inference

- Implement Hamiltonian Monte Carlo (HMC) sampling to compute posterior parameter distributions conditioned on synthetic data

- For each potential experiment type, repeat inference across multiple synthetic datasets to capture variability

- Validate convergence of sampling algorithms using diagnostic statistics [6]

Step 4: Compute Posterior Predictions

- Use posterior parameter distributions to simulate drug performance metrics

- Calculate probability of apoptosis induction across a range of drug concentrations

- Estimate minimum inhibitor concentration needed to achieve target efficacy (e.g., IC₉₀) [1] [6]

Step 5: Calculate Uncertainty Metrics

- Quantify uncertainty in key performance metrics for both prior and posterior predictions

- Compute uncertainty reduction for each candidate experiment using variance-based metrics or information-theoretic measures

- Focus on decision-relevant uncertainties rather than parameter uncertainties alone [1]

Step 6: Rank Experimental Designs

- Compare expected utility across all candidate measurements

- Select experiments that maximize reduction in decision-relevant uncertainties

- Consider practical constraints including measurement cost and technical feasibility [1] [6]
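The six steps above can be sketched end to end on a toy problem. This is a minimal illustration under loud assumptions: the two-parameter model, the observables `species_A` and `species_B`, the noise level, and the sigmoid "probability of cell death" are all hypothetical, and self-normalised importance sampling is swapped in for HMC to keep the example short. It is not the PARP1 model from the cited work.

```python
import numpy as np

rng = np.random.default_rng(0)

# Step 1: priors over two hypothetical kinetic parameters (assumed log-normal).
n_prior = 4000
theta = rng.lognormal(mean=0.0, sigma=0.5, size=(n_prior, 2))

# Hypothetical observables; neither is the PARP1 model from the cited work.
candidates = {
    "species_A": lambda th: th[:, 0],              # informs only parameter 0
    "species_B": lambda th: th[:, 0] * th[:, 1],   # informs the product
}
noise_sd = 0.1

def prediction(th):
    """Decision-relevant quantity: a toy 'probability of cell death'."""
    return 1.0 / (1.0 + np.exp(-(th[:, 0] * th[:, 1] - 1.0) * 3.0))

q = prediction(theta)
prior_var = q.var()

def expected_posterior_variance(observe, n_datasets=200):
    obs_all = observe(theta)                       # simulator output per prior sample
    post_vars = []
    for i in rng.choice(n_prior, size=n_datasets, replace=False):
        # Step 2: synthetic datum from one "true" parameter draw plus noise.
        y = obs_all[i] + rng.normal(0.0, noise_sd)
        # Step 3: posterior via self-normalised importance weights
        # (a cheap stand-in for the HMC sampling named in the protocol).
        log_w = -0.5 * ((y - obs_all) / noise_sd) ** 2
        w = np.exp(log_w - log_w.max())
        w /= w.sum()
        # Steps 4-5: posterior predictive variance of the decision metric.
        mean_q = np.sum(w * q)
        post_vars.append(np.sum(w * (q - mean_q) ** 2))
    return float(np.mean(post_vars))

# Step 6: rank candidates by expected reduction in predictive variance.
reduction = {
    name: 1.0 - expected_posterior_variance(obs) / prior_var
    for name, obs in candidates.items()
}
ranking = sorted(reduction, key=reduction.get, reverse=True)
for name in ranking:
    print(f"{name}: expected variance reduction = {reduction[name]:.1%}")
```

Because the toy prediction depends only on the product of the two parameters, the measurement that observes that product ranks first. The point of the sketch is the structure: candidates are compared on a decision-relevant uncertainty rather than on parameter uncertainty alone.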

Protocol for Sequential BOED Using Policy Gradient Reinforcement Learning

For more advanced applications requiring sequential decision-making, the following protocol implements the Policy Gradient Sequential Optimal Experimental Design (PG-sOED) method:

Step 1: Problem Formulation as POMDP

- Model the sequential design problem as a finite-horizon Partially Observable Markov Decision Process

- Define belief states as posterior distributions of parameters given all available data

- Specify design spaces, observation spaces, and transition dynamics [4]

Step 2: Policy Parameterization

- Implement deep neural networks to represent policy functions that map belief states to experimental designs

- Choose appropriate network architectures based on complexity of design and parameter spaces

- Initialize policy parameters using domain knowledge where possible [4]

Step 3: Policy Gradient Optimization

- Derive gradient expressions for the expected utility with respect to policy parameters

- Employ actor-critic methods from reinforcement learning to estimate gradients

- Use Monte Carlo sampling to approximate expected information gain [4]

Step 4: Policy Evaluation and Refinement

- Simulate full trajectories of sequential experiments using current policy

- Compute cumulative information gain over the entire design horizon

- Iteratively update policy parameters using gradient ascent [4]

Step 5: Experimental Implementation

- Execute the optimized policy in actual experimental sequence

- Update belief states after each experiment using Bayesian inference

- Adapt future experimental designs based on accumulated data [4]
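The policy-gradient idea in Step 3 can be illustrated on a deliberately reduced problem. The sketch below is not PG-sOED: it has a single experiment, no belief state, no neural network, and no critic. It assumes a hypothetical linear-Gaussian model y = θd + noise with unit prior and noise variance, for which the information gain has the closed form 0.5·log(1 + d²), and adds a hypothetical quadratic cost so that an interior optimum exists (at |d| = 2 for the cost used here). A Gaussian policy over d is then improved with a score-function (REINFORCE-style) gradient estimate.

```python
import numpy as np

rng = np.random.default_rng(1)

def utility(d, cost=0.1):
    """Closed-form EIG of y = theta*d + noise (unit prior and noise variance)
    minus a hypothetical quadratic cost on the design magnitude."""
    return 0.5 * np.log1p(d**2) - cost * d**2

mu, sigma = 0.5, 0.3          # Gaussian policy over the design: d ~ N(mu, sigma^2)
learning_rate, batch = 0.05, 256

for step in range(1000):
    d = rng.normal(mu, sigma, size=batch)        # sample designs from the policy
    u = utility(d)
    baseline = u.mean()                          # variance-reduction baseline
    score = (d - mu) / sigma**2                  # d/dmu of log N(d; mu, sigma^2)
    grad_mu = np.mean((u - baseline) * score)    # score-function gradient estimate
    mu += learning_rate * grad_mu                # gradient ascent on expected utility

print(f"learned design mean: {mu:.2f}")
```

In the full sequential setting the policy would take the current belief state as input and the utility would be the cumulative information gain over the design horizon, estimated by Monte Carlo rather than evaluated in closed form.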

Computational Methods and Implementation

Algorithmic Approaches

Implementing BOED requires specialized computational methods to handle the inherent challenges of Bayesian inference and optimization:

Hamiltonian Monte Carlo (HMC): For high-dimensional parameter inference in PD models, HMC provides efficient sampling from posterior distributions by leveraging gradient information to explore parameter spaces [6].

Policy Gradient Reinforcement Learning: For sequential BOED problems, policy gradient methods enable optimization of design policies parameterized by deep neural networks, effectively handling continuous design spaces and complex utility functions [4].

Diffusion-Based Sampling: Recent advances utilize conditional diffusion models to sample from pooled posterior distributions, enabling tractable optimization of expected information gain without resorting to lower-bound approximations [5].

Table: Essential Research Reagent Solutions for BOED Implementation

| Tool/Category | Specific Examples | Function | Implementation Notes |
|---|---|---|---|
| Probabilistic Programming | Stan, PyMC, Pyro | Bayesian inference | Essential for posterior computation; HMC implementation critical for ODE models |
| Optimization Libraries | BoTorch, Ax Platform | Experimental design optimization | Provide acquisition functions and optimization algorithms |
| Reinforcement Learning | TensorFlow, PyTorch | Policy gradient implementation | Enable DNN parameterization of policies in sOED |
| Specialized BOED Packages | optbayesexpt (NIST) | Sequential experimental design | Python package for adaptive settings selection [7] |
| Differential Equation Solvers | SUNDIALS, SciPy | ODE model simulation | Required for dynamic biological system models |

Advanced Considerations and Future Directions

Addressing Model Misspecification

A significant challenge in practical BOED applications is model misspecification, where the computational model does not perfectly represent the true underlying system. Recent research has shown that in the presence of misspecification, covariate shift between training and testing conditions can amplify generalization errors. Novel acquisition functions that explicitly account for representativeness and error de-amplification are being developed to mitigate these effects [8].

Scaling to High-Dimensional Problems

Traditional BOED methods face computational bottlenecks when applied to high-dimensional design spaces or complex models. Emerging approaches leverage:

- Sparse Gaussian Processes: For efficient surrogate modeling with large datasets [2]

- Deep Ensemble Methods: To provide uncertainty estimates with non-probabilistic models [2]

- Contrastive Diffusion Models: For tractable EIG optimization in high-dimensional settings [5]

Integration with Experimental Automation

The full potential of BOED is realized when coupled with automated experimental systems. Closed-loop platforms that integrate BOED with high-throughput screening and robotic instrumentation enable rapid iteration through design-synthesize-test cycles, dramatically accelerating optimization in fields like bioprocess engineering and drug discovery [3].

Bayesian Optimal Experimental Design represents a paradigm shift in how researchers plan and execute experiments, moving from heuristic approaches to principled, uncertainty-aware decision-making. By quantifying the expected information gain of potential experiments, BOED enables more efficient resource allocation and faster scientific discovery. The protocols and applications outlined in this document provide a foundation for implementing BOED across various domains, with particular emphasis on drug discovery and bioprocess optimization. As computational methods continue to advance and integrate with automated experimental platforms, BOED is poised to become an indispensable tool in the machine learning-driven optimization of experimental conditions.

Why Traditional Experimental Design Falls Short for Complex Models

In the rapidly evolving landscape of machine learning research, traditional experimental design methodologies are increasingly revealing their limitations when applied to complex modern models. While classical Design of Experiments (DOE) approaches have served researchers well for decades in optimizing physical processes and product development, they struggle to capture the intricate, high-dimensional relationships inherent in contemporary artificial intelligence systems, particularly Large Reasoning Models (LRMs) and other sophisticated machine learning architectures [9] [10]. The fundamental disconnect stems from traditional DOE's foundation in linear modeling assumptions and its primary focus on parameter estimation efficiency, which contrasts sharply with the prediction-oriented, non-linear nature of complex AI systems [11] [12].

The emergence of AI systems capable of detailed reasoning processes has further exposed these limitations. Recent research has identified an "accuracy collapse" phenomenon in LRMs beyond certain complexity thresholds, where model performance drops precipitously despite sophisticated self-reflection mechanisms [9]. However, this apparent failure may actually reflect experimental design artifacts rather than fundamental model limitations, highlighting the critical need for more sophisticated evaluation frameworks [13]. This application note examines these limitations systematically and provides modern protocols for experimental design that align with the complexities of contemporary AI research.

Key Limitations of Traditional Experimental Designs

Statistical vs. Computational Efficiency Mismatch

Traditional experimental designs prioritize statistical efficiency through carefully structured, often sparse arrangements of experimental points. Methods like Central Composite Designs (CCDs), Box-Behnken Designs (BBDs), and Full Factorial Designs (FFDs) aim to maximize information gain while minimizing experimental runs [11]. While effective for traditional industrial experiments, this approach creates fundamental tensions with computational requirements of complex models:

- Fixed Design Inefficiency: Traditional DOEs employ fixed designs generated before data collection, making them incapable of adapting to emerging patterns during model training or evaluation [11].

- Exploration-Exploitation Imbalance: Classical designs lack mechanisms for dynamically balancing exploration of unknown regions and exploitation of promising areas, a crucial capability for optimizing complex models [14].

- Resource Misallocation: By prioritizing uniform space coverage, traditional designs often waste computational resources on unproductive regions of the parameter space that could be reallocated based on interim results [12].

Inadequate Handling of High-Dimensional Spaces

As model complexity increases, traditional experimental designs face fundamental scalability challenges:

Table 1: Scalability Comparison of Experimental Design Approaches

| Design Approach | Practical Factor Limit | Computational Complexity | Nonlinear Capture Ability |
|---|---|---|---|
| Full Factorial | 4-6 factors | O(k^n) | Limited |
| Response Surface | 6-10 factors | O(n^2) | Moderate (quadratic) |
| Space-Filling | 10-20 factors | O(n log n) | Good |
| Adaptive ML | 100+ factors | O(n) per iteration | Excellent |

The "curse of dimensionality" manifests severely in traditional designs. For instance, a full factorial design with just 20 factors at 2 levels requires 1,048,576 runs—computationally prohibitive for most complex model training scenarios [11]. While fractional factorial and other reduced designs mitigate this problem, they rely on effect sparsity assumptions that often don't hold in complex AI systems with intricate high-order interactions [12].
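The growth in run count is easy to check directly. The short sketch below (function name chosen here for illustration) computes the size of a full factorial design as levels raised to the number of factors and confirms the rule by enumerating a small design explicitly.

```python
from itertools import product

def full_factorial_runs(n_factors, n_levels=2):
    """Number of runs in a full factorial design: levels ** factors."""
    return n_levels ** n_factors

# Enumerate a small design explicitly to confirm the counting rule.
small_design = list(product([-1, +1], repeat=4))
assert len(small_design) == full_factorial_runs(4)

for k in (5, 10, 20):
    print(k, "factors ->", full_factorial_runs(k), "runs")
```

Each added two-level factor doubles the number of runs, which is why full factorial designs stop being practical after a handful of factors.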

Rigidity in Model Representation

Traditional DOE methodologies typically assume polynomial response surfaces of limited complexity (typically quadratic), constraining their ability to capture the rich, non-linear behaviors of modern machine learning models:

- Pre-specified Model Forms: Traditional approaches require researchers to specify model forms in advance, creating a mismatch with neural networks and other models that learn representations directly from data [10].

- Limited Interaction Depth: While capable of capturing two-factor interactions, traditional designs struggle with the complex, high-order interactions that characterize deep learning models [12].

- Discrete Level Limitations: The reliance on discrete factor levels (high/low, etc.) fails to capture continuous, non-monotonic responses common in AI system hyperparameter tuning [11].

Quantitative Analysis of Design Performance

Recent comparative studies provide empirical evidence of traditional design limitations when applied to complex modeling scenarios:

Table 2: Performance Comparison of Design Approaches with ML Models (Adapted from Arboretti et al., 2023) [11]

| Design Category | Specific Design | ANN Prediction RMSE | SVM Prediction RMSE | Random Forest RMSE | Traditional RSM RMSE |
|---|---|---|---|---|---|
| Classical | CCD | 0.89 | 0.92 | 0.85 | 0.95 |
| Classical | BBD | 0.91 | 0.94 | 0.88 | 0.97 |
| Optimal | D-optimal | 0.75 | 0.78 | 0.72 | 0.82 |
| Optimal | I-optimal | 0.72 | 0.75 | 0.69 | 0.79 |
| Space-Filling | Random LHD | 0.68 | 0.71 | 0.65 | 0.84 |
| Space-Filling | MaxPro | 0.64 | 0.67 | 0.62 | 0.81 |

The data reveal several critical patterns. First, space-filling designs consistently outperform classical approaches across all model types, with MaxPro designs achieving about 28% lower RMSE than CCDs when used with ANN models (0.64 versus 0.89) [11]. Second, the performance gap between traditional RSM and ML models is most pronounced when paired with space-filling designs, suggesting that traditional designs fundamentally limit model expressiveness. Third, I-optimal designs, which focus on prediction variance reduction, show particular promise for complex models where prediction accuracy is the primary objective [12].
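The relative gaps can be recomputed from the MaxPro and CCD rows of Table 2. The snippet below copies those values and reports the fractional RMSE reduction of MaxPro relative to CCD for each ML model type; the helper name is chosen here for illustration.

```python
# RMSE values copied from the CCD and MaxPro rows of Table 2.
rmse = {
    "ANN": {"CCD": 0.89, "MaxPro": 0.64},
    "SVM": {"CCD": 0.92, "MaxPro": 0.67},
    "Random Forest": {"CCD": 0.85, "MaxPro": 0.62},
}

def relative_reduction(baseline, candidate):
    """Fractional RMSE reduction of `candidate` relative to `baseline`."""
    return (baseline - candidate) / baseline

reductions = {
    model: relative_reduction(values["CCD"], values["MaxPro"])
    for model, values in rmse.items()
}
for model, value in reductions.items():
    print(f"{model}: MaxPro RMSE is {value:.1%} lower than CCD")
```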

Modern Experimental Design Framework for Complex Models

Adaptive Experimentation with Bayesian Optimization

Bayesian optimization represents a fundamental shift from traditional DOE by treating experimental design as a sequential decision-making process rather than a fixed plan:

Diagram 1: Bayesian Optimization Workflow

This adaptive approach, implemented in platforms like Ax (Meta's adaptive experimentation platform), employs a Gaussian process as a surrogate model during the optimization loop, making predictions while quantifying uncertainty—particularly effective with limited data points [14]. The acquisition function (typically Expected Improvement) then suggests the next most promising configurations to evaluate by capturing the expected value of any new configuration compared to the best previously evaluated configuration [14].
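The loop described above can be sketched without any optimization library. The example below is a minimal illustration, not the Ax or BoTorch implementation: it assumes a one-dimensional design variable, a zero-mean Gaussian process with a fixed squared-exponential kernel (no hyperparameter fitting), and a hypothetical `objective` function standing in for an experiment. Expected Improvement is evaluated on a grid and the highest-scoring unevaluated point is chosen each round.

```python
import math
import numpy as np

def rbf_kernel(a, b, length_scale=0.2):
    """Squared-exponential kernel between 1-D point sets a and b."""
    return np.exp(-0.5 * ((a[:, None] - b[None, :]) / length_scale) ** 2)

def gp_posterior(x_train, y_train, x_test, jitter=1e-6):
    """Posterior mean and standard deviation of a zero-mean GP surrogate."""
    k_tt = rbf_kernel(x_train, x_train) + jitter * np.eye(len(x_train))
    k_ts = rbf_kernel(x_train, x_test)
    solve = np.linalg.solve(k_tt, np.column_stack([y_train, k_ts]))
    mean = k_ts.T @ solve[:, 0]
    var = 1.0 - np.sum(k_ts * solve[:, 1:], axis=0)
    return mean, np.sqrt(np.clip(var, 1e-12, None))

def expected_improvement(mean, sd, best):
    """EI for maximisation: E[max(f - best, 0)] under the GP posterior."""
    z = (mean - best) / sd
    pdf = np.exp(-0.5 * z**2) / math.sqrt(2.0 * math.pi)
    cdf = 0.5 * (1.0 + np.vectorize(math.erf)(z / math.sqrt(2.0)))
    return (mean - best) * cdf + sd * pdf

def objective(x):
    """Hypothetical black-box response standing in for an experiment."""
    return np.sin(3.0 * x) * (1.0 - x) + 0.5 * x

grid = np.linspace(0.0, 1.0, 201)
x_obs = grid[[20, 100, 180]]              # small initial design
y_obs = objective(x_obs)

for _ in range(10):                        # sequential optimisation loop
    offset = y_obs.mean()                  # centre the data for the zero-mean GP
    mean, sd = gp_posterior(x_obs, y_obs - offset, grid)
    ei = expected_improvement(mean + offset, sd, y_obs.max())
    ei[np.isin(grid, x_obs)] = -np.inf     # never re-run an evaluated point
    x_next = grid[np.argmax(ei)]           # configuration with highest EI
    x_obs = np.append(x_obs, x_next)
    y_obs = np.append(y_obs, objective(x_next))

print(f"best x = {x_obs[np.argmax(y_obs)]:.3f}, best y = {y_obs.max():.3f}")
```

The two terms in `expected_improvement` make the exploration-exploitation balance explicit: the first rewards points whose predicted mean exceeds the current best, the second rewards points where the surrogate is uncertain.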

Multi-Objective Optimization Strategies

Complex AI systems typically involve multiple, often competing objectives—a scenario poorly handled by traditional single-response DOE:

Diagram 2: Multi-Objective Optimization Process

Modern approaches address this through compound criteria that balance competing objectives. For instance, a researcher might combine a D-optimal criterion for parameter estimation with an I-optimal criterion for prediction, represented as Φ = w_D Φ_D + w_I Φ_I, where w_D and w_I are weights assigned based on relative importance [12]. This enables nuanced trade-off analysis impossible with traditional methods.
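A compound criterion of this kind can be sketched for a very small case. The example below makes several assumptions that are not from the source: a first-order model in one factor, a D-type term defined as log det(XᵀX) per parameter, an I-type term defined as the negative log of the average prediction variance over [-1, 1] (so that larger is better for both terms), and equal weights.

```python
import numpy as np

def model_matrix(x):
    """Model matrix for a first-order model in one factor: columns [1, x]."""
    x = np.asarray(x, dtype=float)
    return np.column_stack([np.ones_like(x), x])

def compound_criterion(design, w_d=0.5, w_i=0.5):
    """Weighted sum of a D-type and an I-type criterion (larger is better)."""
    x_mat = model_matrix(design)
    info = x_mat.T @ x_mat                               # information matrix X'X
    p = info.shape[0]
    phi_d = np.linalg.slogdet(info)[1] / p               # log det(X'X) per parameter
    region = model_matrix(np.linspace(-1.0, 1.0, 101))   # prediction region [-1, 1]
    pred_var = np.einsum("ij,jk,ik->i", region, np.linalg.inv(info), region)
    phi_i = -np.log(pred_var.mean())                     # negative log average variance
    return w_d * phi_d + w_i * phi_i

spread = [-1.0, -1.0, 1.0, 1.0]      # runs at the extremes of the region
narrow = [-0.5, -0.5, 0.5, 0.5]      # runs clustered near the centre
print(compound_criterion(spread), compound_criterion(narrow))
```

For this simple model the spread design scores higher on both terms. In realistic problems the two terms can disagree, and the weights then determine which design is preferred.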

Experimental Protocols for Complex Model Evaluation

Protocol: Adaptive Hyperparameter Tuning for LRMs

Objective: Efficiently optimize hyperparameters for Large Reasoning Models while accounting for their unique "thinking" characteristics and avoiding evaluation artifacts.

Materials & Setup:

- Access to LRM platform (OpenAI o1/o3, Claude Thinking, DeepSeek-R1, or Gemini Thinking)

- Bayesian optimization framework (Ax, BoTorch, or Scikit-Optimize)

- Computational budget allocation (time and monetary constraints)

Procedure:

- Define Critical Parameters: Identify 5-10 most influential hyperparameters (thinking tokens, temperature, sampling strategy, etc.) and their feasible ranges based on preliminary screening.

- Establish Compound Metric: Develop evaluation metric combining:
  - Primary task accuracy (weight: 0.6)
  - Reasoning efficiency (tokens/solution) (weight: 0.25)
  - Solution consistency across variations (weight: 0.15)

- Initialize with Space-Filling Design: Generate 20-30 initial points using MaxPro discrete design for balanced initial coverage [11].

- Iterative Optimization Loop:
  - For each iteration (50-100 total):
    - Train/evaluate model with current parameter set
    - Update Gaussian process surrogate model
    - Calculate Expected Improvement across parameter space
    - Select next parameter combination maximizing EI

- Validation & Analysis:
  - Validate final parameters on holdout problem set
  - Perform sensitivity analysis to identify critical parameters
  - Document Pareto-optimal solutions for different resource constraints

Troubleshooting:

- For unstable convergence: Increase initial design points to 40-50

- For computational bottlenecks: Implement early stopping policies

- For metric conflicts: Return to step 2 and adjust weighting based on domain priorities
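The compound metric from the procedure can be written as a small scoring function. The weights (0.6, 0.25, 0.15) come from the protocol; everything else is an assumption made for this sketch, in particular the hypothetical `token_budget` used to map tokens per solution onto a 0-1 efficiency score and the example configurations.

```python
def compound_score(accuracy, tokens_per_solution, consistency,
                   token_budget=32_000, weights=(0.6, 0.25, 0.15)):
    """Weighted evaluation metric; all three components are scaled to [0, 1]."""
    w_acc, w_eff, w_con = weights
    # Assumed normalisation: fewer tokens per solution -> efficiency closer to 1.
    efficiency = min(1.0, max(0.0, 1.0 - tokens_per_solution / token_budget))
    return w_acc * accuracy + w_eff * efficiency + w_con * consistency

# Hypothetical evaluation results for two hyperparameter configurations.
candidates = {
    "config_a": compound_score(accuracy=0.80, tokens_per_solution=8_000, consistency=0.90),
    "config_b": compound_score(accuracy=0.85, tokens_per_solution=24_000, consistency=0.70),
}
best = max(candidates, key=candidates.get)
print(best, round(candidates[best], 4))
```

With these illustrative numbers the slightly less accurate but far more token-efficient configuration wins, which is the kind of trade-off the weighting is meant to surface. Adjusting `weights` is the code-level counterpart of the weighting adjustment suggested under Troubleshooting.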

Protocol: Artifact-Free LRM Capability Evaluation

Objective: Accurately assess true reasoning capabilities while controlling for experimental artifacts like token limits and evaluation rigidity [13].

Materials:

- LRM access with sufficient token budget (≥128K context)

- Puzzle frameworks (Tower of Hanoi, River Crossing, Blocks World)

- Programmatic evaluation infrastructure with multiple output modalities

Procedure:

- Token Requirement Analysis:
  - Calculate theoretical token requirements for full solution enumeration
  - Verify token budget exceeds requirements by 2x margin
  - For Tower of Hanoi: T(N) ≈ 5(2^N - 1)^2 + C [13]
  - Set N such that T(N) ≤ 0.5 * context limit

- Multi-Modal Output Assessment:
  - Prompt for traditional step-by-step solutions
  - Additionally prompt for algorithmic representations (Python functions, pseudocode)
  - Request explanatory narratives of solution strategy

- Solvability Verification:
  - Mathematically verify all problem instances are solvable
  - For River Crossing: Confirm N ≤ 5 for boat capacity b=3 [13]
  - Exclude unsolvable instances from capability assessment

- Adaptive Evaluation Framework:
  - Implement credit assignment for partial solutions
  - Distinguish between reasoning failures and practical constraints
  - Assess conceptual understanding separately from execution completeness

- Cross-Representation Analysis:
  - Compare performance across output modalities
  - Identify representation-dependent capability patterns
  - Focus on consistent reasoning patterns rather than exact output matching

Validation:

- Confirm high accuracy on alternative representations (e.g., Lua functions for Tower of Hanoi) [13]

- Verify models demonstrate understanding through explanatory narratives

- Ensure failure cases represent genuine reasoning gaps rather than output constraints
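The token-budget check from the Token Requirement Analysis step can be sketched as follows. The formula T(N) ≈ 5(2^N - 1)^2 + C and the 0.5 × context-limit rule come from the protocol; the constant C is not specified there, so it is exposed as a hypothetical `overhead` argument that defaults to 0, and the 128,000-token limit is an example value.

```python
def hanoi_token_estimate(n_disks, overhead=0):
    """T(N) ~ 5 * (2**N - 1)**2 + C, with the constant C passed as `overhead`."""
    return 5 * (2 ** n_disks - 1) ** 2 + overhead

def largest_feasible_n(context_limit, margin=0.5, overhead=0):
    """Largest N whose estimated token need fits within margin * context_limit."""
    n = 0
    while hanoi_token_estimate(n + 1, overhead) <= margin * context_limit:
        n += 1
    return n

context_limit = 128_000                   # example budget; adjust to the model used
n_max = largest_feasible_n(context_limit)
print(f"largest N within half the context: {n_max}")
print(f"estimated tokens at N={n_max}: {hanoi_token_estimate(n_max)}")
```

Because the estimate grows roughly fourfold with each added disk, even a large context window supports only small N for full move enumeration. Instances above that size measure output capacity rather than reasoning.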

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 3: Key Research Tools for Complex Model Experimentation

| Tool/Category | Specific Examples | Primary Function | Application Context |
|---|---|---|---|
| Adaptive Experimentation Platforms | Ax, BoTorch, SigOpt | Bayesian optimization implementation | Hyperparameter tuning, resource allocation |
| Design Generation Libraries | AlgDesign (R), PyDOE2 (Python) | Traditional & optimal design generation | Initial screening, baseline comparisons |
| Multi-Objective Optimization | ParEGO, MOE, Platypus | Pareto front identification | Trade-off analysis, constraint management |
| Model Interpretation | SHAP, LIME, Partial Dependence | Black-box model interpretation | Causal investigation, feature importance |
| Uncertainty Quantification | Conformal Prediction, Bayesian Neural Networks | Prediction interval estimation | Risk assessment, model reliability |
| Benchmarking Suites | NAS-Bench, RL-Bench, Reasoning Puzzles | Standardized performance assessment | Capability evaluation, progress tracking |


The limitations of traditional experimental design when applied to complex models stem from fundamental mismatches in objectives, assumptions, and methodologies. While traditional DOE excels in parameter estimation for well-understood systems with limited factors, complex AI models require adaptive, flexible approaches that prioritize prediction accuracy and can navigate high-dimensional, non-linear spaces efficiently. The integration of machine learning with experimental design through Bayesian optimization, multi-objective frameworks, and artifact-aware evaluation protocols represents the path forward for researchers tackling increasingly sophisticated AI systems.

Modern platforms like Ax demonstrate the practical implementation of these principles at scale, enabling efficient optimization of complex systems while providing crucial insights into parameter relationships and trade-offs [14]. As AI systems continue to evolve toward more sophisticated reasoning capabilities, our experimental methodologies must similarly advance beyond twentieth-century statistical paradigms to twenty-first-century computational approaches that embrace rather than resist complexity.

In the rapidly evolving fields of machine learning and scientific research, simulator models and the principle of Expected Information Gain (EIG) have become foundational to optimizing experimental design. Simulator models, or computational models that emulate complex real-world systems, allow researchers to test hypotheses and run virtual experiments in a cost-effective and controlled environment. When paired with EIG—a metric from Bayesian optimal experimental design (BOED) that quantifies the expected reduction in uncertainty about a model's parameters from a given experiment—they form a powerful framework for guiding data collection. This is particularly crucial in domains like drug development, where physical experiments are exceptionally time-consuming and expensive. The core objective is to use these simulators to identify the experimental designs that will yield the most informative data, thereby accelerating the pace of discovery [15] [16].

This document details the core concepts, applications, and protocols for implementing EIG within simulator models. It is structured to provide researchers, scientists, and drug development professionals with both the theoretical foundation and the practical tools needed to integrate these methods into their research workflows for optimizing experimental conditions.

Core Concepts and Definitions

Simulator Models

Simulator models are computational programs that mimic the behavior of real-world processes or systems. In scientific and engineering contexts, they are used to understand system behavior, predict outcomes under different conditions, and perform virtual experiments that would be infeasible or unethical to conduct in reality.

- In-silico Models: These are computer simulation models used extensively in biomedical research to replicate human physiological and pathological processes. They range from pharmacokinetic/pharmacodynamic (PK/PD) models that predict drug concentration and effect in the body, to complex, multi-scale models of disease progression [15].

- Agent-Based Models (ABM): These models simulate the actions and interactions of autonomous agents (e.g., cells, individuals in a population) to assess their effects on the system as a whole. They are particularly useful for studying emergent behaviors in complex systems [17].

- Molecular Docking Simulations: Critical in drug discovery, these simulators predict how a small molecule (like a drug candidate) binds to a protein target. Machine learning is increasingly used to enhance the scoring functions that evaluate these interactions, leading to more accurate predictions of binding affinity [18].

The adoption of these models is driven by their potential to overcome the limitations of traditional animal models, including ethical concerns, high costs, and poor translational relevance to human biology [15].

Expected Information Gain (EIG)

Expected Information Gain (EIG) is the central quantity in Bayesian Optimal Experimental Design (BOED). It provides a rigorous, information-theoretic criterion for evaluating and comparing potential experimental designs before any physical data is collected [16] [19].

In the BOED framework, a model is defined with:

- A prior distribution, \( p(\theta) \), representing initial belief about the parameters of interest.

- A likelihood function, \( p(y|\theta, d) \), describing the probability of observing data \( y \) given parameters \( \theta \) and an experimental design \( d \).

The EIG for a design \( d \) is defined as the expected reduction in entropy (a measure of uncertainty) of the parameters \( \theta \) upon observing the outcome \( y \):

\[ \text{EIG}(d) = \mathbf{E}_{p(y|d)} \left[ H[p(\theta)] - H[p(\theta|y, d)] \right] \]

Here, \( H[p(\theta)] \) is the entropy of the prior, and \( H[p(\theta|y, d)] \) is the entropy of the posterior distribution after observing data \( y \). Intuitively, EIG measures how much we expect to "learn" about \( \theta \) by running an experiment with design \( d \) [16]. The optimal design is the one that maximizes this quantity.
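When only a simulator is available, EIG is usually estimated by sampling. The sketch below uses a plain nested Monte Carlo estimator on a hypothetical linear-Gaussian model (y = θd + noise, standard-normal prior, unit noise variance), chosen because its EIG has the closed form 0.5·log(1 + d²) against which the estimate can be checked. The model, sample sizes, and function names are assumptions made for illustration; it relies on the equivalent expression of EIG as the expected value of log p(y|θ, d) − log p(y|d).

```python
import numpy as np

rng = np.random.default_rng(0)

def log_likelihood(y, theta, d, noise_sd=1.0):
    """log p(y | theta, d) for the toy model y = theta * d + Gaussian noise."""
    return -0.5 * ((y - theta * d) / noise_sd) ** 2 - np.log(noise_sd * np.sqrt(2 * np.pi))

def eig_nested_mc(d, n_outer=2000, n_inner=2000):
    """Nested Monte Carlo estimate of EIG(d) with a standard-normal prior."""
    theta_outer = rng.normal(size=n_outer)                 # theta_n ~ p(theta)
    y = theta_outer * d + rng.normal(size=n_outer)         # y_n ~ p(y | theta_n, d)
    theta_inner = rng.normal(size=n_inner)                 # fresh prior draws
    log_lik = log_likelihood(y, theta_outer, d)
    # log p(y_n | d) ~ log mean_m p(y_n | theta_m, d), computed stably.
    inner = log_likelihood(y[:, None], theta_inner[None, :], d)
    log_evidence = np.logaddexp.reduce(inner, axis=1) - np.log(n_inner)
    return float(np.mean(log_lik - log_evidence))

estimates = {}
for d in (0.5, 1.0, 2.0):
    estimates[d] = eig_nested_mc(d)
    exact = 0.5 * np.log(1.0 + d**2)    # closed form for this linear-Gaussian model
    print(f"d={d}: nested MC = {estimates[d]:.3f}, exact = {exact:.3f}")
```

Larger designs are more informative in this toy model because the signal θd grows relative to the fixed noise. The inner average is what makes the estimator expensive, which motivates the variational and lower-bound estimators offered by BOED libraries.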

Robust Expected Information Gain (REIG) is an extension that addresses the sensitivity of EIG to changes in the model's prior distribution. It minimizes an affine relaxation of EIG over an ambiguity set of distributions close to the original prior, leading to more stable and reliable experimental designs [20].

The Scientist's Toolkit: Essential Research Reagents and Software

The following table catalogues key computational tools and conceptual "reagents" essential for research involving simulator models and EIG optimization.

Table 1: Key Research Reagents and Software Solutions

| Item Name | Type | Primary Function |

|---|---|---|

| Pyro | Software Library | A probabilistic programming language used for defining models and performing Bayesian Optimal Experimental Design, including EIG estimation [16]. |

| AnyLogic | Simulation Software | A multi-method simulation platform supporting agent-based, discrete event, and system dynamics modeling for complex systems in healthcare, logistics, and more [21]. |

| COMSOL Multiphysics | Simulation Software | An environment for modeling and simulating physics-based systems, ideal for engineering and scientific applications [21]. |

| Simulations Plus | Simulation Software | A specialized tool for AI-powered modeling in pharmaceutical processes, including drug interactions and efficacy simulations [21]. |

| Prior Distribution | Conceptual Model Component | Encodes pre-existing knowledge or assumptions about the model parameters before new data is observed [16]. |

| Likelihood Function | Conceptual Model Component | Defines the probability of the observed data given the model parameters and experimental design, forming the core of the simulator [16]. |

| Ambiguity Set | Conceptual Model Component | A set of probability distributions close to a nominal prior (e.g., in KL-divergence), used in robust EIG to account for prior uncertainty [20]. |


Various methods exist for estimating the EIG, each with its own advantages, limitations, and computational trade-offs. The choice of estimator depends on factors such as the model's complexity, the dimensionality of the parameter space, and the required accuracy.

Table 2: Comparison of Expected Information Gain (EIG) Estimation Methods

| Method | Core Principle | Key Parameters | Best-Suited For |

|---|---|---|---|

| Nested Monte Carlo (NMC) [16] | A direct, double-loop Monte Carlo approximation of the EIG equation. | N (outer samples), M (inner samples) | Models where likelihood evaluation is cheap; provides a straightforward but computationally expensive baseline. |

| Variational Inference (VI) [16] | Approximates the posterior with a simpler, parametric distribution and optimizes a lower bound on the EIG. | Guide function, number of optimization steps, loss function (e.g., ELBO). | Complex models where stochastic optimization is more efficient than sampling. |

| Laplace Approximation [16] | Approximates the posterior as a Gaussian distribution centered at its mode. | Guide, optimizer, number of gradient steps. | Models where the posterior is unimodal and approximately Gaussian. |

| Donsker-Varadhan (DV) [16] | Uses a neural network to approximate the EIG via a variational lower bound derived from the DV representation. | Neural network T, number of training steps, optimizer. | High-dimensional problems; can be more sample-efficient than NMC. |

| Unbiased EIG Gradient (UEEG-MCMC) [19] | Estimates the gradient of EIG for optimization using Markov Chain Monte Carlo (MCMC) for posterior sampling. | MCMC sampler settings, number of samples. | Situations requiring gradient-based optimization of EIG, where robustness is key. |

Application Notes & Experimental Protocols

This section provides a detailed, step-by-step protocol for applying EIG to optimize an experimental design, using a simplified Bayesian model as an example. The model investigates the effect of a drug dosage (design d) on a binary outcome (e.g., patient response y), with an unknown efficacy parameter theta.

Protocol: EIG Maximization for a Simple Dose-Response Study

Objective: To identify the drug dosage level that maximizes the information gained about the drug's efficacy parameter.

Diagram 1: EIG Optimization Workflow

Materials and Software Requirements:

- A probabilistic programming framework (e.g., Pyro [16]).

- Python scientific computing stack (NumPy, PyTorch).

- The protocol below is implemented for a computational environment; no physical materials are required for the design phase.

Step-by-Step Procedure:

Model Specification:

- Define the Prior Distribution (`p(theta)`): The prior represents the initial belief about the drug's efficacy parameter, `theta`. A common choice is a Normal distribution: `theta ~ Normal(0, 1)`.

- Define the Likelihood Function (`p(y | theta, d)`): This models the relationship between the dose `d`, the efficacy `theta`, and the binary outcome `y`. A Bernoulli likelihood with a logistic link function is appropriate: `y ~ Bernoulli(logits = theta * d)`.

- Implement the Model in Code: Express the prior and likelihood as a model function in the chosen probabilistic programming framework, with the design `d` as an input.

Define the Design Space:

- The design space `D` is the set of all candidate dosages to be evaluated. For this example, define a tensor of dose values, e.g., `designs = torch.tensor([0.1, 0.5, 1.0, 2.0, 5.0])`.

Select and Configure an EIG Estimator:

- Choose an estimation method from Table 2. For its simplicity and direct interpretation, we will use the Nested Monte Carlo (NMC) estimator [16].

- Set the estimator's parameters. For NMC, this includes:

- `N`: Number of outer samples (e.g., 1000).

- `M`: Number of inner samples (e.g., 100).

Compute EIG Across the Design Space:

- For each candidate design `d` in `designs`, compute its EIG using the chosen estimator.

- In Pyro, this amounts to calling the estimator on the model for each candidate design and collecting the results in `eig_values`.

Identify the Optimal Design:

- The optimal design `d*` is the one with the highest EIG value: `optimal_design = designs[torch.argmax(torch.tensor(eig_values))]`.
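The steps of this protocol can also be sketched without a probabilistic programming framework. The block below is a plain NumPy stand-in for the dose-response model, not Pyro's API: it estimates the EIG in its equivalent form E[log p(y | theta, d) - log p(y | d)], approximating the marginal p(y | d) with an inner average over fresh prior draws. The function names (`bernoulli_loglik`, `nmc_eig`), the random seed, and the use of NumPy arrays in place of torch tensors are assumptions made for illustration.

```python
import numpy as np

def bernoulli_loglik(y, theta, d):
    """log p(y | theta, d) for y ~ Bernoulli(sigmoid(theta * d))."""
    logits = theta * d
    # log sigmoid(z) = -log(1 + exp(-z)); use -z for y = 1 and +z for y = 0.
    return -np.logaddexp(0.0, np.where(y == 1, -logits, logits))

def nmc_eig(d, n_outer=1000, n_inner=100, rng=None):
    """Nested Monte Carlo estimate of EIG(d) with N outer and M inner samples."""
    rng = np.random.default_rng(0) if rng is None else rng
    # Outer samples: draw (theta_n, y_n) from the joint p(theta) p(y | theta, d).
    theta = rng.standard_normal(n_outer)
    p = 1.0 / (1.0 + np.exp(-theta * d))
    y = (rng.random(n_outer) < p).astype(int)
    log_lik = bernoulli_loglik(y, theta, d)
    # Inner samples: fresh prior draws to approximate the marginal p(y_n | d).
    theta_inner = rng.standard_normal((n_outer, n_inner))
    inner = bernoulli_loglik(y[:, None], theta_inner, d)
    log_marginal = np.logaddexp.reduce(inner, axis=1) - np.log(n_inner)
    return float(np.mean(log_lik - log_marginal))

designs = np.array([0.1, 0.5, 1.0, 2.0, 5.0])
rng = np.random.default_rng(42)
eig_values = np.array([nmc_eig(d, rng=rng) for d in designs])
optimal_design = designs[np.argmax(eig_values)]
```

In this toy model the outcome becomes more sensitive to the sign of `theta` as the dose grows, so the estimate should increase with dose; a realistic study would restrict the design space to doses that are safe to administer.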

Validation and Robustness Check (Advanced):

- To account for uncertainty in the prior, consider implementing a Robust EIG (REIG) approach [20]. This involves minimizing an affine relaxation of the EIG over an ambiguity set of plausible priors, which can lead to a design that performs well under a wider range of true parameter values.

Application in Drug Development: A PK/PD Simulation Case Study

Context: A pharmaceutical company wants to design a clinical trial to learn about the pharmacokinetic (PK) and pharmacodynamic (PD) properties of a new drug. A complex simulator model exists that predicts drug concentration in the body (PK) and its subsequent effect (PD) based on parameters like clearance and volume of distribution.

Implementation:

- The PK/PD simulator is encoded as the likelihood function `p(y | theta, d)`, where `y` are observed concentration and effect measurements, `theta` are the unknown PK/PD parameters, and `d` includes design variables like dosage amount and sampling time points.

- EIG is calculated for different sampling schedules (e.g., sparse vs. frequent blood draws) and dosage regimens.

- The schedule that maximizes the EIG on the PK/PD parameters is selected for the actual trial. This ensures the most informative data is collected to precisely estimate the drug's properties, potentially reducing the number of subjects needed or the duration of the study [15].

Visualization of Method Relationships and Output

Understanding the relationships between different EIG estimation methods and the output of a simulation can guide methodological choices and interpretation of results.

Diagram 2: EIG Method Selection Criteria

The Economic and Ethical Imperative for Efficient Experiments

In fields such as drug development and scientific research, efficient experimentation is no longer a mere technical advantage but a fundamental economic and ethical necessity. The optimization of complex systems, where evaluating a single configuration is exceptionally resource-intensive or time-consuming, presents a significant challenge [14]. Adaptive experimentation, powered by machine learning (ML), offers a transformative solution by actively proposing optimal new configurations for sequential evaluation based on insights from previous data [14]. This approach directly addresses the high costs and protracted timelines inherent in traditional methods, particularly in pharmaceutical research. This document details the application notes and protocols for implementing these methodologies, providing researchers and drug development professionals with a practical framework for integrating efficient optimization into their experimental workflows, thereby accelerating discovery while responsibly managing resources.

The Case for Efficient Experimentation

Economic Drivers

The traditional paradigm of one-factor-at-a-time (OFAT) experimentation or exhaustive screening is economically unsustainable in high-dimensional spaces. In machine learning, for instance, tasks like hyperparameter optimization and neural architecture search can involve hundreds of tunable parameters, making exhaustive search prohibitively expensive [14]. The economic imperative is twofold:

- Reduced Direct Costs: Each experiment consumes valuable reagents, personnel time, and equipment hours. Bayesian optimization, a core method for adaptive experimentation, has been shown to identify optimal configurations with far fewer evaluations than traditional methods, leading to direct cost savings [14].

- Accelerated Time-to-Solution: In drug development, reducing the time from target identification to lead compound optimization has immense financial implications. Adaptive experimentation accelerates this process by systematically and intelligently guiding the experimental sequence towards promising regions of the parameter space, getting to an optimal result faster [14].

Ethical Imperatives

Beyond economics, efficient experimentation is an ethical obligation.

- Resource Stewardship: The responsible use of finite resources, including specialized chemicals, biological samples, and energy, is a core principle of sustainable science. Minimizing the number of experiments required to reach a conclusion is a direct manifestation of this stewardship.

- Reduction in Animal Testing: In preclinical research, optimization algorithms can be applied to in vitro assays to design more informative experiments, potentially reducing the number of animal studies required by identifying the most promising candidates and dosages earlier.

- Faster Therapeutic Development: Any methodology that can accelerate the development of new treatments for disease has an inherent ethical value. Efficient experimentation directly contributes to this goal by shortening the research timeline.

Core Machine Learning Methodology: Bayesian Optimization

At the heart of modern adaptive experimentation platforms like Ax lies Bayesian optimization (BO) [14]. This is an iterative approach for finding the global optimum of a black-box function that is expensive to evaluate, without requiring gradient information. The following protocol outlines its core mechanism.

Protocol: The Bayesian Optimization Loop

Objective: To find the configuration ( x^* ) that minimizes (or maximizes) an expensive-to-evaluate function ( f(x) ).

Materials/Reagents:

- Surrogate Model: A probabilistic model, typically a Gaussian Process (GP), used to approximate the unknown function ( f(x) ) [14].

- Acquisition Function: A function that determines the next configuration to evaluate by balancing exploration (trying uncertain regions) and exploitation (refining known good regions) [14].

- Historical Data (Optional): Any prior evaluations of the system to initialize the model.

Procedure:

- Initialization: Select a small set of initial configurations (e.g., via random or Latin Hypercube sampling) and evaluate them to form an initial dataset ( D = \{ (x_1, y_1), \ldots, (x_n, y_n) \} ).

- Model Fitting: Fit the surrogate model (e.g., GP) to the current dataset ( D ). The GP will provide a predictive mean and uncertainty (variance) for any configuration ( x ) [14].

- Candidate Selection: Optimize the acquisition function (e.g., Expected Improvement, EI) to propose the next most promising configuration ( x_{n+1} ) [14]: ( x_{n+1} = \arg\max_x \text{EI}(x) ).

- Parallel Evaluation (Optional): For batch experiments, the acquisition function can be extended to propose a batch of candidates simultaneously.

- Evaluation: Conduct the experiment with configuration ( x_{n+1} ) and observe the outcome ( y_{n+1} ).

- Update: Augment the dataset: ( D = D \cup \{ (x_{n+1}, y_{n+1}) \} ).

- Iteration: Repeat steps 2-6 until a stopping criterion is met (e.g., experimental budget exhausted, performance convergence).
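The loop above can be sketched in a few dozen lines of NumPy. This is an illustrative toy, not the Ax implementation: it assumes a one-dimensional search space, a zero-mean GP with a fixed squared-exponential kernel (no hyperparameter fitting), a grid of candidates instead of a continuous acquisition optimizer, and a hypothetical `objective` function standing in for the expensive experiment. The optional parallel-evaluation step is omitted.

```python
import math
import numpy as np

def rbf_kernel(a, b, length_scale=0.2):
    """Squared-exponential kernel between two sets of 1-D points."""
    diff = a[:, None] - b[None, :]
    return np.exp(-0.5 * (diff / length_scale) ** 2)

def gp_posterior(x_train, y_train, x_query, noise=1e-5):
    """Predictive mean and standard deviation of a zero-mean GP."""
    k_tt = rbf_kernel(x_train, x_train) + noise * np.eye(len(x_train))
    k_tq = rbf_kernel(x_train, x_query)
    solved = np.linalg.solve(k_tt, np.column_stack([y_train, k_tq]))
    mean = k_tq.T @ solved[:, 0]
    var = 1.0 - np.sum(k_tq * solved[:, 1:], axis=0)
    return mean, np.sqrt(np.clip(var, 1e-12, None))

def expected_improvement(mean, std, best):
    """EI for maximization: E[max(f - best, 0)] under the GP posterior."""
    z = (mean - best) / std
    pdf = np.exp(-0.5 * z ** 2) / math.sqrt(2.0 * math.pi)
    cdf = 0.5 * (1.0 + np.vectorize(math.erf)(z / math.sqrt(2.0)))
    return (mean - best) * cdf + std * pdf

def objective(x):
    """Hypothetical stand-in for an expensive experiment (peak at x = 0.7)."""
    return -(x - 0.7) ** 2

rng = np.random.default_rng(0)
candidates = np.linspace(0.0, 1.0, 201)

# Initialization: a few random configurations.
x_obs = rng.uniform(0.0, 1.0, size=3)
y_obs = objective(x_obs)

for _ in range(10):
    mean, std = gp_posterior(x_obs, y_obs, candidates)   # model fitting
    ei = expected_improvement(mean, std, y_obs.max())    # candidate selection
    x_next = candidates[np.argmax(ei)]
    y_next = objective(x_next)                           # evaluation
    x_obs = np.append(x_obs, x_next)                     # update
    y_obs = np.append(y_obs, y_next)

best_x = x_obs[np.argmax(y_obs)]
```

In practice a platform such as Ax handles kernel fitting, acquisition optimization, and batching; the sketch only shows how the surrogate and the acquisition function interact inside the loop.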

Visualization of the Bayesian Optimization Workflow:

The following diagram illustrates the iterative feedback loop of the Bayesian Optimization process.

Application Notes and Experimental Protocols

This section translates the core methodology into specific, actionable protocols for common experimental scenarios.

Protocol: Multi-Objective Optimization with Constraints

Application Context: Simultaneously optimizing a primary metric (e.g., drug efficacy) while minimizing a side-effect metric (e.g., cytotoxicity) and respecting safety constraints (e.g., maximum compound concentration).

Materials/Reagents:

- Platform: Ax adaptive experimentation platform [14].

- Objectives: Two or more outcome metrics to be optimized.

- Constraints: Limits on outcome metrics that must not be violated.

Procedure:

- Problem Formulation:

- Define the search space (e.g., drug concentration, temperature, pH).

- Specify the objectives (e.g., `Maximize: Efficacy`, `Minimize: Cytotoxicity`).

- Define any constraints (e.g., `Cytotoxicity < 0.5`).

- Algorithm Selection: Configure Ax to use a multi-objective Bayesian optimization algorithm, which will model a Pareto frontier of optimal trade-offs [14].

- Execution: Run the Bayesian optimization loop as described in the Bayesian Optimization Loop protocol above. The acquisition function will be tailored to improve the Pareto frontier.

- Analysis: Upon completion, use Ax's analysis suite to visualize the Pareto frontier, allowing stakeholders to select a configuration based on the desired trade-off [14].
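To make the notion of a Pareto frontier under an outcome constraint concrete, the sketch below filters a set of completed trials and keeps only the non-dominated ones. It is a plain-Python illustration, not Ax's API; the trial values are hypothetical and `pareto_front` is an illustrative helper name.

```python
def pareto_front(trials):
    """Return the trials that no other trial dominates.

    Each trial is (efficacy, cytotoxicity); efficacy is maximized and
    cytotoxicity is minimized.
    """
    front = []
    for i, (eff_i, tox_i) in enumerate(trials):
        dominated = any(
            eff_j >= eff_i and tox_j <= tox_i and (eff_j > eff_i or tox_j < tox_i)
            for j, (eff_j, tox_j) in enumerate(trials)
            if j != i
        )
        if not dominated:
            front.append((eff_i, tox_i))
    return front

# Hypothetical (efficacy, cytotoxicity) outcomes from completed trials.
trials = [
    (0.60, 0.20),
    (0.80, 0.45),
    (0.75, 0.50),
    (0.90, 0.70),
    (0.50, 0.10),
    (0.55, 0.30),
]
max_cytotoxicity = 0.5  # outcome constraint: Cytotoxicity < 0.5

# Apply the constraint first, then keep only the optimal trade-offs.
feasible = [t for t in trials if t[1] < max_cytotoxicity]
front = pareto_front(feasible)
```

Every point on the resulting frontier is a defensible choice; picking among them is a decision about how much cytotoxicity is acceptable for a given gain in efficacy.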

Protocol: High-Throughput Screening Triage

Application Context: Prioritizing a subset of compounds from a vast library for further testing based on early, low-fidelity assay results.

Materials/Reagents:

- High-Throughput Screening (HTS) Robot

- Primary (Low-Fidelity) Assay

- Secondary (High-Fidelity) Assay

Procedure:

- Initialization: Run the primary assay on a large, diverse subset of the compound library.

- Model Fitting: Use the primary assay data to train a model (e.g., GP) that predicts the outcome of the more expensive secondary assay.

- Active Selection: Instead of screening the entire remaining library, use an acquisition function (e.g., Probability of Improvement) to sequentially select the most promising compounds for the secondary assay based on the model's predictions.

- Iteration: Continuously update the model with new secondary assay results to refine the selection of subsequent compounds. This focuses resources on the most informative and promising candidates.
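The active-selection step can be illustrated with Probability of Improvement computed from a model's predictive mean and standard deviation. The sketch assumes Gaussian predictions; the compound names, predicted values, and the current best result are all hypothetical.

```python
import math

def probability_of_improvement(mean, std, best, xi=0.0):
    """P(f > best + xi) under a Gaussian prediction N(mean, std^2)."""
    z = (mean - best - xi) / std
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

# Hypothetical model predictions (mean, std) of secondary-assay activity.
predictions = {
    "cmpd_A": (0.70, 0.05),
    "cmpd_B": (0.60, 0.30),
    "cmpd_C": (0.85, 0.10),
    "cmpd_D": (0.40, 0.02),
}
best_observed = 0.75  # best secondary-assay result so far (assumed)

scores = {
    name: probability_of_improvement(mean, std, best_observed)
    for name, (mean, std) in predictions.items()
}
# Compounds ordered from most to least likely to beat the current best.
ranked = sorted(scores, key=scores.get, reverse=True)
```

Note that `cmpd_B` ranks above `cmpd_A` despite its lower predicted mean, because its larger uncertainty leaves more probability mass above the current best; this is the exploration side of the acquisition function.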

Quantitative Data and Standards

Table: WCAG Color Contrast Standards for Data Visualization

Adhering to accessibility standards, such as the Web Content Accessibility Guidelines (WCAG), is an ethical requirement for clear data communication. The following table summarizes the minimum contrast ratios for text in visualizations [22] [23].

| Text Type | Definition | Minimum Contrast (Level AA) [23] | Enhanced Contrast (Level AAA) [22] |

|---|---|---|---|

| Normal Text | Text smaller than 18pt (24px) or 14pt (18.7px) if bold | 4.5:1 | 7:1 |

| Large Text | Text at least 18pt (24px) or 14pt (18.7px) and bold | 3:1 | 4.5:1 |
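The thresholds in the table can be checked programmatically. The sketch below follows the WCAG 2.x definitions of relative luminance and contrast ratio for sRGB colors; the helper names and the example colors are illustrative choices.

```python
def relative_luminance(rgb):
    """WCAG relative luminance of an sRGB color given as 0-255 integers."""
    def linearize(channel):
        c = channel / 255.0
        return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4
    r, g, b = (linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b

def contrast_ratio(fg, bg):
    """Contrast ratio between two colors, from 1:1 up to 21:1."""
    l1, l2 = relative_luminance(fg), relative_luminance(bg)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)

def passes_aa(fg, bg, large_text=False):
    """Level AA check: 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(fg, bg) >= (3.0 if large_text else 4.5)

white = (255, 255, 255)
black_on_white = contrast_ratio((0, 0, 0), white)
gray_ok = passes_aa((118, 118, 118), white)   # #767676 on white
gray_fail = passes_aa((119, 119, 119), white) # #777777 on white
```

Running such a check on axis labels, legends, and annotations before publishing a figure is a cheap way to catch low-contrast text.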

Table: Key Research Reagent Solutions for ML-Driven Experimentation

| Item | Function in Experiment | Example/Notes |

|---|---|---|

| Adaptive Experimentation Platform (e.g., Ax) | Core software to manage the optimization loop, host surrogate models, and suggest new trials [14]. | pip install ax-platform [14] |

| Surrogate Model (Gaussian Process) | Probabilistic model that learns from experimental data to predict outcomes and quantify uncertainty for untested configurations [14]. | Flexible, data-efficient, provides uncertainty estimates. |

| Acquisition Function (e.g., Expected Improvement) | Algorithmic component that decides the next experiment by balancing exploration and exploitation [14]. | Directs the search towards global optima. |

| Data Logging System | Structured database (e.g., SQL, CSV) to meticulously record all experimental parameters, conditions, and outcomes for each trial. | Essential for model training and reproducibility. |

Visualization and Workflow Design

Creating clear and accessible visualizations is critical for interpreting complex experimental results. The following diagram outlines a high-level workflow for deploying adaptive experimentation in a research program.

Key Applications in Drug Discovery and Development

AI-Driven Optimization of Drug Synthesis Pathways

The application of Artificial Intelligence (AI) in optimizing drug synthesis pathways represents a transformative shift from traditional, resource-intensive experimental methods to data-driven, in-silico planning. AI methodologies enhance the efficiency, yield, and sustainability of synthesizing Active Pharmaceutical Ingredients (APIs) [24].

Application Note: Retrosynthetic Analysis and Reaction Optimization

Objective: To accelerate the planning of complex molecular synthesis and optimize reaction conditions (e.g., temperature, solvent, catalyst) to maximize yield and purity while reducing costs and environmental impact [24].

Background: Traditional retrosynthetic analysis relies on expert knowledge and is often a slow, iterative process. Similarly, optimizing reaction conditions through laboratory experimentation is time-consuming and expensive. AI models can learn from vast databases of known chemical reactions to predict viable synthetic routes and optimal parameters with high accuracy [24].

Protocol: AI-Powered Synthesis Planning and Optimization

Materials and Reagents:

- Chemical Reaction Databases: (e.g., Reaxys, SciFinder) for training AI models.

- Computational Resources: High-performance computing (HPC) clusters or cloud platforms (AWS, Google Cloud, Azure) [25].

- Software & Libraries: AI frameworks (TensorFlow, PyTorch), and specialized cheminformatics toolkits (RDKit) [25].

Methodology:

- Data Curation and Preprocessing:

- Assemble a dataset of chemical reactions, including reactants, products, conditions (solvent, temperature, catalyst), and yields [24].

- Standardize molecular representations (e.g., SMILES strings) and convert them into numerical features suitable for machine learning, such as molecular fingerprints or graph-based representations [24] [26].

Model Training for Retrosynthetic Analysis:

Model Training for Reaction Condition Optimization:

- Apply a Bayesian Optimization framework or a Random Forest model.

- Train the model to map molecular features of reactants to the optimal combination of reaction parameters that maximize a defined objective function (e.g., yield) [24].

Prediction and Validation:

- Retrosynthetic Analysis: Input the target drug molecule. The AI model will generate multiple plausible retrosynthetic pathways. These pathways are ranked based on learned feasibility, cost, or step-count [24].

- Reaction Optimization: Input the specific reaction to be optimized. The AI model suggests a set of promising reaction conditions for experimental testing [24].

- Experimental Verification: The top-ranked AI suggestions are executed in the laboratory for validation [24].

Table 1: Key AI Techniques for Synthesis Optimization

| AI Technique | Application in Synthesis | Key Advantage |

|---|---|---|

| Transformer Models [24] [27] | Predicts retrosynthetic steps and reaction outcomes. | Excels at processing sequential data like SMILES strings. |

| Graph Neural Networks (GNNs) [24] [26] | Models molecules as graphs for property and reaction prediction. | Naturally represents molecular structure and bonding. |

| Bayesian Optimization [24] | Iteratively optimizes complex reaction conditions. | Efficiently navigates multi-parameter spaces with few experiments. |

| Reinforcement Learning (RL) [24] | Discovers novel synthetic routes by exploring chemical space. | Capable of finding non-obvious, highly efficient pathways. |

AI-Driven Synthesis Optimization Workflow

Machine Learning for Multi-Target Drug Discovery

The single-target drug discovery paradigm is often inadequate for complex diseases like cancer and neurodegenerative disorders. Machine learning enables a systems pharmacology approach for designing multi-target drugs that modulate several disease pathways simultaneously, potentially leading to improved efficacy and reduced resistance [26].

Application Note: Polypharmacology Profiling

Objective: To predict the interaction profile of a compound across multiple biological targets (e.g., kinases, GPCRs) to identify promising multi-target drug candidates or assess off-target effects early in development [26].

Background: Experimental screening of a compound against hundreds of targets is prohibitively expensive. ML models can learn from chemical and biological data to predict Drug-Target Interactions (DTIs) in silico, prioritizing compounds with a desired polypharmacological profile [26].

Protocol: Predicting Multi-Target Interactions

Materials and Reagents:

- Drug-Target Interaction Databases: (e.g., ChEMBL, BindingDB, DrugBank) for model training [26].

- Molecular Representations: Compound fingerprints (ECFP), SMILES strings, and protein sequences or embeddings from pre-trained language models (e.g., ProtBERT) [26].

- Computing Environment: As above.

Methodology:

- Dataset Construction:

- Create a labeled dataset where each sample is a drug-target pair, and the label indicates whether an interaction occurs (and optionally, the binding affinity) [26].

Feature Engineering:

Model Training and Evaluation:

- Model Selection: Employ a multi-task deep learning model or a Graph Neural Network that can jointly learn from drug and target features. This allows for simultaneous prediction of interactions with multiple targets [26].

- Training: Train the model to classify or regress the interaction strength for each drug-target pair.

- Validation: Use cross-validation and hold-out test sets to evaluate performance using metrics like AUC-ROC and precision-recall [26].
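As a small illustration of the validation metric, AUC-ROC can be computed directly from its ranking interpretation: the probability that a randomly chosen interacting pair receives a higher score than a randomly chosen non-interacting pair. The labels and scores below are hypothetical, and the pairwise loop is meant for clarity rather than for large datasets.

```python
def roc_auc(labels, scores):
    """AUC-ROC as the fraction of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical held-out drug-target pairs: 1 = interaction, 0 = none.
labels = [1, 0, 1, 1, 0, 0]
scores = [0.9, 0.3, 0.6, 0.4, 0.5, 0.2]  # model-predicted interaction scores

auc = roc_auc(labels, scores)
```

Here eight of the nine positive-negative pairs are ordered correctly, giving an AUC of 8/9. Because interaction datasets are usually dominated by non-interacting pairs, precision-recall metrics are worth reporting alongside AUC-ROC.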

Prospective Prediction and Screening:

- Use the trained model to screen virtual libraries of compounds against a predefined set of disease-relevant targets.

- Rank compounds based on their predicted multi-target activity profile for further experimental validation [26].

Table 2: Data Sources for Multi-Target Drug Discovery

| Data Source | Content Description | Application in ML |

|---|---|---|

| ChEMBL [26] | Database of bioactive molecules with drug-like properties. | Primary source for drug-target interaction labels and bioactivity data. |

| BindingDB [26] | Measured binding affinities for drug-target pairs. | Training data for regression models predicting interaction strength. |

| DrugBank [26] [28] | Comprehensive drug and target information. | Source for known drug-target networks and drug metadata. |

| STITCH [26] | Database of known and predicted chemical-protein interactions. | Expands training data with predicted interactions. |

Multi-Target Drug Prediction Workflow

Causal Machine Learning with Real-World Data in Clinical Development

The integration of Real-World Data (RWD) and Causal Machine Learning (CML) addresses key limitations of Randomized Controlled Trials (RCTs), such as limited generalizability and high cost, by generating robust evidence on drug effectiveness and safety in diverse patient populations [29].

Application Note: Enhancing Clinical Trials with External Control Arms and Treatment Effect Heterogeneity

Objective: To supplement or create control arms using RWD when RCTs are infeasible or unethical, and to identify subgroups of patients that demonstrate superior or inferior response to a treatment [29].

Background: RWD from electronic health records (EHRs), claims data, and patient registries captures the treatment journey of a vast number of patients outside strict trial protocols. CML methods can account for confounding biases in this observational data to estimate causal treatment effects [29].

Protocol: Constructing External Control Arms and Estimating Heterogeneous Treatment Effects

Materials and Reagents:

- RWD Sources: De-identified EHRs, insurance claims databases, and disease registries [29].

- Clinical Trial Data: Patient-level data from the interventional arm of a study.

- Software: Statistical software (R, Python) with CML libraries (e.g., `EconML`, `CausalML`).

Methodology:

- Data Harmonization:

- Define a common data model to align variables (e.g., demographics, lab values, comorbidities) between the RWD and the clinical trial data [29].

Cohort Definition:

- Apply identical inclusion and exclusion criteria to both the RWD population and the trial's intervention arm to create a comparable cohort [29].

Causal Effect Estimation:

- Propensity Score Modeling: Use ML models (e.g., Boosted Trees) to estimate the propensity score—the probability of a patient being in the treatment group given their covariates. This model is trained on the pooled data (RWD + trial arm) [29].

- Creating a Balanced Cohort: Apply inverse probability of treatment weighting (IPTW) or matching to create a weighted RWD cohort that is statistically similar to the trial arm across all measured baseline covariates [29].

- Outcome Analysis: Compare the outcome of interest (e.g., survival, response rate) between the trial arm and the weighted RWD external control arm. Advanced methods like Targeted Maximum Likelihood Estimation (TMLE) can provide doubly robust estimates [29].
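The weighting step can be sketched as follows. The example assumes the propensity scores are already available (in practice they would come from a fitted ML model) and uses a tiny, fully synthetic dataset with one binary covariate. It applies ATT-style weights, where trial patients keep weight 1 and external controls receive the odds e(x) / (1 - e(x)), which is one common choice when the target population is the trial arm; the function names are illustrative.

```python
import numpy as np

def att_weights(in_trial, propensity):
    """Weights that reweight external controls to resemble the trial arm."""
    in_trial = np.asarray(in_trial, dtype=bool)
    e = np.clip(np.asarray(propensity, dtype=float), 1e-6, 1 - 1e-6)
    # Trial patients: weight 1. Controls: odds of trial membership.
    return np.where(in_trial, 1.0, e / (1.0 - e))

def weighted_mean_difference(outcome, in_trial, weights):
    """Weighted outcome mean in the trial arm minus the weighted control mean."""
    outcome = np.asarray(outcome, dtype=float)
    in_trial = np.asarray(in_trial, dtype=bool)
    treated = np.average(outcome[in_trial], weights=weights[in_trial])
    control = np.average(outcome[~in_trial], weights=weights[~in_trial])
    return treated - control

# Synthetic data: covariate x is common in the trial arm but rare in the RWD.
in_trial = np.array([1, 1, 1, 1, 1, 0, 0, 0, 0, 0], dtype=bool)
x = np.array([1, 1, 1, 1, 0, 1, 0, 0, 0, 0], dtype=float)
outcome = 1.0 * x + 0.5 * in_trial          # simulated true effect is 0.5
propensity = np.where(x == 1, 0.8, 0.2)     # P(trial | x) in the pooled data

weights = att_weights(in_trial, propensity)
naive = outcome[in_trial].mean() - outcome[~in_trial].mean()
adjusted = weighted_mean_difference(outcome, in_trial, weights)
```

The unweighted comparison overstates the effect (1.1) because the covariate itself raises the outcome and is more common in the trial arm; the weighted comparison recovers the simulated effect of 0.5. This only corrects for measured covariates, so unmeasured confounding remains a limitation.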

Heterogeneous Treatment Effect (HTE) Analysis:

- Use CML algorithms, such as causal forests, to model how the treatment effect varies across patient subgroups defined by their features (e.g., genomics, disease severity) [29].

- The model outputs a Conditional Average Treatment Effect (CATE) for each patient, identifying subgroups with enhanced or diminished response [29].

Table 3: Causal ML Methods for RWD Analysis

| Causal ML Method | Principle | Use-Case in Drug Development |

|---|---|---|

| Propensity Score Matching/IPTW [29] | Balances covariates between treated and untreated groups to mimic randomization. | Creating external control arms from RWD for historical comparison. |

| Doubly Robust Methods (TMLE) [29] | Combines outcome and propensity score models; provides a valid estimate if either model is correct. | Robust estimation of average treatment effect from observational data. |

| Causal Forests [29] | An ensemble method that estimates how treatment effects vary across subgroups. | Identifying patient subpopulations with the greatest treatment benefit (precision medicine). |

| Meta-Learners (S-Learner, T-Learner) [29] | Flexible frameworks using any ML model to estimate CATE. | Exploring heterogeneous treatment effects when the underlying model form is unknown. |
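Of the methods in the table, the T-learner is the simplest to write down: fit one outcome model per arm and take the difference of their predictions. The sketch below uses ordinary least squares as the base model and simulated, randomized data with a single hypothetical biomarker; with observational RWD, confounding adjustment would still be needed.

```python
import numpy as np

def fit_linear(x, y):
    """Least-squares fit of y = a + b * x; returns the coefficients (a, b)."""
    design = np.column_stack([np.ones_like(x), x])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef

def t_learner_cate(x, treated, y, x_new):
    """T-learner: separate outcome models per arm, CATE = mu1(x) - mu0(x)."""
    a1, b1 = fit_linear(x[treated], y[treated])
    a0, b0 = fit_linear(x[~treated], y[~treated])
    return (a1 + b1 * x_new) - (a0 + b0 * x_new)

# Simulated data: the treatment effect grows with the biomarker value.
rng = np.random.default_rng(0)
n = 400
x = rng.uniform(0.0, 1.0, n)        # hypothetical biomarker
treated = rng.random(n) < 0.5       # randomized assignment, for simplicity
true_cate = 2.0 * x
y = 1.0 + x + treated * true_cate + rng.normal(0.0, 0.1, n)

# Estimated effect for patients at the low and high end of the biomarker.
cate_hat = t_learner_cate(x, treated, y, np.array([0.0, 1.0]))
```

The estimates should be close to 0 for low-biomarker patients and close to 2 for high-biomarker patients, matching the simulated effect. Any regression model can replace `fit_linear`, which is what makes meta-learners flexible.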

Causal ML Analysis with RWD Workflow

The Scientist's Toolkit: Key Research Reagent Solutions

Table 4: Essential Research Reagents and Materials for AI-Driven Drug Discovery

| Reagent / Material | Function / Application | Example in Protocol |

|---|---|---|

| Curated Chemical Reaction Databases (Reaxys, SciFinder) | Provides structured, high-quality data for training AI models in synthesis prediction. | Foundation for the retrosynthetic analysis and reaction optimization protocol [24]. |

| Bioactivity Databases (ChEMBL, BindingDB) | Serves as the source of truth for known drug-target interactions, enabling supervised learning for DTI prediction. | Critical for building the multi-target drug discovery protocol [26]. |

| Molecular Graph Representation Toolkits (e.g., RDKit) | Converts chemical structures into graph or fingerprint representations that are processable by ML models. | Used in virtually all protocols for featurizing small molecules [24] [26]. |

| Pre-trained Protein Language Models (e.g., ESM, ProtBERT) | Generates numerical embeddings (vector representations) of protein sequences, capturing structural and functional semantics. | Used as target features in the multi-target prediction protocol [26]. |

| De-identified Real-World Data (EHRs, Claims Data) | Provides longitudinal, observational patient data for generating real-world evidence and building external control arms. | The primary data source for the causal ML clinical development protocol [29]. |

| High-Performance Computing (HPC) / Cloud Platforms (AWS, GCP, Azure) | Supplies the computational power required for training and running complex AI/ML models on large datasets. | An essential infrastructure component for all AI-driven discovery protocols [25]. |


From Theory to Practice: Implementing ML-Driven Experimental Design

A Step-by-Step Tutorial on BOED for Simulator Models

Bayesian Optimal Experimental Design (BOED) is a principled framework for optimizing experiments to collect maximally informative data for computational models. When studying complex systems, especially in fields like drug development, traditional experimental designs based on intuition or convention can be inefficient or fail to distinguish between competing computational models. BOED formalizes experimental design as an optimization problem, where controllable parameters of an experiment (designs, ξ) are determined by maximizing a utility function, typically the Expected Information Gain (EIG) [30] [31]. This approach is particularly powerful for simulator models—models where we can simulate data but may not be able to compute likelihoods analytically due to model complexity [30]. This tutorial provides a step-by-step protocol for applying BOED to simulator models, framed within the broader context of optimizing experimental conditions with machine learning.

Theoretical Foundation of BOED

Core Mathematical Principles

In BOED, the relationship between a model's parameters (θ), experimental designs (ξ), and observable outcomes (y) is described by a likelihood function or simulator, ( p(y | \xi, \theta) ). Prior knowledge about the parameters is encapsulated in a prior distribution, ( p(\theta) ). The core metric for evaluating an experimental design is the Expected Information Gain (EIG) [31].

The Information Gain (IG) for a specific design and outcome is the reduction in Shannon entropy from the prior to the posterior: [ \text{IG}(\xi, y) = H\big[ p(\theta) \big] - H \big[ p(\theta | y, \xi) \big] ]

Since the outcome ( y ) is unknown before the experiment, we use the EIG, which is the expectation of the IG over all possible outcomes: [ \text{EIG}(\xi) = \mathbb{E}_{p(y|\xi)}[\text{IG}(\xi, y)] ] where ( p(y|\xi) = \mathbb{E}_{p(\theta)} \big[ p(y|\theta, \xi) \big] ) is the marginal distribution of the outcomes. The optimal design ( \xi^* ) is the one that maximizes this quantity: ( \xi^* = \arg\max_\xi \text{EIG}(\xi) ) [31].
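It can help to see why sample-based estimators work with likelihoods rather than entropies. Assuming the prior does not depend on the design, expanding the entropies and applying Bayes' rule rewrites the EIG as the mutual information between ( \theta ) and ( y ):

```latex
\begin{aligned}
\text{EIG}(\xi)
  &= \mathbb{E}_{p(y|\xi)}\Big[ H\big[p(\theta)\big] - H\big[p(\theta | y, \xi)\big] \Big] \\
  &= \mathbb{E}_{p(\theta)\,p(y|\theta,\xi)}\big[ \log p(\theta | y, \xi) - \log p(\theta) \big] \\
  &= \mathbb{E}_{p(\theta)\,p(y|\theta,\xi)}\big[ \log p(y | \theta, \xi) - \log p(y | \xi) \big]
\end{aligned}
```

The last line involves only the likelihood and the marginal ( p(y|\xi) ). The marginal is the term that is generally intractable, and it is what estimators approximate by sampling or with a learned model.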

The Critical Role of Simulator Models

Simulator models, also known as generative or implicit models, are defined by the ability to simulate data from them, even if their likelihood functions are intractable [30]. This makes them highly valuable for modeling complex behavioral or biological phenomena. In drug development, a simulator could model a cellular signaling pathway or a patient's response to a treatment. BOED is exceptionally well-suited for such models because the EIG can be estimated using simulations, circumventing the need for analytical likelihood calculations [30].

A Step-by-Step BOED Protocol for Simulator Models

The following protocol is designed for researchers aiming to implement BOED for the first time. Key computational challenges and solutions are summarized in Table 1.

Table 1: Key Computational Challenges and Modern Solutions in BOED

| Challenge | Description | Modern Solution |

|---|---|---|

| EIG Intractability | The EIG and the posterior ( p(\theta \mid y, \xi) ) are generally intractable for simulator models [30]. | Use simulation-based inference (SBI) and machine learning methods to approximate the posterior and estimate the EIG [30]. |

| High-Dimensional Design | Optimizing over high-dimensional design spaces (e.g., complex stimuli) is computationally expensive. | Leverage recent advances, such as methods based on contrastive diffusions, which use a pooled posterior distribution for more efficient sampling and optimization [32]. |

| Real-Time Adaptive Design | Performing sequential, adaptive BOED in real-time is often computationally infeasible. | Use amortized methods like Deep Adaptive Design (DAD), which pre-trains a neural network policy to make millisecond design decisions during the live experiment [31]. |

Protocol Workflow

The diagram below outlines the core iterative workflow for a static (batch) BOED procedure.

Step-by-Step Methodology

Step 1: Formalize the Scientific Goal and Model

- Action: Precisely define the scientific question. Is the goal parameter estimation, model discrimination, or prediction?

- Protocol: Formalize your theory as a simulator model. The simulator must be a function that takes parameters θ and a design ξ as input and generates synthetic data y.

- Example: In a drug response study, θ could represent pharmacokinetic parameters, ξ the dosage and timing of administration, and y the measured biomarker levels.
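As a minimal sketch of what such a simulator function might look like, the Python snippet below implements a hypothetical one-compartment pharmacokinetic model. The function name `simulate_biomarker`, the parameterization (elimination rate and volume of distribution), the single-bolus assumption, and the Gaussian noise level are all illustrative assumptions and are not taken from the cited protocol.

```python
import math
import random


def simulate_biomarker(theta, xi, rng, noise_sd=0.1):
    """Hypothetical simulator: map parameters theta and design xi to one noisy outcome y.

    theta = (k_elim, volume): elimination rate (1/h) and volume of distribution (L).
    xi    = (dose, sample_time): bolus dose (mg) and measurement time (h).
    """
    k_elim, volume = theta
    dose, sample_time = xi
    # Noise-free concentration for a one-compartment model with first-order elimination.
    concentration = (dose / volume) * math.exp(-k_elim * sample_time)
    # Additive Gaussian measurement noise (an assumption made for illustration).
    return concentration + rng.gauss(0.0, noise_sd)


rng = random.Random(0)
y = simulate_biomarker(theta=(0.3, 10.0), xi=(100.0, 2.0), rng=rng)
print(f"simulated biomarker level: {y:.3f}")
```

The only property BOED relies on is the call signature: given ( \theta ) and ( \xi ), the function returns a synthetic ( y ). The internals can be arbitrarily complex.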

Step 2: Define the Prior and Design Space

- Action: Specify the prior distribution ( p(\theta) ) and the space of possible designs Ξ.

- Protocol:

  - Prior (( p(\theta) )): Encode existing knowledge or plausible ranges for the model parameters. This can be informed by literature or preliminary data.

  - Design Space (Ξ): Define all controllable aspects of the experiment (e.g., stimulus levels, measurement timings, compound concentrations). This space can be discrete or continuous.
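The sketch below shows one way the prior and a discrete design space could be written down in Python. The log-normal priors, their medians and spreads, and the candidate doses and sampling times are hypothetical placeholders chosen for illustration. In practice they would come from literature values or pilot data.

```python
import itertools
import math
import random


def sample_prior(rng):
    """Draw theta = (k_elim, volume) from hypothetical log-normal priors."""
    k_elim = rng.lognormvariate(math.log(0.3), 0.5)   # median 0.3 per hour
    volume = rng.lognormvariate(math.log(10.0), 0.2)  # median 10 litres
    return (k_elim, volume)


# Discrete design space: every combination of candidate dose and sampling time.
doses_mg = [25.0, 50.0, 100.0]
sample_times_h = [0.5, 1.0, 2.0, 4.0, 8.0]
design_space = list(itertools.product(doses_mg, sample_times_h))

rng = random.Random(0)
prior_draws = [sample_prior(rng) for _ in range(1000)]
print(f"{len(design_space)} candidate designs, {len(prior_draws)} prior draws")
```

Log-normal priors are used here only because they keep both parameters strictly positive. A continuous design space would replace the grid with bounds passed to an optimizer.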

Step 3: Select and Implement an EIG Estimation Method

- Action: Choose a computational method to estimate the EIG for a given design ξ.

- Protocol: For simulator models, use a likelihood-free estimation method. A common baseline is Nested Monte Carlo (NMC), which needs the likelihood, or a learned proxy for it when the simulator is implicit, to be evaluable:

  - Draw K parameter samples from the prior: ( \theta_k \sim p(\theta) ).

  - For each ( \theta_k ), simulate one outcome: ( y_k \sim p(y | \theta_k, \xi) ).

  - For each ( y_k ), draw L fresh parameter samples from the prior, ( \theta_l^{(k)} \sim p(\theta) ), and average the likelihood (or its proxy) of ( y_k ) over them to estimate the marginal ( p(y_k | \xi) ).

  The EIG is then approximated as: [ \widehat{\text{EIG}}_{\text{NMC}}(\xi) = \frac{1}{K} \sum_{k=1}^K \left[ \log p(y_k | \theta_k, \xi) - \log \left( \frac{1}{L} \sum_{l=1}^L p(y_k | \theta_l^{(k)}, \xi) \right) \right] ] Note that this is computationally intensive: it requires on the order of K×L likelihood evaluations (or simulations, when a proxy must be built from simulated data) for every candidate design. Recent methods like contrastive diffusions offer more efficient alternatives [32].
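The NMC steps above can be sketched in a few lines of Python. To keep the example checkable, it assumes a toy linear-Gaussian model, ( y = \theta \xi + \varepsilon ) with ( \theta \sim \mathcal{N}(0, 1) ) and Gaussian noise, whose likelihood is available in closed form. This model, the function names, and the sample sizes are assumptions made for illustration. For a genuinely implicit simulator, `log_likelihood` would have to be replaced by a learned or approximate proxy.

```python
import math
import random


def log_likelihood(y, theta, xi, noise_sd):
    """Gaussian log-density of y given theta and design xi (toy model: mean = theta * xi)."""
    mean = theta * xi
    return -0.5 * math.log(2.0 * math.pi * noise_sd ** 2) - 0.5 * ((y - mean) / noise_sd) ** 2


def nmc_eig(xi, rng, K=400, L=400, noise_sd=1.0):
    """Nested Monte Carlo estimate of the EIG for design xi."""
    total = 0.0
    for _ in range(K):
        # Outer sample: theta_k from the prior, then one simulated outcome y_k.
        theta_k = rng.gauss(0.0, 1.0)
        y_k = theta_k * xi + rng.gauss(0.0, noise_sd)
        # Inner samples: L fresh prior draws to estimate the marginal p(y_k | xi).
        inner = [log_likelihood(y_k, rng.gauss(0.0, 1.0), xi, noise_sd) for _ in range(L)]
        # Log of the average likelihood, computed stably (log-mean-exp).
        m = max(inner)
        log_marginal = m + math.log(sum(math.exp(v - m) for v in inner) / L)
        total += log_likelihood(y_k, theta_k, xi, noise_sd) - log_marginal
    return total / K


rng = random.Random(0)
estimate = nmc_eig(xi=1.0, rng=rng)
# For this toy model the exact EIG is 0.5 * log(1 + xi^2 / noise_sd^2).
exact = 0.5 * math.log(1.0 + 1.0 ** 2 / 1.0 ** 2)
print(f"NMC estimate: {estimate:.3f}, closed form: {exact:.3f}")
```

The closed-form value is available only because the toy model is linear and Gaussian. It serves here as a sanity check on the estimator, which is biased for finite L.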

Step 4: Optimize the Experimental Design

- Action: Find the design ( \xi^* ) that maximizes the estimated EIG.

- Protocol: Use a stochastic optimization algorithm, such as Bayesian optimization or a gradient-based method if gradients can be estimated. This is an iterative process where the EIG is estimated for candidate designs proposed by the optimizer until convergence.
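As a hedged illustration of this step, the sketch below runs the simplest possible optimizer, an exhaustive search over a handful of candidate designs, on a toy exponential-decay model ( y = e^{-\theta t} + \varepsilon ) where the design is the measurement time ( t ). The model, the prior, the candidate list, and the helper `estimate_eig` are all assumptions made for this example. Bayesian optimization or gradient-based search would replace the loop over candidates when the design space is large or continuous.

```python
import math
import random


def estimate_eig(t, seed=0, K=150, L=150, noise_sd=0.05):
    """NMC estimate of the EIG for measurement time t (toy exponential-decay model)."""
    # Re-seeding per design gives common random numbers, which reduces
    # noise in comparisons between designs.
    rng = random.Random(seed)

    def log_lik(y, theta):
        # Gaussian log-likelihood up to an additive constant; the constant
        # cancels between the two terms of the estimator.
        return -0.5 * ((y - math.exp(-theta * t)) / noise_sd) ** 2

    total = 0.0
    for _ in range(K):
        theta_k = rng.lognormvariate(0.0, 0.5)
        y_k = math.exp(-theta_k * t) + rng.gauss(0.0, noise_sd)
        inner = [log_lik(y_k, rng.lognormvariate(0.0, 0.5)) for _ in range(L)]
        m = max(inner)
        log_marginal = m + math.log(sum(math.exp(v - m) for v in inner) / L)
        total += log_lik(y_k, theta_k) - log_marginal
    return total / K


candidate_times = [0.0, 0.25, 0.5, 1.0, 2.0, 4.0, 50.0]
scores = {t: estimate_eig(t) for t in candidate_times}
best_t = max(scores, key=scores.get)
for t in candidate_times:
    print(f"t = {t:5.2f}  estimated EIG = {scores[t]:.3f}")
print(f"selected design: t = {best_t}")
```

The extreme candidates are included deliberately: measuring at ( t = 0 ) or long after the signal has decayed tells us almost nothing about ( \theta ), so the search should settle on an intermediate time.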

Step 5: Run the Experiment and Update Beliefs

- Action: Conduct the physical experiment using the optimized design ( \xi^* ), collect the real-world data ( y_{obs} ), and perform Bayesian inference.

- Protocol: Use simulation-based inference (e.g., approximate Bayesian computation, ABC) to compute the posterior distribution ( p(\theta | y_{obs}, \xi^*) ), which updates your understanding of the parameters. This posterior can serve as the new prior for a subsequent round of BOED.
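A minimal sketch of rejection ABC, the simplest form of simulation-based inference, is given below. It assumes a toy exponential-decay simulator, a log-normal prior, and a fixed acceptance tolerance. All three, along with the function names, are illustrative assumptions. The "observed" value is itself simulated here because no real data are available in this tutorial.

```python
import math
import random


def simulate(theta, xi, rng, noise_sd=0.05):
    """Toy simulator: exponential decay measured at time xi with Gaussian noise."""
    return math.exp(-theta * xi) + rng.gauss(0.0, noise_sd)


def abc_rejection(y_obs, xi, rng, n_draws=20000, tolerance=0.02):
    """Keep prior draws whose simulated outcome lands within `tolerance` of y_obs."""
    accepted = []
    for _ in range(n_draws):
        theta = rng.lognormvariate(0.0, 0.5)  # draw from the prior
        if abs(simulate(theta, xi, rng) - y_obs) < tolerance:
            accepted.append(theta)
    return accepted


rng = random.Random(0)
xi_star = 1.0                               # design chosen in the previous step
y_obs = simulate(1.5, xi_star, rng)         # stand-in for the real measurement
posterior_samples = abc_rejection(y_obs, xi_star, rng)
posterior_mean = sum(posterior_samples) / len(posterior_samples)
print(f"accepted {len(posterior_samples)} draws, posterior mean = {posterior_mean:.2f}")
```

The accepted draws approximate the posterior. A smaller tolerance tightens the approximation but lowers the acceptance rate, which is why more sample-efficient simulation-based inference methods are usually preferred for expensive simulators.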

Advanced Application: Sequential BOED

For a sequence of experiments, the goal is to choose each design ( \xi_{t+1} ) adaptively based on the history of previous designs and outcomes, ( h_t = (\xi_1, y_1, \dots, \xi_t, y_t) ). The following diagram contrasts two primary strategies.

Protocol for Sequential BOED

Myopic (One-Step Lookahead) Design:

- Procedure: At each step ( t+1 ), fit the posterior ( p(\theta | h_t) ) and use it as the prior to optimize the EIG for the next design, ( \xi_{t+1} ).

- Limitation: This strategy is often computationally infeasible for real-time experiments, as it requires intensive posterior computation and EIG optimization during the live experiment [31].

Amortized Design with Deep Adaptive Design (DAD):

- Procedure: Prior to the live experiment, train a neural network policy ( \pi ) that takes the history ( h_t ) as input and directly outputs the next design ( \xi_{t+1} ).

- Advantage: Design decisions during the live experiment are made in milliseconds via a single forward pass through the network, enabling real-time adaptive BOED [31].
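To make the myopic strategy concrete, the sketch below runs a one-step-lookahead loop on a deliberately small problem where everything can be computed exactly: a binary response with a logistic dose-response curve and a threshold parameter ( \theta ) restricted to a grid. The model, the grid, the candidate doses, and all function names are assumptions made for illustration. An amortized approach such as DAD would replace the per-step search over designs with a single forward pass through a pre-trained policy network.

```python
import math
import random

theta_grid = [i * 0.1 for i in range(101)]   # candidate thresholds 0.0 .. 10.0
designs = [float(d) for d in range(11)]      # candidate doses 0 .. 10


def p_response(theta, xi, slope=2.0):
    """Probability of a positive response at dose xi for threshold theta."""
    return 1.0 / (1.0 + math.exp(-slope * (xi - theta)))


def entropy(probs):
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def posterior_update(belief, xi, y):
    """Exact Bayes update of the grid belief after observing outcome y at dose xi."""
    weights = [b * (p_response(t, xi) if y == 1 else 1.0 - p_response(t, xi))
               for b, t in zip(belief, theta_grid)]
    total = sum(weights)
    return [w / total for w in weights]


def expected_information_gain(belief, xi):
    """Current entropy minus the expected posterior entropy over y in {0, 1}."""
    p1 = sum(b * p_response(t, xi) for b, t in zip(belief, theta_grid))
    eig = entropy(belief)
    for y, p_y in ((1, p1), (0, 1.0 - p1)):
        if p_y > 0.0:
            eig -= p_y * entropy(posterior_update(belief, xi, y))
    return eig


rng = random.Random(0)
true_theta = 6.3                              # hidden from the design procedure
belief = [1.0 / len(theta_grid)] * len(theta_grid)
history = []
for step in range(15):
    # Myopic choice: maximize the EIG under the current belief p(theta | h_t).
    xi_next = max(designs, key=lambda d: expected_information_gain(belief, d))
    y = 1 if rng.random() < p_response(true_theta, xi_next) else 0
    belief = posterior_update(belief, xi_next, y)
    history.append((xi_next, y))

posterior_mean = sum(b * t for b, t in zip(belief, theta_grid))
print("designs chosen:", [xi for xi, _ in history])
print(f"posterior mean threshold: {posterior_mean:.2f}")
```

Even in this toy setting each step requires a posterior update and a full sweep over candidate designs. With an intractable simulator both become expensive, which is the limitation noted above for the myopic approach.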

Successful implementation of BOED requires both computational resources and experimental reagents. Table 2 details key components of the research toolkit.

Table 2: Essential Research Reagents & Computational Resources for BOED

| Category | Item | Function & Description |
|---|---|---|
| Computational Resources | Simulator Model | The core computational model of the phenomenon under study. It must be capable of generating synthetic data ( y ) given parameters ( \theta ) and a design ( \xi ) [30]. |
| Computational Resources | High-Performance Computing (HPC) Cluster | BOED is computationally intensive. Parallel processing on an HPC cluster is often necessary for running vast numbers of simulations in a feasible time. |
| Computational Resources | BOED Software Package | Libraries such as the one provided in the accompanying GitHub repository offer pre-built tools for EIG estimation and design optimization [33]. |
| Experimental Reagents | Parameter-Specific Assays | Laboratory kits and techniques (e.g., ELISA, flow cytometry, qPCR) used to measure the experimental outcomes ( y ) that are predicted by the simulator. |
| Experimental Reagents | Titratable Compounds/Stimuli | Chemical compounds, growth factors, or other stimuli whose concentration, timing, and combination can be precisely controlled as the experimental design ( \xi ). |

Bayesian Optimal Experimental Design represents a paradigm shift in how experiments are conceived, moving from intuition-based to information-theoretic principles. For simulator models prevalent in complex domains like drug development, BOED provides a structured framework to maximize the value of each experiment, saving time and resources. While computational challenges remain, modern machine learning methods—from contrastive diffusions for static design to Deep Adaptive Design for sequential experiments—are making BOED increasingly practical and powerful. By following the protocols and utilizing the toolkit outlined in this tutorial, researchers can begin to integrate BOED into their own work, systematically optimizing experimental conditions to accelerate scientific discovery.

Leveraging Machine Learning for Automated Parameter Tuning

In machine learning (ML), hyperparameters are external configurations that are not learned from the data but are set prior to the training process. These parameters significantly control the model's behavior and performance. Automated hyperparameter tuning refers to the systematic use of algorithm-driven methods to identify the optimal set of hyperparameters for a given model and dataset. Mathematically, this process aims to solve the optimization problem ( \theta^* = \arg\min_{\theta \in \Theta} L(f(x; \theta), y) ), where ( \theta ) represents the hyperparameters, ( f(x; \theta) ) is the model, and ( L ) is the loss function measuring the discrepancy between predictions and true values ( y ) [34].
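The optimization problem above can be illustrated with the two simplest search strategies, grid search and random search. In the sketch below, `validation_loss` is a hypothetical stand-in for training a model and measuring its validation loss. Its functional form, the two hyperparameters, and their ranges are assumptions chosen so the example runs instantly.

```python
import itertools
import math
import random


def validation_loss(learning_rate, dropout):
    """Hypothetical stand-in for 'train the model, return the validation loss'."""
    return (math.log10(learning_rate) + 3.0) ** 2 + (dropout - 0.2) ** 2


# Grid search: evaluate every combination on a predefined grid.
lr_grid = [1e-5, 1e-4, 1e-3, 1e-2, 1e-1]
dropout_grid = [0.0, 0.2, 0.4]
grid_best = min(itertools.product(lr_grid, dropout_grid),
                key=lambda cfg: validation_loss(*cfg))

# Random search: same budget (15 evaluations), sampled from continuous ranges.
rng = random.Random(0)
random_configs = [(10 ** rng.uniform(-5, -1), rng.uniform(0.0, 0.5)) for _ in range(15)]
random_best = min(random_configs, key=lambda cfg: validation_loss(*cfg))

print("grid search best:  ", grid_best)
print("random search best:", random_best)
```

Sampling the learning rate on a log scale is a common convention rather than a requirement. The point of the comparison is that both strategies evaluate the same objective and differ only in how candidate values of ( \theta ) are proposed.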

The adoption of automated tuning brings substantial benefits over manual approaches. It reduces subjectivity by leveraging systematic search strategies that remove human bias, increases reproducibility through standardized methodologies, and optimizes resource usage by finding superior configurations faster than exhaustive manual searches [34]. In computationally intensive fields like drug discovery, where molecular property prediction models can require significant resources, efficient hyperparameter optimization (HPO) becomes particularly critical for developing accurate models without prohibitive computational costs [35].

Comparative Analysis of HPO Methods

Several algorithms have been developed for HPO, each with distinct mechanisms and advantages. The selection of an appropriate method depends on factors such as the complexity of the model, the dimensionality of the hyperparameter space, and available computational resources.

Table 1: Comparison of Hyperparameter Optimization Methods

| Method | Key Mechanism | Advantages | Limitations | Best-Suited Applications |
|---|---|---|---|---|
| Grid Search | Exhaustively evaluates all combinations in a predefined grid | Simple, guarantees finding best in grid | Curse of dimensionality; search time grows exponentially with parameters | Small hyperparameter spaces (<5 parameters) [34] |
| Random Search | Randomly samples combinations from defined space | More efficient than grid search; better resource allocation | May miss optimal regions; less systematic | Moderate spaces where some parameters matter more [34] |
| Bayesian Optimization | Uses probabilistic surrogate model to guide search | Balances exploration/exploitation; sample-efficient | Computational overhead for model updates; complex implementation | Expensive function evaluations [14] |
| Hyperband | Adaptive resource allocation with successive halving | Computational efficiency; fast elimination of poor configurations | May eliminate promising configurations early | Large-scale neural networks [35] |
| Evolutionary Algorithms | Population-based search inspired by natural selection | Effective for complex, non-convex spaces | High computational cost; many parameters to tune | Complex architectures with interacting parameters [34] |

Quantitative Performance Comparisons

Recent studies have quantitatively compared HPO methods across various domains. In molecular property prediction, researchers have demonstrated that Bayesian optimization and Hyperband consistently outperform traditional methods. For dense deep neural networks (DNNs) predicting polymer properties, Bayesian optimization achieved significant improvements in R² values compared to base models without HPO [35].

When comparing computational efficiency, the Hyperband algorithm has shown particular promise, providing optimal or nearly optimal molecular property values with substantially reduced computational requirements [35]. The combination of Bayesian Optimization with Hyperband (BOHB) has emerged as a powerful approach, leveraging the strengths of both methods—Bayesian optimization's intelligent search with Hyperband's computational efficiency [35].
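The source of Hyperband's efficiency is successive halving: many configurations are trained briefly, the worst are discarded, and the survivors receive a larger budget. The sketch below implements a single successive-halving bracket only (full Hyperband runs several brackets with different starting budgets). The learning-curve function `loss_after`, the configuration fields, and the reduction factor are hypothetical choices made for illustration.

```python
import random


def loss_after(config, budget):
    """Hypothetical learning curve: loss decays toward the configuration's floor."""
    return config["floor"] + config["speed"] / budget


def successive_halving(configs, min_budget=1, eta=3):
    """Keep the best 1/eta of configurations at each rung, multiplying the budget by eta."""
    survivors = list(configs)
    budget = min_budget
    rungs = []  # (number of configurations evaluated, budget per configuration)
    while len(survivors) > 1:
        rungs.append((len(survivors), budget))
        ranked = sorted(survivors, key=lambda c: loss_after(c, budget))
        survivors = ranked[: max(1, len(survivors) // eta)]
        budget *= eta
    return survivors[0], rungs


rng = random.Random(0)
configs = [{"id": i, "floor": rng.uniform(0.1, 1.0), "speed": rng.uniform(0.5, 2.0)}
           for i in range(27)]
winner, rungs = successive_halving(configs)
total_cost = sum(n * b for n, b in rungs)
print("rungs (n_configs, budget):", rungs)
print("total budget units spent:", total_cost)
print("selected configuration id:", winner["id"])
```

With 27 configurations and a reduction factor of 3, the bracket spends 81 budget units, compared with 243 if every configuration were trained to the largest budget used here (9 units). Because slow-starting configurations can be ranked poorly at small budgets, the selected configuration is not guaranteed to have the lowest floor, which is the early-elimination risk noted in the comparison table.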

In high-energy physics, automated parameter tuning for track reconstruction algorithms using frameworks like Optuna and Orion demonstrated rapid convergence to effective parameter settings, significantly improving both the speed and accuracy of particle trajectory reconstruction [36].

Essential Tools and Platforms

Popular HPO Software Libraries

The growing importance of automated hyperparameter tuning has spurred the development of specialized software libraries that implement various optimization algorithms.

Table 2: Key Software Platforms for Automated Hyperparameter Tuning

| Platform/Library | Primary Algorithms | Integration with ML Frameworks | Special Features | Application Context |

|---|---|---|---|---|

| Ax (Adaptive Experimentation) | Bayesian optimization, Multi-objective optimization | PyTorch, TensorFlow | Parallel executions, Sensitivity analysis, Production-ready [14] | Large-scale industrial applications, AI model tuning [14] |

| KerasTuner | Random search, Bayesian optimization, Hyperband | Keras/TensorFlow | User-friendly, Easy coding for non-experts [35] | Deep neural networks for molecular property prediction [35] |